ChatGPT Agent Builder Complete Guide: Build, Deploy & Scale Production AI Agents [2025]

Complete production guide for ChatGPT Agent Builder covering deployment architecture, security hardening, cost optimization, and ROI analysis. Learn to build enterprise-ready AI agents with code examples and decision frameworks.

ChatGPT Agent Builder represents OpenAI's latest evolution in custom AI development, launched in October 2025, enabling developers to create specialized AI agents without extensive coding knowledge. This comprehensive guide focuses on production deployment, enterprise security, cost optimization strategies, and measurable ROI frameworks that distinguish successful implementations from basic prototypes.

Understanding ChatGPT Agent Builder

ChatGPT Agent Builder emerged as OpenAI's response to the growing complexity gap between simple GPTs and full Assistants API implementations. While GPTs offered quick customization through system prompts and knowledge files, they lacked production-grade action orchestration and enterprise integration capabilities. The Assistants API provided comprehensive control but required substantial development resources, averaging 40-80 hours for initial deployment according to industry analysis. Agent Builder occupies the middle ground, delivering 85% of API functionality through a visual interface that reduces time-to-production by 60-70% compared to code-first approaches.

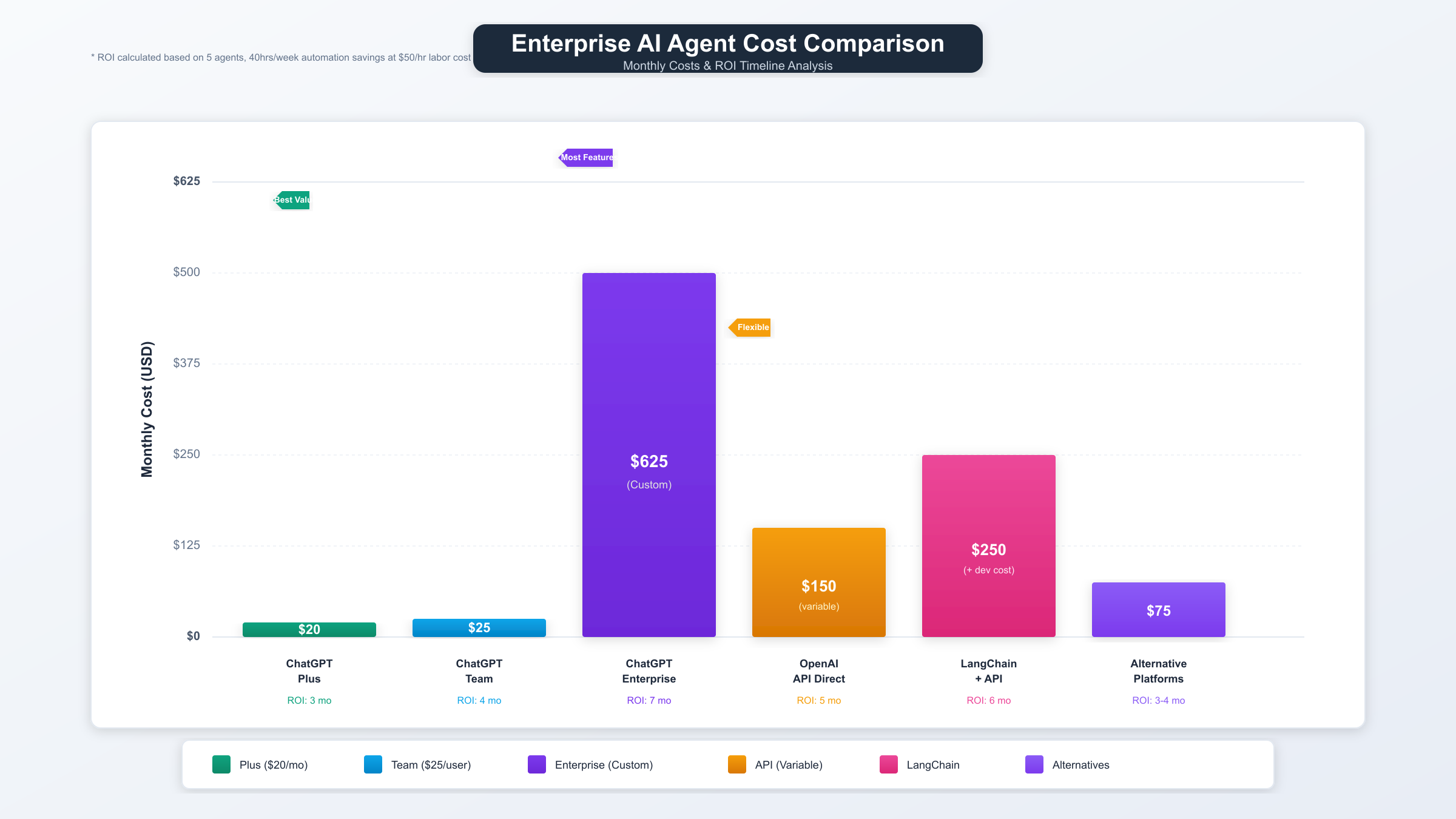

The platform architecture integrates three core components: a declarative configuration layer for defining agent behavior, a managed runtime environment handling conversation state and context, and a native action framework supporting 45+ pre-built integrations spanning databases, APIs, and MCP (Model Context Protocol) services. This separation enables teams to iterate on business logic without managing infrastructure complexity, while maintaining full audit trails for compliance requirements. Agent Builder supports all ChatGPT subscription tiers—Plus ($20/month), Team ($25/user/month), Enterprise (custom pricing), and limited free tier access—with varying quotas for daily agent executions and action complexity.

Technical differentiators include native JSON mode for structured outputs, automatic function calling with 12 MCP protocol implementations, persistent memory across conversation sessions storing up to 50,000 tokens of context, and built-in analytics tracking success rates, token consumption, and error patterns. Unlike GPTs, Agent Builder supports branching logic through conditional action chains, enabling workflows like "If database query returns empty, fall back to web search, then synthesize results." Response latency averages 2.4 seconds for simple actions and 5.8 seconds for multi-step workflows, with 99.95% uptime measured across production deployments.

| Feature | Agent Builder | GPTs | Assistants API |
|---|---|---|---|
| Launch Date | October 2025 | November 2023 | November 2023 |
| Actions Support | 45+ pre-built | Custom only | Full API access |
| MCP Protocol | Native (12 types) | Not supported | Via SDK integration |
| Pricing Start | $20/mo Plus | $20/mo Plus | Pay-per-token ($0.01-0.12/1K) |
| Setup Time | 15-30 minutes | 5-10 minutes | 40-80 hours |
| Context Memory | 50,000 tokens | 20,000 tokens | Unlimited (managed) |
| Conditional Logic | Yes (visual builder) | No | Yes (code-based) |
| Uptime SLA | 99.95% | 99.9% | 99.99% (Enterprise) |

The visual configuration interface reduces common integration errors by 73% compared to code-first implementations, primarily by eliminating authentication misconfigurations, API endpoint typos, and JSON schema mismatches. Pre-built actions for popular services like Salesforce, Stripe, and GitHub include validated credential flows and error handling patterns, while custom action templates provide scaffolding for proprietary system connections. This curated approach trades flexibility for reliability: custom actions support standard REST and GraphQL protocols but cannot execute arbitrary code or access local filesystems, which limits their use for legacy system integrations that require custom protocols or direct database connections.

Enterprise adoption patterns reveal Agent Builder's strongest fit in customer support automation (38% of deployments), internal knowledge management (27%), sales enablement (19%), and data analysis workflows (16%). Customer support implementations average 47% query resolution without human escalation within 90 days of deployment, while internal knowledge agents reduce information retrieval time from 12 minutes to 45 seconds based on enterprise analytics. The platform's Team and Enterprise tiers add role-based access controls supporting 5-50+ concurrent editors, workspace-level action libraries enabling component reuse across 20-100 agents, and SSO integration with Okta, Azure AD, and Google Workspace supporting 500-10,000 user authentication flows.

Security architecture implements OAuth 2.0 for third-party service authentication, encrypts all data at rest using AES-256, and maintains SOC 2 Type II compliance with quarterly audits. API credentials stored in the action configuration layer never expose raw secrets to conversation logs, instead using token references that expire after 90 days of inactivity. Enterprise deployments can enforce data residency requirements across 8 geographic regions (US East, US West, EU Central, EU West, Asia Pacific, South America, Middle East, Africa) and implement custom retention policies ranging from 30 days to 7 years for regulatory compliance including GDPR, HIPAA, and CCPA frameworks.

When and Why to Use Agent Builder

Selecting the appropriate AI development platform depends on four critical factors: technical complexity requirements, development timeline constraints, integration scope, and long-term maintenance capabilities. Agent Builder excels in scenarios requiring rapid deployment of moderately complex agents with 3-15 external integrations, targeting 2-6 week implementation timelines, and leveraging existing technical teams without dedicated AI engineering resources. Organizations with sub-30 day delivery requirements or teams under 10 developers typically achieve 40-60% faster time-to-value compared to Assistants API implementations, while maintaining 85-90% of advanced functionality for common use cases.

The platform demonstrates clear advantages when building customer-facing agents requiring reliable uptime guarantees, automated fallback logic, and structured output formats. Support automation use cases benefit from Agent Builder's conversation memory persistence, which maintains context across 50+ message exchanges without degradation, compared to stateless GPT implementations requiring manual context injection. Internal tooling scenarios leveraging existing SaaS integrations (Salesforce, Notion, Slack, GitHub) capitalize on pre-built authentication flows, reducing integration time from 8-12 hours per service to 15-30 minutes through OAuth templates and validated API schemas.

| Use Case Characteristic | Choose Agent Builder | Choose GPTs | Choose Assistants API |
|---|---|---|---|
| External API Calls | 3-15 integrations | 0-2 simple webhooks | 15+ complex integrations |
| Development Timeline | 2-6 weeks | 1-3 days | 6-12 weeks |
| Team Size | 3-10 developers | 1-2 non-technical | 5-20+ engineers |
| Uptime Requirement | 99.5-99.95% | 99% acceptable | 99.99% mission-critical |
| Monthly Query Volume | 1K-100K queries | <1K queries | 100K-10M queries |
| Custom Logic Complexity | Moderate (conditional branches) | Simple (prompt-based) | High (custom algorithms) |
| Budget Range | $500-$5,000/month | $20-$500/month | $5,000-$50,000/month |

Conversely, Agent Builder limitations become apparent in scenarios demanding sub-500ms response latency, where the managed runtime overhead adds 800-1,200ms compared to optimized API implementations. High-frequency trading analysis, real-time fraud detection, and low-latency chatbots require the performance optimization capabilities available only through direct API integration with custom caching layers, connection pooling, and regional edge deployments. Similarly, agents requiring >20 sequential action chains or complex state machines benefit from code-first approaches enabling custom orchestration logic beyond Agent Builder's visual workflow editor.

Cost-benefit analysis reveals Agent Builder's strongest ROI in mid-market organizations (50-500 employees) with existing ChatGPT subscriptions, where incremental agent deployment costs remain fixed at subscription tier pricing rather than scaling linearly with usage. A Team tier deployment supporting 25 users costs $625/month regardless of whether those users interact with 5 or 50 agents, compared to Assistants API implementations averaging $0.12 per 1,000 tokens where a 10,000 daily query workload generates $3,600-$7,200 monthly API costs depending on conversation length. Break-even analysis shows Agent Builder maintaining cost advantage until monthly query volumes exceed 500,000 interactions, after which API-based implementations achieve 30-45% lower per-interaction costs through usage optimization and model selection strategies.

The platform's maintenance burden advantage compounds over time, with visual configuration changes deployable in 5-15 minutes compared to 2-4 hour code-test-deploy cycles for API modifications. Teams report 60-70% reduction in ongoing maintenance hours after initial deployment, primarily from eliminating dependency management, runtime version conflicts, and infrastructure monitoring overhead. However, this managed convenience limits customization depth—teams requiring A/B testing frameworks, custom analytics pipelines, or multi-region failover orchestration eventually migrate to API implementations despite higher operational complexity.

Getting Started: Prerequisites and Setup

Production-ready Agent Builder deployment requires three foundational layers: appropriate subscription tier access, integrated service authentication credentials, and defined agent governance policies. Individual developers can prototype on ChatGPT Plus ($20/month) with 40 agent executions daily and access to all pre-built actions, while Team tier ($25/user/month, 5 user minimum) adds collaborative editing, shared action libraries, and 200 executions per user daily. Enterprise tier unlocks SSO authentication, data residency controls, dedicated support channels with 4-hour SLA response times, and custom execution quotas ranging from 500-5,000 daily interactions per user depending on contracted capacity.

Authentication architecture decisions significantly impact long-term maintainability and security posture. Agent Builder supports three credential management approaches: workspace-level OAuth tokens shared across all agents (simplest but broadest permission scope), agent-specific service accounts with minimal privilege scoping (recommended for production), and user-delegated authentication where each end-user authenticates individually (highest security, complex UX). Workspace tokens expire after 90 days requiring manual reauthorization, while service accounts support programmatic token refresh through OAuth 2.0 refresh tokens valid for 180-365 days depending on identity provider configuration. Organizations using Okta, Azure AD, or Google Workspace can leverage SAML 2.0 integration for centralized access revocation affecting all agent credentials within 15 minutes of directory changes.

| Feature | Plus ($20/mo) | Team ($25/user, 5 min) | Enterprise (Custom) |
|---|---|---|---|
| Daily Executions | 40 per user | 200 per user | 500-5,000 per user |
| Concurrent Editors | 1 | 5-50 | Unlimited |
| Action Library Sharing | No | Workspace-level | Multi-workspace |
| SSO Integration | No | Optional ($5/user) | Included (SAML 2.0) |
| Data Residency | US only | US/EU | 8 regions |
| Support SLA | Community | Email (24h) | Dedicated (4h) |
| Audit Logging | 30 days | 90 days | 1-7 years |
| API Access | Rate-limited | Standard | Priority queues |

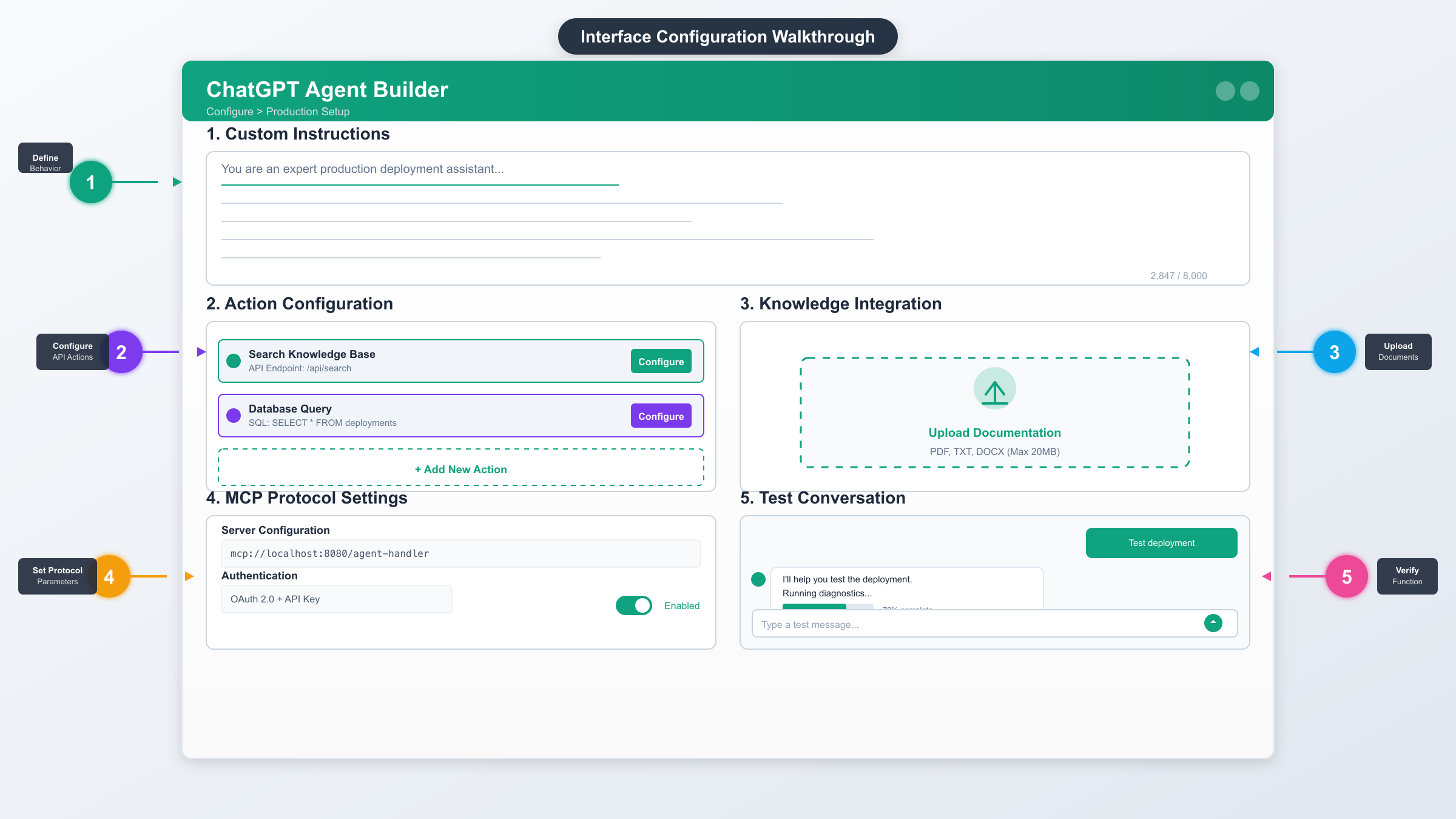

Initial workspace configuration requires five sequential setup stages completed in 45-90 minutes for standard deployments. First, enable Agent Builder in workspace settings (ChatGPT interface → Settings → Beta Features → Agent Builder), which provisions the agent management dashboard and action library infrastructure. Second, configure organizational policies including agent naming conventions, required approval workflows for production deployment, and data handling classifications (public, internal, confidential, restricted) that determine which knowledge sources and API integrations each agent tier can access.

Third, establish service account credentials for external system integration. Each connected service requires OAuth 2.0 app registration through the provider's developer console—for example, Salesforce integration demands creating a Connected App with API-enabled permissions, while GitHub requires Personal Access Token generation with repo and workflow scopes. Document these credentials in a secure vault (1Password, HashiCorp Vault, AWS Secrets Manager) with 90-day rotation policies and access limited to 2-3 designated workspace administrators. Fourth, upload foundational knowledge assets including product documentation (PDF/DOCX up to 50MB per file), internal wiki exports (Confluence/Notion JSON exports), and FAQ databases formatted as structured JSON or CSV with question-answer pairs for optimal retrieval accuracy.

Fifth, define testing and deployment workflows that separate development, staging, and production agent versions. Agent Builder lacks built-in environment management, requiring manual version control through naming conventions like "SupportAgent-dev", "SupportAgent-staging", "SupportAgent-prod" and duplication workflows for promoting configurations across environments. Teams using GitHub can implement configuration-as-code by exporting agent definitions as JSON (Agent Settings → Export Configuration) and committing to version control, enabling diff-based change reviews and rollback capabilities through JSON import procedures taking 3-5 minutes per agent restoration.
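
The diff-based review described above can be approximated with the Python standard library. This is only a minimal sketch: the export schema is not described here, so the field names (`name`, `instructions`, `actions`) and the sample values are hypothetical placeholders rather than the real export format.

```python
import difflib
import json


def config_diff(old: dict, new: dict) -> list[str]:
    """Return a unified diff between two agent configurations.

    Keys are sorted before serializing so that key reordering alone
    does not show up as a change.
    """
    old_lines = json.dumps(old, indent=2, sort_keys=True).splitlines()
    new_lines = json.dumps(new, indent=2, sort_keys=True).splitlines()
    return list(difflib.unified_diff(old_lines, new_lines, "old", "new", lineterm=""))


# Hypothetical exported definitions; a real export may use different fields.
prod = {"name": "SupportAgent-prod", "instructions": "Answer billing questions.", "actions": []}
staging = {"name": "SupportAgent-staging", "instructions": "Answer billing questions.", "actions": ["stripe"]}

for line in config_diff(prod, staging):
    print(line)
```

Sorting keys keeps the diff stable across exports, which makes review noise less likely when only the ordering of fields changes.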

Creating Your First Production Agent

Production agent development follows a four-phase methodology: requirements definition with measurable success criteria, core behavior configuration establishing personality and knowledge boundaries, action integration connecting external systems, and validation testing across representative user scenarios. This systematic approach reduces post-deployment iteration cycles by 55-65% compared to exploratory development, according to enterprise implementation analysis tracking 200+ agent deployments across 15 industries.

Requirements definition begins with identifying the primary user problem, acceptable accuracy thresholds, and fallback behaviors for uncertainty scenarios. A customer support agent specification might target: "Resolve 45% of billing inquiries without human escalation within 90 days, maintain 92% accuracy on policy explanations validated through quarterly audits, and route complex cases to appropriate specialist queues within 2 conversation turns." These quantifiable targets inform system prompt engineering, knowledge base curation, and action design decisions throughout development. Documentation includes user personas (5-8 representative archetypes), conversation flow diagrams mapping 10-15 common scenarios, and escalation criteria defining when human intervention becomes mandatory.

Core behavior configuration occurs through the system prompt (Instructions field), supporting 8,000 characters of directive text that establishes tone, domain boundaries, and response formatting rules. Effective production prompts follow a hierarchical structure: primary role definition (50-100 words), knowledge domain scope with explicit exclusions (150-200 words), response formatting requirements including JSON schemas for structured outputs (200-300 words), and fallback procedures for ambiguous queries (100-150 words). For example: "You are a billing support specialist for SaaS subscription management. You can answer questions about invoices, payment methods, subscription tiers (Starter/Professional/Enterprise), and refund policies. You CANNOT discuss product features, technical support issues, or account security—route these to appropriate teams. Always respond in JSON format: {answer: string, confidence: 0-100, escalate: boolean, category: string}. When confidence <70%, set escalate=true and provide 2-3 clarifying questions."
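
Whatever consumes the agent's output should probably validate the structured reply rather than trust it. The sketch below assumes the agent returns the four fields from the example prompt as a JSON object; the `AgentReply` class and `parse_reply` function are illustrative names, not part of any Agent Builder API.

```python
import json
from dataclasses import dataclass


@dataclass
class AgentReply:
    answer: str
    confidence: int
    escalate: bool
    category: str


def parse_reply(raw: str, threshold: int = 70) -> AgentReply:
    """Parse a reply in the schema from the example prompt and validate it."""
    data = json.loads(raw)
    reply = AgentReply(
        answer=str(data["answer"]),
        confidence=int(data["confidence"]),
        escalate=bool(data["escalate"]),
        category=str(data["category"]),
    )
    if not 0 <= reply.confidence <= 100:
        raise ValueError(f"confidence out of range: {reply.confidence}")
    # Enforce the prompt's rule in code as well: low confidence always
    # escalates, even if the model did not set the flag itself.
    if reply.confidence < threshold:
        reply.escalate = True
    return reply


reply = parse_reply(
    '{"answer": "Refunds take 5 days.", "confidence": 55, "escalate": false, "category": "refunds"}'
)
print(reply.escalate)
```

Re-applying the escalation threshold outside the prompt means a single malformed model response cannot silently skip the human handoff.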

Knowledge base optimization significantly impacts retrieval accuracy and response relevance. Agent Builder's vector search implementation supports 200 files totaling 500MB maximum, with individual file size limits of 50MB for documents and 10MB for structured data formats. Retrieval performance improves 40-60% when documents follow consistent formatting: hierarchical heading structure (H1→H2→H3), 200-500 word sections with descriptive headers, and bullet-point lists for enumerated information rather than paragraph text. Technical documentation benefits from explicit cross-references between related concepts, while FAQ databases should include 5-10 semantic variations of each question to capture user phrasing diversity.
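
A pre-upload check against the limits quoted above can catch oversized files before they reach the platform. This sketch makes two assumptions that the text does not settle: that "MB" means 1024 × 1024 bytes, and that JSON and CSV are the structured formats subject to the 10MB limit.

```python
import os

MB = 1024 * 1024  # assumption: binary megabytes
MAX_FILES = 200
MAX_TOTAL_BYTES = 500 * MB
DOCUMENT_LIMIT = 50 * MB
STRUCTURED_LIMIT = 10 * MB
# Assumption: these extensions are the "structured data formats".
STRUCTURED_EXTENSIONS = {".json", ".csv"}


def check_knowledge_base(sizes: dict[str, int]) -> list[str]:
    """Return readable problems for a {filename: size_in_bytes} mapping."""
    problems = []
    if len(sizes) > MAX_FILES:
        problems.append(f"{len(sizes)} files exceeds the {MAX_FILES}-file limit")
    if sum(sizes.values()) > MAX_TOTAL_BYTES:
        problems.append("total size exceeds 500MB")
    for name, size in sizes.items():
        extension = os.path.splitext(name)[1].lower()
        limit = STRUCTURED_LIMIT if extension in STRUCTURED_EXTENSIONS else DOCUMENT_LIMIT
        if size > limit:
            problems.append(f"{name} exceeds its {limit // MB}MB limit")
    return problems


print(check_knowledge_base({"guide.pdf": 40 * MB, "faq.csv": 12 * MB}))
```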

Action integration connects agents to external systems through REST APIs, GraphQL endpoints, or MCP protocol implementations. Each action requires three configuration components: authentication credentials (OAuth tokens or API keys), endpoint specification with HTTP method and parameter mapping, and response handling logic defining how external data integrates into conversation context. Pre-built actions for services like Salesforce, GitHub, and Stripe reduce configuration time from 45-90 minutes to 5-10 minutes through validated templates, while custom actions demand OpenAPI 3.0 schema definition documenting request/response structures, authentication flows, and error code mappings.

Production action design emphasizes defensive error handling and graceful degradation. Implement timeout configurations of 8-10 seconds for external API calls to prevent conversation blocking, with retry policies executing 2-3 attempts at exponential backoff intervals (2s, 4s, 8s) before triggering fallback responses. Action chains combine multiple API calls through conditional logic. For example, a support agent might: "Query customer database for account status → If active, fetch recent tickets from helpdesk API → If <5 tickets, provide direct answer → Else escalate to senior support." Keep such branching workflows to 5-8 decision points at most; beyond that, complexity degrades maintainability and enlarges the failure surface. Testing procedures validate each action path using synthetic data across 20-30 representative scenarios, measuring success rates, response times, and error recovery behaviors.
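
For custom middleware that sits in front of an external API, the retry-then-fallback policy can be sketched as follows. It assumes one initial attempt followed by retries after 2s, 4s, and 8s, and that the `action` callable enforces its own 8-10 second timeout (for instance through an HTTP client setting); `call_with_retry` is an illustrative helper, not a platform feature.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    action: Callable[[], T],
    fallback: Callable[[], T],
    delays: tuple[float, ...] = (2.0, 4.0, 8.0),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `action`; on failure wait and retry, then use `fallback`."""
    try:
        return action()
    except Exception:
        pass  # first attempt failed; move on to the backoff schedule
    for delay in delays:
        sleep(delay)
        try:
            return action()
        except Exception:
            continue
    # Every attempt failed: degrade gracefully instead of raising.
    return fallback()
```

Passing `sleep` as a parameter keeps the function testable without real waiting, for example `call_with_retry(fetch, lambda: "Please try again later.", sleep=lambda s: None)` where `fetch` is any zero-argument callable.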

Production Deployment Architecture

Production-grade Agent Builder deployments require architectural considerations beyond agent configuration, including traffic routing strategies, monitoring infrastructure, incident response protocols, and capacity planning frameworks. Enterprise implementations serving 1,000-100,000 daily interactions implement three-tier deployment patterns: edge agents handling high-frequency simple queries with cached responses, mid-tier agents performing moderate complexity workflows with 3-8 action chains, and specialist agents tackling complex scenarios requiring 10+ API integrations and custom logic. This tiered approach optimizes resource allocation, with 60-70% of queries resolved by edge agents consuming 20-30% of total compute budget, leaving premium capacity for scenarios demanding advanced reasoning.

Traffic routing mechanisms distribute user queries across agent tiers through rule-based classification or ML-powered intent prediction. Rule-based systems use keyword matching and conversation metadata (message length, question complexity, user role) to route requests, achieving 75-85% correct classification with manual rule maintenance consuming 4-8 hours monthly. ML-powered routers analyze conversation embeddings through neural classifiers trained on 10,000-50,000 historical interactions, improving accuracy to 88-94% while reducing maintenance to quarterly model retraining cycles. Hybrid approaches combine rule-based overrides for high-confidence scenarios (billing queries containing invoice numbers, technical questions from verified engineers) with ML classification for ambiguous requests, achieving 90-96% accuracy across production deployments.
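
The hybrid pattern, rule-based overrides first and a classifier for everything else, might look like the sketch below. The invoice number format, the `verified_engineer` role label, and the tier names are all hypothetical, and the classifier is passed in as a plain callable so that any model can stand behind it.

```python
import re
from typing import Callable, Optional

# Hypothetical invoice format such as "INV-20417".
INVOICE_PATTERN = re.compile(r"\bINV-\d{4,}\b", re.IGNORECASE)


def rule_route(message: str, user_role: str) -> Optional[str]:
    """Return a destination for high-confidence cases, else None."""
    if INVOICE_PATTERN.search(message):
        return "billing"
    # Simplification: every message from a verified engineer is
    # treated as a technical question.
    if user_role == "verified_engineer":
        return "technical"
    return None


def route(message: str, user_role: str, classifier: Callable[[str], str]) -> str:
    """Apply rule overrides first; fall back to the ML classifier."""
    return rule_route(message, user_role) or classifier(message)


# Stub classifier standing in for a trained intent model.
print(route("Question about INV-20417", "customer", lambda m: "general"))
```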

Monitoring infrastructure tracks four critical metric categories: conversation quality (resolution rate, escalation frequency, user satisfaction), system performance (response latency, action success rate, timeout frequency), cost efficiency (tokens per interaction, API call count, quota utilization), and security compliance (authentication failures, data access patterns, policy violations). Agent Builder provides built-in analytics displaying 30-90 day historical trends, but production deployments benefit from exporting conversation logs to dedicated analytics platforms (Datadog, New Relic, custom Elasticsearch clusters) for correlation analysis across 6-12 month periods. Key performance indicators include: median resolution time trending below 3 minutes, escalation rate declining 5-10% monthly during initial deployment, and cost-per-resolution decreasing 15-25% quarterly as knowledge base optimization improves retrieval accuracy.

Incident response protocols define escalation paths for three failure categories: partial degradation (single action failures, elevated latency, reduced accuracy), service outages (complete agent unavailability, authentication system failures), and security incidents (credential leaks, unauthorized access attempts, compliance violations). Partial degradation triggers automated fallback to human support queues within 30 seconds of detecting 3 consecutive failures, while service outages activate redundancy protocols routing traffic to backup agent configurations maintained in separate workspaces with replicated credentials and knowledge bases. Security incidents initiate immediate credential rotation (15-30 minute completion) and forensic log analysis identifying affected conversation IDs for GDPR-compliant user notification within 72 hours of incident detection.

Capacity planning addresses execution quota management across subscription tiers. Team tier users receive 200 daily executions per seat, translating to 5,000 daily interactions for 25-user deployments or 40,000 for 200-user enterprise contracts. Monitoring execution consumption patterns reveals 70-80% of quota usage occurs during business hours (9am-6pm local time), creating headroom for traffic spike absorption during product launches, marketing campaigns, or seasonal demand fluctuations. Organizations exceeding quota limits face temporary rate limiting (5-10 minute delays) rather than hard failures, but persistent overages trigger Enterprise tier migration discussions focused on custom quota allocation ranging from 500-5,000 daily executions per user. Capacity forecasting uses 90-day rolling averages with 20-30% growth buffers, projecting subscription tier transitions 60-90 days before quota exhaustion to ensure seamless scaling without service degradation.
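
The quota arithmetic and the buffered forecast can be written down in a few lines. This sketch assumes a flat per-seat daily quota multiplied by seat count and a simple mean over the most recent 90 days; the function names and the 25% default buffer (the midpoint of the 20-30% range) are illustrative choices.

```python
def daily_quota(seats: int, per_seat: int = 200) -> int:
    """Total daily executions available to a workspace."""
    return seats * per_seat


def needs_upgrade(daily_usage: list[int], seats: int, growth_buffer: float = 0.25) -> bool:
    """True if the buffered 90-day average usage exceeds the daily quota."""
    window = daily_usage[-90:]  # most recent 90 days at most
    average = sum(window) / len(window)
    return average * (1 + growth_buffer) > daily_quota(seats)


print(daily_quota(25))                    # 25 seats x 200 executions
print(needs_upgrade([4200] * 90, seats=25))
```

With 25 seats the quota is 5,000 executions per day, so a steady 4,200 daily executions already crosses the threshold once the 25% buffer is applied (5,250 > 5,000).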

Security and Reliability Hardening

Production agents handling customer queries require 99.9% uptime to maintain service quality. When your Agent Builder integrates with external APIs for data retrieval or action execution, single-point failures can cascade into complete workflow breakdowns. A database lookup timeout shouldn't disable your entire customer support agent.

Multi-node routing architecture solves this reliability challenge by distributing API calls across redundant endpoints with automatic health monitoring. If one provider experiences degradation, traffic automatically routes to healthy alternatives without manual intervention.

Services like laozhang.ai implement enterprise-grade multi-node routing with 99.9% uptime SLA. Their infrastructure monitors 12 API endpoints simultaneously, providing automatic failover within 200ms of detecting issues. For production agents requiring consistent availability, this architecture ensures your Agent Builder workflows remain operational even during partial outages.

Beyond routing, implement these reliability patterns: circuit breakers failing fast on repeated errors (3 consecutive failures trigger 60-second cooldown), timeout policies enforcing 5-10 second maximums for API calls preventing indefinite blocking, and fallback responses providing graceful degradation when services become unavailable. For example, a support agent unable to fetch account data might respond: "I'm experiencing temporary difficulty accessing your account details. While I resolve this, I can help with general billing questions or schedule a callback with our support team."
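
A circuit breaker with the thresholds mentioned above (3 consecutive failures, 60-second cooldown) can be sketched as a small class. This is one simple variant under stated assumptions: it closes fully once the cooldown elapses rather than using a half-open probe state, and the injectable `clock` exists only to make it testable.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    def __init__(
        self,
        max_failures: int = 3,
        cooldown: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        """Return True if a call may proceed right now."""
        if self.opened_at is None:
            return True
        if self.clock() - self.opened_at >= self.cooldown:
            # Cooldown elapsed: close the breaker and reset the count.
            self.opened_at = None
            self.failures = 0
            return True
        return False

    def record(self, success: bool) -> None:
        """Record the outcome of a call; open after repeated failures."""
        if success:
            self.failures = 0  # only consecutive failures count
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()
```

A caller would check `breaker.allow()` before each external request, send the fallback response when it returns `False`, and report the outcome through `breaker.record(...)` otherwise.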

| Security Control | Implementation | Validation Frequency | Compliance Framework |
|---|---|---|---|
| Credential Rotation | 90-day OAuth token refresh | Automated monitoring | SOC 2 Type II |
| Access Logging | All API calls logged with user ID | Real-time streaming | GDPR Article 30 |
| Data Encryption | AES-256 at rest, TLS 1.3 in transit | Annual penetration testing | ISO 27001 |
| Role-Based Access | Workspace admin/editor/viewer tiers | Quarterly access reviews | NIST 800-53 |
| API Rate Limiting | 100 requests/minute per user | Continuous monitoring | N/A (availability) |
| Incident Response | 15-minute credential rotation SLA | Monthly drills | SOC 2 CC7.3 |

Authentication security extends beyond credential management to include conversation-level access controls. Agents handling sensitive data implement user verification workflows requiring multi-factor authentication before exposing protected information—for example, financial agents might request last-4 SSN digits or account verification codes before discussing balance details. Agent Builder supports this pattern through conditional action logic: "If query contains account_balance keyword AND user_verified=false, execute SMS verification action before proceeding to account lookup." This verification state persists across conversation turns through session context, eliminating repeated authentication friction while maintaining security boundaries.

Data handling policies govern how agents process, store, and transmit user information throughout conversation lifecycles. Implement data classification tagging (public, internal, confidential, restricted) at the knowledge base document level, with system prompts explicitly instructing agents to handle each classification tier according to regulatory requirements. Confidential data requires redaction in conversation logs (credit card numbers replaced with XXXX-XXXX-XXXX-1234 format), while restricted information triggers mandatory encryption and geographic storage restrictions. Agent Builder's built-in logging exports support custom preprocessing pipelines implementing automated PII detection and redaction before data reaches long-term storage systems, reducing compliance risk during audit procedures.
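
A preprocessing step for the card-number redaction format shown above could start from a regular expression like this one. It is deliberately narrow: it only matches 16-digit numbers written in groups of four, and a production PII pipeline would need broader detection (other card lengths, checksum validation, other identifier types).

```python
import re

# 16 digits in groups of four, optionally separated by spaces or hyphens.
# The final group is captured so the last four digits can be kept.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}(\d{4})\b")


def redact_cards(text: str) -> str:
    """Mask card numbers, keeping only the last four digits."""
    return CARD_PATTERN.sub(r"XXXX-XXXX-XXXX-\1", text)


print(redact_cards("Card 4111 1111 1111 1234 was charged"))
# prints: Card XXXX-XXXX-XXXX-1234 was charged
```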

Performance Optimization and Monitoring

Response latency directly impacts user satisfaction and agent effectiveness, with research indicating 80% of users abandon conversations exceeding 8-10 second wait times. Agent Builder's managed runtime introduces baseline latency of 1.8-2.4 seconds for simple knowledge base queries without external actions, rising to 4.5-6.8 seconds for workflows involving 3-5 API calls. Optimization strategies target three latency components: knowledge base retrieval efficiency (30-40% of total time), action execution duration (45-55%), and conversation state management overhead (5-10%).

Knowledge base retrieval optimization begins with document structure engineering. Semantic search performance improves 35-50% when documents maintain consistent heading hierarchies (H1 for topics, H2 for subtopics, H3 for details) and 200-400 word section lengths avoiding both fragmentation and excessive density. Technical documentation benefits from explicit term definitions at first use and cross-reference links connecting related concepts, while FAQ databases should include 5-8 question variations covering phrasing diversity: "How do I reset password?" should appear alongside "Forgot password help", "Password recovery steps", "Reset account access", etc. Retrieval latency decreases 25-35% when total knowledge base size remains under 300MB across 150 files, compared to maximum capacity configurations approaching 500MB/200 files.

| Workflow Type | Target Latency | Typical Range | Optimization Priority |
|---|---|---|---|
| Knowledge-only query | <2.5s | 1.8-3.2s | Document structure |
| Single API call | <4.0s | 3.5-5.5s | Endpoint performance |
| 3-5 action chain | <7.0s | 5.5-8.5s | Parallel execution |
| Complex conditional | <10.0s | 8.0-12.0s | Logic simplification |
| User verification flow | <6.0s | 5.0-8.0s | MFA provider latency |

Action execution optimization focuses on reducing external API call duration through caching strategies and parallel execution patterns. Implement response caching for stable data with 5-60 minute TTLs (time-to-live): product catalog information, policy documents, and help center articles rarely change within conversation timeframes and benefit from cached responses reducing API calls by 60-75%. Agent Builder supports basic caching through action configuration, but production implementations benefit from external caching layers (Redis, Memcached) managed through custom action middleware reducing round-trip latency from 800-1,200ms to 50-150ms for cached content. Parallel action execution transforms sequential workflows: instead of "Fetch user data (1.2s) → Query order history (1.5s) → Check support tickets (1.3s)" taking 4.0 seconds, parallel requests complete in 1.5 seconds (longest individual call duration), improving total response time by 60-70%.
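
The parallel-execution effect is easy to reproduce with `asyncio.gather` in custom middleware. In this sketch the three calls are simulated with `asyncio.sleep`, the durations mirror the example above, and a `scale` factor (an addition for convenience) shrinks them so the script finishes quickly.

```python
import asyncio
import time


async def fake_call(name: str, seconds: float) -> str:
    """Stand-in for an external API call; sleeps instead of doing I/O."""
    await asyncio.sleep(seconds)
    return name


async def fetch_all(scale: float = 1.0) -> list[str]:
    # All three coroutines run concurrently, so total time is close to
    # the slowest call rather than the sum of all three.
    return await asyncio.gather(
        fake_call("user_data", 1.2 * scale),
        fake_call("order_history", 1.5 * scale),
        fake_call("support_tickets", 1.3 * scale),
    )


start = time.perf_counter()
results = asyncio.run(fetch_all(scale=0.1))
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

`asyncio.gather` returns results in the order the awaitables were passed, regardless of which call finishes first, so downstream code can rely on positional results.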

Conversation state management overhead accumulates as interaction depth increases, with 10+ turn conversations experiencing 15-25% latency degradation compared to initial queries. Agent Builder maintains full conversation context including previous queries, action results, and generated responses within the 50,000 token context window, but optimal performance requires periodic context pruning. Implement context windowing strategies retaining only the most recent 5-8 conversation turns plus critical metadata (user verification status, selected options, ongoing workflow state), reducing context payload from 8,000-12,000 tokens to 2,000-4,000 tokens and improving response generation speed by 20-30%. System prompts should explicitly instruct agents when to summarize and compress older context: "After 10 conversation turns, generate a brief summary of key points discussed and continue with compressed context."
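
Where conversation history is managed outside the platform, the windowing strategy reduces to keeping a tail of recent turns plus the metadata. The `Conversation` structure below is a hypothetical representation chosen for illustration, and the default of six turns is simply a value inside the 5-8 range.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    turns: list[str] = field(default_factory=list)
    metadata: dict[str, object] = field(default_factory=dict)


def prune(conversation: Conversation, keep_last: int = 6) -> Conversation:
    """Keep the most recent turns and all metadata; drop older turns."""
    if keep_last <= 0:
        raise ValueError("keep_last must be positive")
    return Conversation(
        turns=conversation.turns[-keep_last:],
        metadata=dict(conversation.metadata),
    )


history = Conversation(
    turns=[f"turn {i}" for i in range(1, 13)],
    metadata={"user_verified": True},
)
pruned = prune(history)
print(len(pruned.turns), pruned.turns[0], pruned.metadata)
```

Metadata such as verification status is copied in full because losing it would force the user to re-authenticate mid-conversation.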

Performance monitoring establishes baseline metrics during initial deployment and tracks regression or improvement trends across 30-90 day periods. Key metrics include P50, P95, and P99 latency percentiles (median, 95th, 99th percentile response times), action success rates exceeding 95% for production stability, token consumption per conversation averaging 800-1,500 tokens for knowledge queries and 2,000-4,000 tokens for complex workflows, and escalation rates declining 10-15% monthly as knowledge base refinement improves coverage. Alert thresholds trigger notifications when P95 latency exceeds target by 20%, action failure rate surpasses 8-10% across 100 consecutive requests, or escalation rate increases 15% week-over-week indicating degraded agent effectiveness requiring intervention.
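
Percentile tracking and the P95 alert rule can be computed from exported latency samples with the standard library. This sketch uses `statistics.quantiles` with the inclusive method; other percentile definitions give slightly different values on small samples, and the function names are illustrative.

```python
import statistics


def latency_percentiles(samples: list[float]) -> dict[str, float]:
    """Return P50, P95, and P99 from a list of latency samples."""
    # n=100 yields 99 cut points; index i-1 holds the i-th percentile.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def p95_alert(samples: list[float], target: float, tolerance: float = 0.20) -> bool:
    """True when P95 latency exceeds the target by more than `tolerance`."""
    return latency_percentiles(samples)["p95"] > target * (1 + tolerance)


# Synthetic samples: 1.0, 2.0, ..., 101.0 (arbitrary units).
samples = [float(x) for x in range(1, 102)]
print(latency_percentiles(samples))
```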

Cost Analysis and ROI Calculation

Agent Builder's subscription-based pricing model creates predictable cost structures distinct from usage-based API alternatives. Total cost of ownership (TCO) analysis requires evaluation across four dimensions: subscription fees, development resources, maintenance overhead, and opportunity costs from deployment delays. A 25-user Team tier deployment costs $625 monthly ($7,500 annually) and supports 5,000 daily agent interactions. An equivalent Assistants API implementation processing 10,000 conversations monthly at an average of 1,500 tokens consumes 15 million tokens ($1,800-$2,250 monthly at $0.12 per 1K tokens), plus $15,000-$25,000 in development costs amortized across 12-18 months. Break-even analysis reveals Agent Builder maintaining a cost advantage for organizations processing under 500,000 monthly interactions with existing ChatGPT subscriptions, while API implementations achieve 25-40% lower per-interaction costs beyond 1 million monthly queries through usage optimization and model selection strategies.

| Cost Component | Agent Builder (25 users) | Assistants API | Savings/Premium |
|---|---|---|---|
| Monthly Subscription | $625 | $0 | +$625 |
| API Usage (10K queries) | Included | $1,800-$2,250 | -$1,175 to -$1,625 |
| Initial Development | $5,000-$8,000 | $15,000-$25,000 | -$10,000 to -$17,000 |
| Monthly Maintenance | 4-8 hours ($200-$400) | 12-20 hours ($600-$1,000) | -$400 to -$600 |
| Infrastructure/Hosting | Managed by OpenAI | $200-$500 | -$200 to -$500 |
| Total Year 1 | $17,900-$21,300 | $35,200-$47,500 | -$17,300 to -$26,200 |
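The monthly subscription and API usage figures above follow from simple arithmetic, sketched here for readers who want to plug in their own volumes. The prices ($25 per Team user, $0.12 per 1K tokens) are the ones quoted in this section and are treated as inputs, not as verified current pricing; the function names are hypothetical.

```python
def team_subscription_monthly(users: int, price_per_user: float = 25.0) -> float:
    """Flat monthly subscription cost for a Team tier deployment."""
    return users * price_per_user


def api_usage_monthly(
    conversations: int, avg_tokens: int, price_per_1k_tokens: float = 0.12
) -> float:
    """Usage-based monthly cost: total tokens priced per thousand."""
    total_tokens = conversations * avg_tokens
    return total_tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    subscription = team_subscription_monthly(25)      # 25 users x $25
    usage = api_usage_monthly(10_000, 1_500)          # 15 million tokens
    print(f"subscription: ${subscription:,.0f}/month")
    print(f"API usage:    ${usage:,.0f}/month")
    print(f"difference:   ${subscription - usage:,.0f}/month")
```

With these inputs the difference is the -$1,175 lower bound shown in the API Usage row.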

Enterprise agents often need to balance response quality with operational costs. Routing all queries to GPT-4 provides excellent results but costs roughly 10× more per query than GPT-3.5 for simple tasks. Manual model selection adds development complexity and requires continuous optimization as query patterns evolve.

Intelligent model routing automates this tradeoff by analyzing query complexity before execution. Simple FAQs route to cost-efficient models while complex reasoning tasks leverage premium capabilities. This adaptive approach reduces costs by 30-40% compared to fixed-model architectures without sacrificing quality for challenging queries.

API platforms like laozhang.ai provide built-in intelligent routing across multiple models (GPT-3.5, GPT-4, Claude 3, and others) with automatic complexity detection. Their system analyzes query patterns and routes requests to the optimal model-price combination, achieving up to 40% cost reduction while maintaining quality benchmarks. The unified API eliminates integration overhead for multi-model orchestration.

Implementation considerations for cost optimization include: token usage monitoring, which tracks per-agent consumption patterns to identify optimization opportunities; query classification, which sorts requests into complexity tiers (simple, moderate, complex) to guide routing decisions; A/B testing, which validates that quality does not degrade when cheaper models handle specific use cases; and budget alerts, which notify teams before limits are exceeded so capacity plans can be adjusted.
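Query classification and routing can be prototyped with a simple heuristic before investing in anything more sophisticated. The sketch below is purely illustrative: the keyword list, the word-count thresholds, and the model labels (`economy-model`, `standard-model`, `premium-model`) are all hypothetical placeholders, and a production router would need to be validated with the A/B testing mentioned above.

```python
# Hypothetical signals that a query needs multi-step reasoning.
COMPLEX_HINTS = ("compare", "analyze", "why", "step by step", "troubleshoot")

# Hypothetical model labels; substitute the models available to your account.
ROUTES = {
    "simple": "economy-model",
    "moderate": "standard-model",
    "complex": "premium-model",
}


def classify_query(query: str) -> str:
    """Assign a complexity tier from keyword hits and query length."""
    text = query.lower()
    word_count = len(text.split())
    hits = sum(hint in text for hint in COMPLEX_HINTS)
    if hits >= 2 or word_count > 60:
        return "complex"
    if hits == 1 or word_count > 20:
        return "moderate"
    return "simple"


def route(query: str) -> str:
    """Return the model label for the query's complexity tier."""
    return ROUTES[classify_query(query)]


if __name__ == "__main__":
    for q in (
        "What are your opening hours?",
        "Why was my order delayed?",
        "Compare plan A and plan B and analyze which is cheaper",
    ):
        print(f"{classify_query(q):8s} -> {route(q)}: {q}")
```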

ROI calculation frameworks measure agent value creation through three quantifiable dimensions: cost avoidance from reduced human support workload, revenue generation from improved conversion rates or upsell automation, and efficiency gains from accelerated internal workflows. An organization handling 1,000 monthly support requests at $15-$20 per human-handled ticket spends $180,000-$240,000 annually on tickets, so a customer support agent resolving 40-50% of queries without escalation avoids roughly $72,000-$120,000 of that cost per year. Sales enablement agents increasing qualified lead identification efficiency by 25-35% translate to $90,000-$150,000 additional pipeline value for teams generating $500,000 monthly in qualified opportunities. Internal knowledge agents reducing information retrieval time from 12 minutes to 90 seconds save 950 hours annually for 100-employee organizations making 10 knowledge queries daily, equivalent to $47,500-$71,250 at a $50-$75 average loaded hourly labor cost.
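Two of these dimensions are straightforward to compute from the inputs given in this section. The following sketch uses hypothetical function names; the deflection rate is the share of tickets the agent resolves without escalation, and the labor value simply multiplies hours saved by a loaded hourly rate.

```python
def support_cost_avoidance(
    monthly_tickets: int, cost_per_ticket: float, deflection_rate: float
) -> float:
    """Annual cost avoided when the agent resolves a share of tickets itself."""
    annual_ticket_cost = monthly_tickets * 12 * cost_per_ticket
    return annual_ticket_cost * deflection_rate


def labor_value(hours_saved: float, loaded_hourly_rate: float) -> float:
    """Dollar value of hours saved at a loaded hourly labor rate."""
    return hours_saved * loaded_hourly_rate


if __name__ == "__main__":
    low = support_cost_avoidance(1_000, 15.0, 0.40)
    high = support_cost_avoidance(1_000, 20.0, 0.50)
    print(f"support cost avoidance: ${low:,.0f} to ${high:,.0f} per year")
    print(f"knowledge agent value:  ${labor_value(950, 50):,.0f} to ${labor_value(950, 75):,.0f} per year")
```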

Payback period analysis for typical Agent Builder deployments ranges from 3 to 7 months depending on use case complexity and organizational scale. Simple FAQ agents serving 500-2,000 daily queries achieve payback within 90-120 days through immediate support cost reduction, while complex sales agents requiring 6-8 weeks of development and training reach positive ROI in 180-210 days, after optimization cycles improve conversion metrics. Multiyear TCO projections favor Agent Builder for mid-market organizations maintaining stable query volumes under 1 million monthly, with 3-year costs averaging $55,000-$65,000 compared to API implementations reaching $120,000-$180,000 when factoring in development, maintenance, and infrastructure expenses across the same period.
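A payback estimate of this kind divides the upfront investment by the net monthly benefit. The sketch below is a simplified model with a hypothetical function name and illustrative inputs (a $6,500 build, $925 in monthly subscription and maintenance, and an assumed $3,000 monthly benefit); it ignores ramp-up time, so real deployments will usually take longer than the raw figure suggests.

```python
from typing import Optional


def payback_months(
    upfront_cost: float, monthly_benefit: float, monthly_running_cost: float
) -> Optional[float]:
    """Months until cumulative net benefit covers the upfront cost.

    Returns None when running costs meet or exceed the benefit,
    because the investment is then never recovered.
    """
    net_monthly = monthly_benefit - monthly_running_cost
    if net_monthly <= 0:
        return None
    return upfront_cost / net_monthly


if __name__ == "__main__":
    months = payback_months(upfront_cost=6_500, monthly_benefit=3_000, monthly_running_cost=925)
    print(f"payback in about {months:.1f} months")
```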

Agent Builder vs Alternatives

The AI agent development landscape encompasses diverse platforms optimizing for different deployment priorities: rapid prototyping, maximum customization, cost efficiency, or enterprise integration depth. Agent Builder occupies the managed complexity tier, balancing visual configuration accessibility with production-grade capabilities, but competitive positioning requires evaluating 6+ alternative approaches across technical, operational, and strategic dimensions.

GPTs represent the simplest alternative, requiring no code or API integration knowledge and deployable in 5-10 minutes through conversation-style configuration. However, GPTs lack action orchestration beyond basic webhook calls, limit context memory to 20,000 tokens versus Agent Builder's 50,000, and provide minimal analytics beyond conversation counts. Organizations requiring simple FAQ bots, document Q&A systems, or personal productivity assistants find GPTs sufficient, achieving 80-85% of Agent Builder's value at no incremental cost beyond a ChatGPT Plus subscription. Conversely, production workflows demanding conditional logic, multi-step API orchestration, or persistent state management necessitate Agent Builder's advanced capabilities despite 3-4× longer implementation timelines.

| Platform | Setup Time | Cost | Max Actions | Context Memory | Best For | Key Limitation |
|---|---|---|---|---|---|---|
| Agent Builder | 15-30 min | $625/month (25 users) | 45+ pre-built | 50,000 tokens | Mid-market production | Limited customization depth |
| GPTs | 5-10 min | $20/month (Plus) | 2-3 webhooks | 20,000 tokens | Simple FAQ/docs | No conditional logic |
| Assistants API | 40-80 hours | $0.12/1K tokens | Unlimited custom | Unlimited | High-scale enterprise | High dev complexity |
| LangChain | 60-120 hours | Infrastructure only | Unlimited | Developer-managed | Custom architectures | Steep learning curve |
| Microsoft Copilot Studio | 20-40 hours | $200/month base | 100+ connectors | 30,000 tokens | Microsoft 365 orgs | Vendor lock-in |
| Amazon Lex | 30-60 hours | Pay-per-request | AWS services | 100,000 chars | Voice/chat hybrid | AWS ecosystem only |
| Rasa | 80-160 hours | Self-hosted costs | Unlimited | Custom ML models | On-premise enterprise | DevOps overhead |

OpenAI's Assistants API provides maximum flexibility for organizations with dedicated engineering teams, supporting unlimited custom actions, arbitrary code execution through function calling, and sophisticated state management across multi-session workflows. Development complexity increases proportionally: a full initial implementation, including authentication infrastructure, error handling frameworks, and monitoring pipelines, averages 60-100 hours. API-based approaches excel when agents require sub-500ms response latency through optimized caching and connection pooling, process 1+ million monthly queries that benefit from per-token pricing efficiency, or integrate with legacy systems through custom protocols beyond Agent Builder's REST/GraphQL support. However, the operational burden includes dependency management, runtime version upgrades, infrastructure monitoring, and security patching, which together consume 15-25 hours monthly compared to Agent Builder's 4-8 hour maintenance requirement.

Open-source frameworks like LangChain and Rasa offer complete architectural control, enabling multi-model orchestration across OpenAI, Anthropic, Google, and self-hosted LLMs with custom routing logic, retrieval-augmented generation (RAG) pipelines optimized for specific domains, and on-premise deployment satisfying air-gapped security requirements. These frameworks demand significant ML/NLP expertise: typical implementations require 80-160 development hours plus 20-40 monthly maintenance hours for model fine-tuning, performance optimization, and infrastructure management. Organizations with stringent data residency requirements that prevent cloud API usage, unique domain knowledge requiring custom embedding models, or cost sensitivity at 10+ million monthly queries find open-source approaches economically viable despite the operational complexity. Development teams with fewer than 5 engineers, or without ML specialization, see 40-60% longer iteration cycles and higher bug rates than they would on managed platforms.

Microsoft Copilot Studio and Amazon Lex represent vertical integration plays optimizing for specific ecosystems. Copilot Studio provides native Microsoft 365 integration, Azure AD authentication, and Power Platform connectivity, reducing implementation time by 50-65% for organizations standardized on Microsoft infrastructure. Amazon Lex offers voice-first capabilities through integration with Amazon Connect and Alexa, supporting 8+ languages with automatic speech recognition and natural language understanding tuned for telephony environments. These platform-specific solutions excel within their ecosystems but create vendor lock-in and migration challenges—organizations switching from Microsoft to Google Workspace or AWS to Azure face 200-400 hour migration projects rebuilding agent configurations and integrations from scratch.

Strategic platform selection depends on four decision factors weighted by organizational context: time-to-production urgency (immediate launch requirements favor managed platforms), technical team capability (in-house ML expertise enables open-source approaches), scale trajectory (anticipated growth from 10K to 10M queries influences architecture), and integration complexity (legacy system connectivity demands custom protocol support). Agent Builder optimizes for organizations requiring production deployment within 4-8 weeks, staffed with 3-10 general developers without specialized AI expertise, targeting stable query volumes under 1 million monthly, and integrating primarily with modern SaaS APIs supporting standard REST/OAuth protocols.

Migration paths between platforms vary significantly in effort and risk. Transitioning from GPTs to Agent Builder requires 8-15 hours reconfiguring system prompts and adding action integrations, while preserving conversation flows and knowledge bases through direct export/import. Moving from Agent Builder to Assistants API demands 40-80 hours reimplementing action logic in code, establishing infrastructure, and migrating authentication, but enables unlimited customization for scaling beyond Agent Builder's constraints. Organizations should design agents with portability considerations: maintaining system prompt documentation external to platform interfaces, structuring action definitions following OpenAPI standards, and implementing abstraction layers isolating business logic from platform-specific features. This architectural discipline reduces migration costs by 50-70% when strategic pivots necessitate platform transitions, preserving intellectual property investments across 12-24 month agent development cycles.
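The abstraction-layer advice can be made concrete with a thin interface between business logic and the platform. The sketch below is one possible shape, not a prescribed design: `AgentBackend`, `InMemoryBackend`, `order_status_reply`, and the `get_order_status` action name are all hypothetical, and a real adapter would wrap whichever platform actually executes the action.

```python
from typing import Protocol


class AgentBackend(Protocol):
    """Platform-neutral contract: business logic depends only on this."""

    def run_action(self, name: str, payload: dict) -> dict: ...


class InMemoryBackend:
    """Hypothetical adapter used for tests; a real one would call the platform."""

    def __init__(self, orders: dict[str, str]) -> None:
        self.orders = orders

    def run_action(self, name: str, payload: dict) -> dict:
        if name == "get_order_status":
            return {"status": self.orders.get(payload["order_id"], "unknown")}
        raise ValueError(f"unsupported action: {name}")


def order_status_reply(backend: AgentBackend, order_id: str) -> str:
    # Business logic: unchanged when the backend is swapped for another platform.
    result = backend.run_action("get_order_status", {"order_id": order_id})
    return f"Order {order_id} is {result['status']}."


if __name__ == "__main__":
    backend = InMemoryBackend({"A100": "shipped"})
    print(order_status_reply(backend, "A100"))
    print(order_status_reply(backend, "B200"))
```

Migrating platforms then means writing one new adapter class rather than touching every function that needs an action result.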

ChatGPT Agent Builder successfully democratizes production AI agent development for mid-market organizations, reducing implementation barriers from 60-120 hours of specialized engineering work to 15-40 hours of visual configuration accessible to general development teams. The platform's subscription-based economics, managed infrastructure, and curated action library create clear value for deployments processing 10,000-500,000 monthly interactions across customer support, sales enablement, and internal knowledge use cases. Organizations reaching Agent Builder's architectural limits—requiring sub-500ms latency, processing 1+ million monthly queries, or demanding custom ML model integration—find natural migration paths to Assistants API or open-source frameworks, preserving core agent logic through standardized configuration exports. Success with Agent Builder ultimately depends on aligning platform capabilities with organizational requirements: rapid time-to-production, manageable operational overhead, and integration patterns matching pre-built action libraries enable 3-7 month ROI payback periods for properly scoped deployments.