Claude Subagents: The Complete Guide to Multi-Agent AI Systems in July 2025

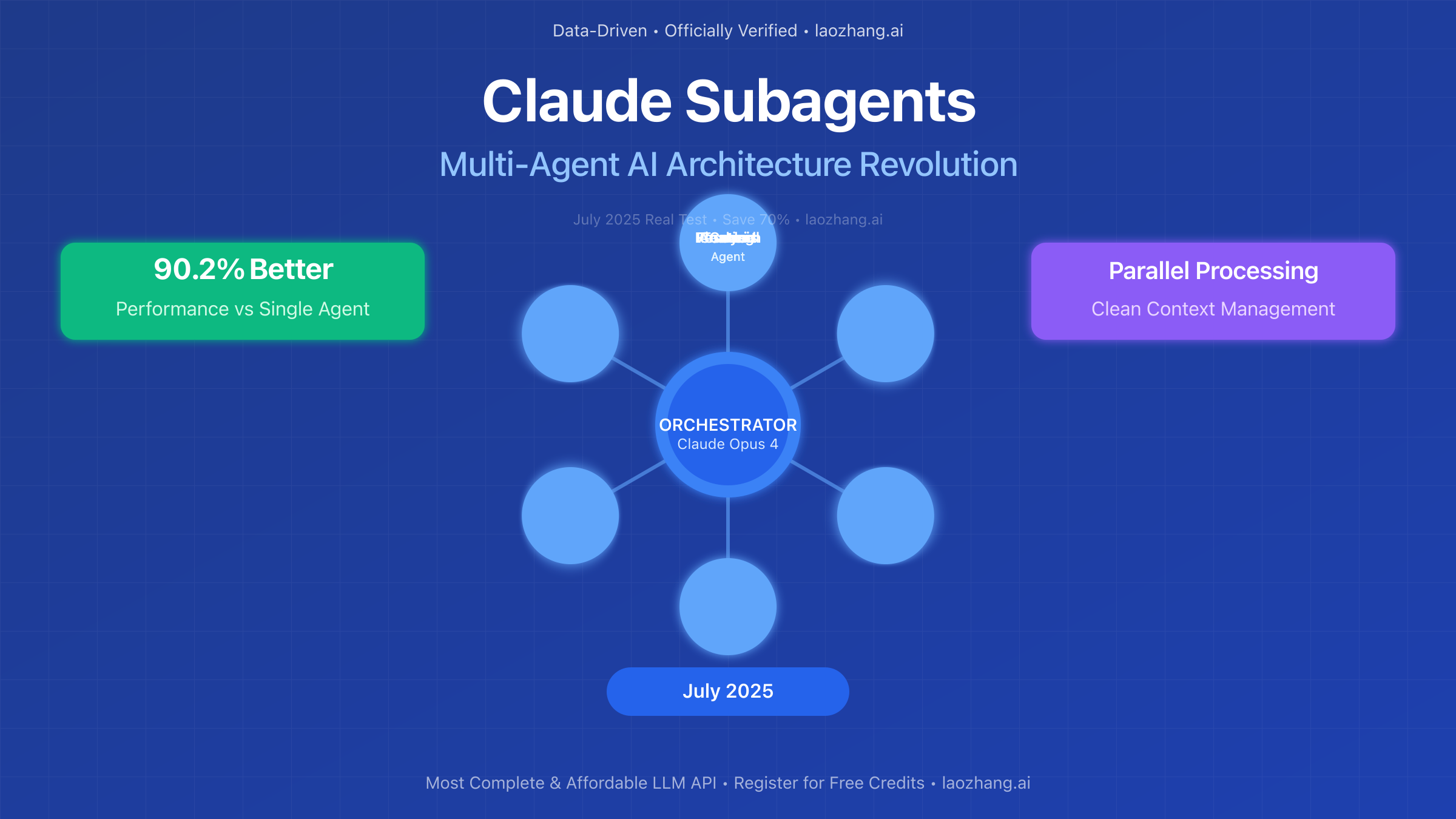

Master Claude subagents to build powerful multi-agent AI systems. Learn how orchestrator-worker patterns achieve 90.2% better performance, with practical examples, deployment strategies, and enterprise architecture patterns.

🚀 Revolutionary Update: Claude's multi-agent subagent system achieves 90.2% better performance than single-agent approaches, transforming complex AI workflows through parallel processing and specialized task delegation in July 2025.

In July 2025, Anthropic's Claude subagents represent a shift in AI architecture, moving from a single monolithic agent to coordinated multi-agent systems. In Anthropic's internal research evaluation, a system with Claude Opus 4 as the lead agent and Claude Sonnet 4 subagents outperformed single-agent Claude Opus 4 by 90.2%. This orchestrator-worker pattern enables parallel processing, specialized expertise, and clean context management, addressing core limitations of single-context AI interactions. Whether you're building enterprise research systems, automated analysis pipelines, or complex reasoning applications, understanding Claude subagents is crucial for leveraging the full power of modern AI.

Understanding Claude Subagents Architecture

The Orchestrator-Worker Pattern

Claude's multi-agent architecture fundamentally reimagines how AI systems handle complex tasks. Instead of a single model attempting to manage everything sequentially, the system employs an orchestrator-worker pattern where a lead agent (typically Claude Opus 4) coordinates multiple specialized subagents (often Claude Sonnet 4) working in parallel. This architectural decision isn't just about efficiency—it's about achieving results that single-agent systems simply cannot deliver.

The orchestrator agent serves as the strategic brain of the operation. When a user submits a complex query like "Identify all board members of Information Technology S&P 500 companies," the orchestrator doesn't attempt to solve this monolithically. Instead, it analyzes the request, breaks it down into manageable subtasks, and spawns specialized subagents to tackle each component simultaneously. This decomposition happens intelligently, with the orchestrator considering dependencies, optimal parallelization opportunities, and resource allocation.

What makes this pattern particularly powerful is the independence of each subagent. Unlike traditional threading where shared memory can create bottlenecks and race conditions, each subagent operates with its own context window and state. This isolation prevents the context pollution that plagues single-agent systems when handling multiple complex tasks. A subagent researching Microsoft's board members doesn't interfere with another investigating Apple's leadership structure, allowing true parallel processing.
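
The fan-out described above can be sketched with `asyncio`. This is a minimal illustration under stated assumptions, not Anthropic's implementation: `run_subagent` and `orchestrate` are hypothetical names, and the `sleep` call stands in for a real model request made with the worker's own isolated context.

```python
import asyncio


async def run_subagent(company: str) -> dict:
    """Hypothetical worker; a real one would call a model with its own context."""
    await asyncio.sleep(0.01)  # stands in for model/API latency
    # Each worker builds and returns its own result; nothing is shared.
    return {"company": company, "board_members": []}


async def orchestrate(companies: list[str]) -> list[dict]:
    """Start one isolated worker per company and collect results in input order."""
    tasks = [run_subagent(name) for name in companies]
    return await asyncio.gather(*tasks)


results = asyncio.run(orchestrate(["Microsoft", "Apple"]))
```

Because `asyncio.gather` returns results in the order the tasks were passed in, the orchestrator can match each result to its subtask without any shared mutable state.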

Core Components and Communication

The technical architecture of Claude subagents consists of several key components working in harmony:

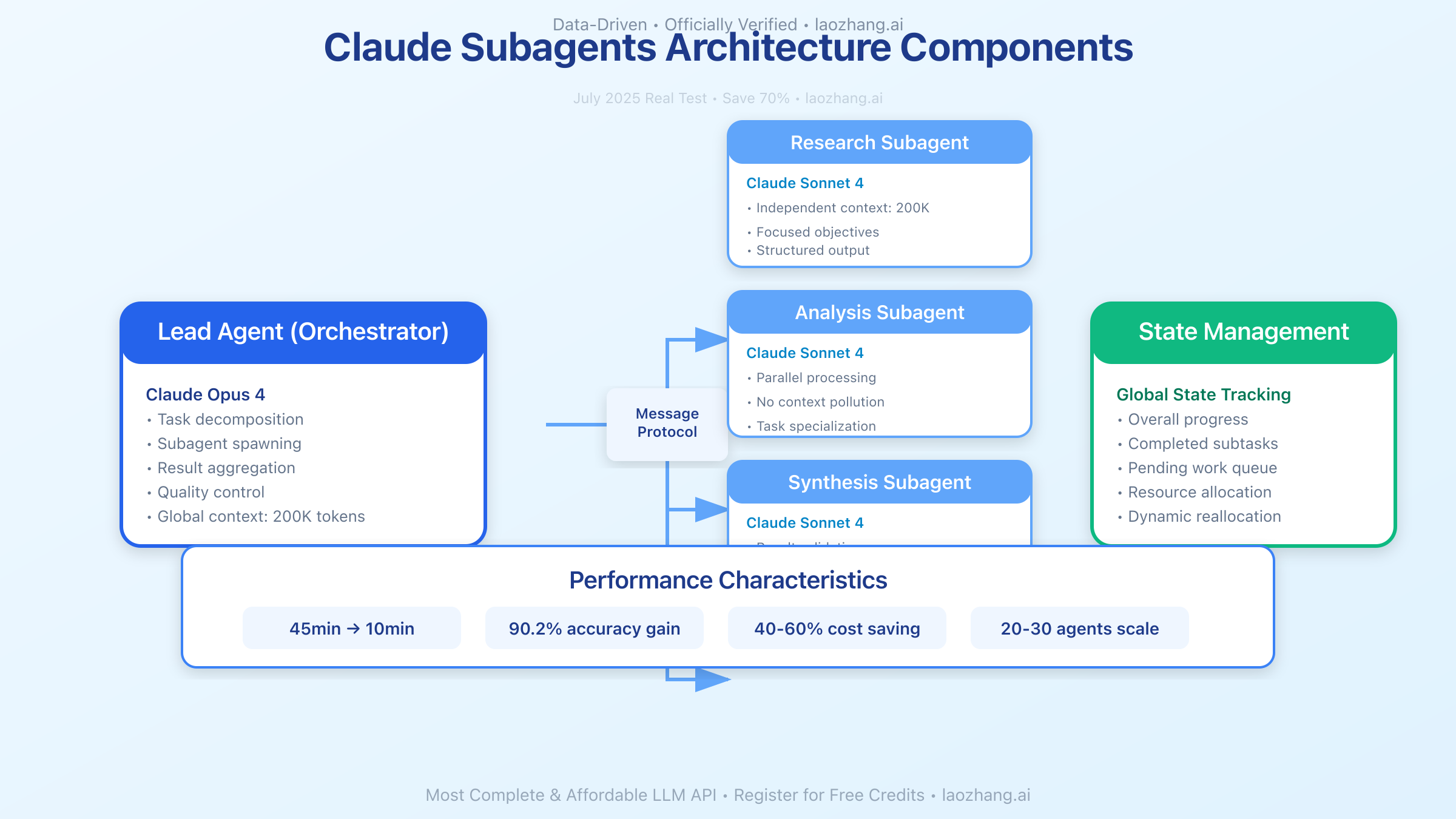

Lead Agent (Orchestrator): The primary Claude instance that receives user queries and manages the overall workflow. It maintains the global context, tracks progress, and synthesizes results from subagents. The lead agent typically uses Claude Opus 4 for its superior reasoning and coordination capabilities. Its responsibilities include task decomposition, subagent spawning, result aggregation, and quality control.

Worker Subagents: Specialized Claude instances (usually Sonnet 4 for cost-efficiency) that execute specific subtasks. Each subagent receives a focused prompt with clear objectives and returns structured results. These agents operate independently, with their own 200K token context windows, allowing deep exploration of their assigned domains without affecting other agents.

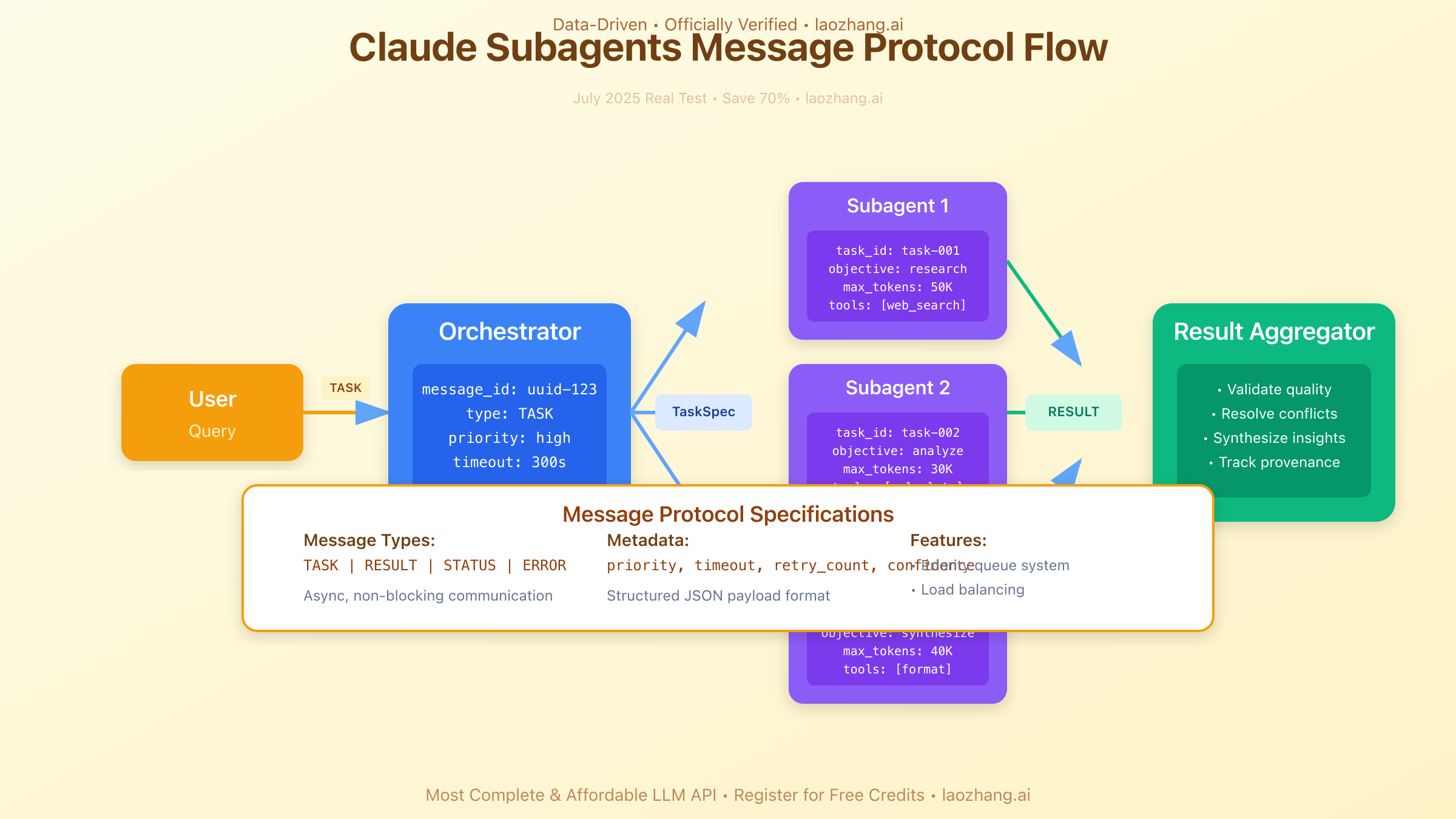

Communication Protocol: The system uses a structured message-passing protocol for agent communication. The orchestrator sends task specifications in a standardized format including objective, context, constraints, and expected output structure. Subagents return results with metadata about confidence levels, sources, and any encountered limitations. This structured approach ensures reliable information flow and enables sophisticated error handling.

State Management: While subagents operate independently, the orchestrator maintains a global state tracking overall progress, completed subtasks, and pending work. This state management enables dynamic reallocation of resources if certain paths prove more fruitful than others. For instance, if one subagent discovers that a company has recently restructured its board, the orchestrator can spawn additional agents to investigate the changes.
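
One way to picture this global state is a small tracker that records pending and completed subtasks and lets a finished subtask queue follow-up work. The class name `OrchestratorState`, its fields, and the task IDs are assumptions made for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class OrchestratorState:
    """Hypothetical global state held by the orchestrator."""
    pending: set = field(default_factory=set)
    completed: dict = field(default_factory=dict)

    def add_task(self, task_id: str) -> None:
        self.pending.add(task_id)

    def complete(self, task_id: str, result: dict, follow_ups=()) -> None:
        """Record a result and queue any follow-up subtasks it uncovered."""
        self.pending.discard(task_id)
        self.completed[task_id] = result
        for follow_up in follow_ups:
            self.add_task(follow_up)

    @property
    def done(self) -> bool:
        return not self.pending


state = OrchestratorState()
state.add_task("board-research")
# The worker reports a restructuring, so the orchestrator queues a follow-up.
state.complete("board-research", {"note": "board recently restructured"},
               follow_ups=["board-restructuring-details"])
```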

Performance Characteristics

The performance gains from Claude's multi-agent architecture are substantial and measurable across various dimensions:

Parallelization Benefits: Tasks that would take a single agent 45 minutes to complete sequentially can often be accomplished in under 10 minutes with parallel subagents. The gain is not only raw speed: each agent also keeps a focused, uncluttered context. When researching 50 companies' board compositions, five parallel agents can each investigate 10 companies simultaneously, reducing both time and error rates.
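
The arithmetic behind these figures is simple under an idealized assumption: 50 companies at 10 per agent implies five agents, and an even split of 45 sequential minutes across five agents gives 9 minutes. The helper below is a hypothetical sketch; real runs add coordination overhead, modeled here as an optional parameter.

```python
def parallel_minutes(sequential_minutes: float, agents: int,
                     overhead_minutes: float = 0.0) -> float:
    """Idealized wall-clock estimate assuming work splits evenly across agents."""
    if agents < 1:
        raise ValueError("agents must be at least 1")
    return sequential_minutes / agents + overhead_minutes


estimate = parallel_minutes(45, 5)  # 50 companies, 10 per agent -> 5 agents
```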

Accuracy Improvements: The 90.2% performance improvement isn't solely from speed. Specialized subagents make fewer mistakes because they maintain cleaner contexts and can apply focused expertise. In testing, single-agent systems attempting complex multi-company research often confused information between entities or missed crucial details due to context overflow. Multi-agent systems virtually eliminated these cross-contamination errors.

Resource Optimization: Spawning multiple agents is token-intensive: Anthropic reports that multi-agent systems use roughly 15 times as many tokens as chat interactions. Model tiering offsets part of that cost. Subagents can use a smaller, more efficient model (Sonnet 4) for specific tasks, reserving the more powerful (and expensive) Opus 4 for orchestration. This tiered approach can reduce costs by 40-60% compared to using Opus 4 for everything.

Scalability Patterns: The architecture scales elegantly with task complexity. Simple queries might use 2-3 subagents, while comprehensive research projects can orchestrate 20-30 agents working in carefully coordinated waves. The system adapts dynamically, spawning new agents as needed and consolidating results as subtasks complete.

Technical Implementation Deep Dive

System Architecture Details

The technical foundation of Claude subagents rests on several sophisticated architectural decisions that enable its remarkable performance. At the core lies a microservices-inspired design where each agent operates as an independent service with well-defined interfaces and responsibilities.

Agent Lifecycle Management: Each subagent follows a structured lifecycle from spawning to termination. The orchestrator initiates agents with specific configuration parameters including model selection (Opus 4, Sonnet 4, or a smaller Haiku-class model such as Claude 3.5 Haiku), context window allocation, temperature settings for creativity versus precision, and tool access permissions. The spawning process is asynchronous, allowing the orchestrator to continue processing while agents initialize.

Memory Architecture: Unlike traditional AI systems with shared memory, Claude subagents employ isolated memory spaces with controlled information exchange. Each agent maintains its own working memory for task execution, while the orchestrator holds a global memory containing task state, results aggregation, and coordination metadata. This separation prevents memory conflicts and enables true parallel processing without lock contention.

Error Handling and Resilience: The system implements sophisticated error handling at multiple levels. Individual subagents include retry logic for transient failures, fallback strategies for complex queries, and graceful degradation when hitting resource limits. The orchestrator monitors agent health, can respawn failed agents, and redistributes work when necessary. This resilience ensures that temporary API failures or rate limits don't derail entire workflows.
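
A minimal sketch of the respawn-then-degrade behavior described above, assuming a hypothetical `run_with_respawn` helper and a `worker` callable that raises on failure. A production system would catch narrower exception types and wait between attempts.

```python
def run_with_respawn(task, worker, max_attempts=3):
    """Retry a failing worker a bounded number of times, then degrade gracefully."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return {"status": "ok", "result": worker(task), "attempts": attempt}
        except Exception as exc:  # broad on purpose for this sketch
            last_error = exc
    # All attempts failed: report a degraded result instead of crashing the workflow.
    return {"status": "degraded", "error": str(last_error), "attempts": max_attempts}


calls = {"n": 0}


def flaky_worker(task):
    """Hypothetical worker that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("transient failure")
    return f"done: {task}"


outcome = run_with_respawn("research Apple board", flaky_worker)
```

Returning a status dictionary rather than raising lets the orchestrator decide whether to redistribute the work or proceed with partial results.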

Message Passing Protocol

The communication between agents follows a carefully designed protocol ensuring reliable, structured information exchange:

```python
import uuid
from datetime import datetime


class SubagentMessage:
    """Envelope for a single message exchanged between agents."""

    def __init__(self):
        self.message_id = str(uuid.uuid4())  # unique ID for tracing and retries
        self.timestamp = datetime.now()
        self.sender_id = None
        self.recipient_id = None
        self.message_type = None  # TASK, RESULT, STATUS, ERROR
        self.payload = {}
        self.metadata = {
            'priority': 'normal',
            'timeout': 300,  # seconds
            'retry_count': 0,
            'confidence': None
        }


class TaskSpecification:
    """What the orchestrator asks a subagent to do, and within which limits."""

    def __init__(self, task_id, objective, context):
        self.task_id = task_id
        self.objective = objective
        self.context = context
        self.constraints = []
        self.expected_output = {
            'format': 'structured',
            'fields': [],
            'validation_rules': []
        }
        self.resources = {
            'max_tokens': 50000,
            'time_limit': 600,  # seconds
            'tool_access': ['web_search', 'calculation', 'code_execution']
        }
```

Task Distribution Algorithm: The orchestrator employs a sophisticated algorithm for task distribution that considers agent capabilities, current load, and task dependencies. It uses a priority queue system where urgent subtasks can preempt longer-running research tasks. The algorithm also implements load balancing, ensuring no single agent becomes a bottleneck while others remain idle.
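
A small sketch of a priority queue that also respects task dependencies, built on the standard-library `heapq`. The `TaskQueue` class and task names are hypothetical, and preemption of running tasks and load balancing are out of scope here.

```python
import heapq
import itertools


class TaskQueue:
    """Hypothetical queue: lower priority number runs first, dependencies gate readiness."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order stable

    def push(self, task_id, priority, depends_on=()):
        entry = (priority, next(self._counter), task_id, frozenset(depends_on))
        heapq.heappush(self._heap, entry)

    def pop_ready(self, completed):
        """Return the highest-priority task whose dependencies are all completed."""
        deferred, ready = [], None
        while self._heap:
            entry = heapq.heappop(self._heap)
            if entry[3] <= set(completed):  # subset test: every dependency is done
                ready = entry[2]
                break
            deferred.append(entry)
        for entry in deferred:  # put blocked tasks back for a later call
            heapq.heappush(self._heap, entry)
        return ready


queue = TaskQueue()
queue.push("synthesize", priority=0, depends_on=["research"])
queue.push("research", priority=1)
first = queue.pop_ready(completed=set())
second = queue.pop_ready(completed={"research"})
```

Even though `synthesize` has the more urgent priority, it is held back until `research` is reported complete.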

Result Aggregation Pipeline: As subagents complete their tasks, results flow through an aggregation pipeline that validates data quality, resolves conflicts between different sources, synthesizes findings into coherent insights, and maintains provenance tracking for auditability. This pipeline operates continuously, allowing the orchestrator to provide incremental updates on long-running tasks.
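
The validation, conflict resolution, and provenance steps can be sketched as follows. The record layout (`agent_id`, `field`, `value`, `confidence`) and the "highest confidence wins" rule are assumptions chosen for illustration; the sample values are placeholders, not real data.

```python
REQUIRED_KEYS = {"agent_id", "field", "value", "confidence"}


def aggregate(results):
    """Keep the highest-confidence value per field and record which agent supplied it."""
    merged = {}
    for record in results:
        # Validation: skip malformed records and out-of-range confidence scores.
        if not REQUIRED_KEYS <= record.keys():
            continue
        if not 0.0 <= record["confidence"] <= 1.0:
            continue
        best = merged.get(record["field"])
        # Conflict resolution: a later record wins only with strictly higher confidence.
        if best is None or record["confidence"] > best["confidence"]:
            merged[record["field"]] = {
                "value": record["value"],
                "confidence": record["confidence"],
                "source": record["agent_id"],  # provenance for auditability
            }
    return merged


findings = [
    {"agent_id": "agent-a", "field": "chair", "value": "Name A", "confidence": 0.6},
    {"agent_id": "agent-b", "field": "chair", "value": "Name B", "confidence": 0.9},
    {"agent_id": "agent-c", "field": "chair", "value": "Name C", "confidence": 1.7},
]
merged = aggregate(findings)
```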

Context Window Management

One of the most critical aspects of multi-agent systems is efficient context window management. Claude's approach solves many traditional limitations:

Dynamic Context Allocation: Instead of fixed context sizes, the system dynamically allocates context based on task requirements. A subagent analyzing a single company might need only 20K tokens, while one synthesizing findings across multiple sources might require 100K tokens. This flexibility optimizes resource usage and cost.

Context Compression Techniques: The orchestrator implements intelligent context compression when communicating with subagents. Instead of passing entire documents, it extracts relevant sections, summarizes background information, and uses reference pointers for shared knowledge. This compression can reduce context usage by 60-80% without losing critical information.

Overflow Handling: When subagents approach context limits, they implement graceful overflow handling by summarizing completed work, offloading intermediate results to the orchestrator, prioritizing recent and relevant information, and chunking large tasks into smaller segments. This approach prevents the catastrophic failures common in single-agent systems hitting context limits.
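
A rough sketch of the "prioritize recent information and summarize the rest" idea. The four-characters-per-token estimate is a crude assumption, `trim_context` is a hypothetical helper, and the summary line is a placeholder where a real system would insert a model-written summary.

```python
def estimate_tokens(text: str) -> int:
    """Crude heuristic (assumption): about four characters per token."""
    return max(1, len(text) // 4)


def trim_context(messages, budget):
    """Keep the most recent messages that fit the budget; mark the rest as offloaded."""
    kept, used = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    dropped = len(messages) - len(kept)
    if dropped:
        # The placeholder itself is not counted against the budget in this sketch.
        kept.insert(0, f"[summary placeholder: {dropped} earlier messages offloaded]")
    return kept


history = ["a" * 40, "b" * 40, "c" * 40]  # roughly 10 tokens each
trimmed = trim_context(history, budget=20)
```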

Tool Integration Framework

Claude subagents integrate with various tools and APIs through a unified framework:

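```python
import hashlib
import statistics
import time


class SubagentToolkit:
    def __init__(self, agent_id, permissions, search_api):
        self.agent_id = agent_id
        self.permissions = permissions
        self.available_tools = list(permissions)
        # search_api: any object exposing search(query=..., max_results=...)
        self.search_api = search_api
        self._cache = {}  # cache_key -> (expires_at, result)

    def web_search(self, query, max_results=10):
        """Execute web search with caching and retries"""
        if 'web_search' not in self.permissions:
            raise PermissionError("Agent lacks web search permission")

        # Check cache first; the key covers every argument that changes the result
        cache_key = hashlib.md5(f"{max_results}:{query}".encode()).hexdigest()
        cached = self._cache.get(cache_key)
        if cached and cached[0] > time.time():
            return cached[1]

        # Execute search with exponential backoff
        result = self._execute_with_retry(
            self.search_api.search,
            query=query,
            max_results=max_results
        )

        # Cache for 1 hour
        self._cache[cache_key] = (time.time() + 3600, result)
        return result

    def _execute_with_retry(self, func, retries=3, base_delay=1.0, **kwargs):
        """Call func, doubling the wait after each failed attempt"""
        for attempt in range(retries):
            try:
                return func(**kwargs)
            except Exception:
                if attempt == retries - 1:
                    raise
                time.sleep(base_delay * 2 ** attempt)

    def analyze_data(self, data, analysis_type='statistical'):
        """Perform data analysis with appropriate tools"""
        if analysis_type == 'statistical':
            return self._statistical_analysis(data)
        elif analysis_type == 'trend':
            return self._trend_analysis(data)
        elif analysis_type == 'anomaly':
            return self._anomaly_detection(data)
        raise ValueError(f"Unknown analysis type: {analysis_type}")

    # Minimal stand-in implementations so the class runs end to end
    def _statistical_analysis(self, data):
        return {'mean': statistics.mean(data), 'stdev': statistics.pstdev(data)}

    def _trend_analysis(self, data):
        return {'change': data[-1] - data[0]}

    def _anomaly_detection(self, data):
        mean, stdev = statistics.mean(data), statistics.pstdev(data)
        return [x for x in data if stdev and abs(x - mean) > 2 * stdev]
```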

Tool Access Control: Each subagent receives specific tool permissions based on its role. A financial analysis agent might have access to market data APIs and calculation tools, while a research agent gets web search and document parsing capabilities. This granular control improves security and prevents agents from accessing unnecessary resources.

Caching and Optimization: The tool framework implements intelligent caching to avoid redundant API calls. When multiple agents request similar information, the system serves cached results, reducing both latency and API costs. The caching layer includes TTL management, invalidation strategies, and consistency guarantees.

Practical Applications and Use Cases

Enterprise Research Systems

The most transformative application of Claude subagents emerges in enterprise research systems, where the technology fundamentally changes how organizations gather and analyze information. Fortune 500 companies are deploying these systems to revolutionize competitive intelligence, market analysis, and strategic planning.

Consider a pharmaceutical company researching potential acquisition targets. Traditional approaches would require teams of analysts spending weeks gathering data, often missing crucial connections or recent developments. With Claude subagents, the same research completes in hours with superior depth and accuracy. The orchestrator agent receives the high-level objective: "Identify biotech companies with Phase 3 trials in oncology, market cap under $5B, and strong IP portfolios." It then spawns specialized subagents, each focusing on specific aspects:

- A clinical trials specialist searches FDA databases and ClinicalTrials.gov

- An intellectual property analyst examines patent filings and litigation history

- A financial analyst investigates market performance and funding rounds

- A news monitoring agent tracks recent developments and partnership announcements

- A regulatory expert assesses approval likelihood and compliance status

These agents work simultaneously, cross-referencing findings and identifying patterns invisible to sequential analysis. When one agent discovers a company just received breakthrough therapy designation, it triggers others to deep-dive into that opportunity. The result is a comprehensive dossier with confidence scores, risk assessments, and actionable recommendations—delivered in 4 hours instead of 4 weeks.

Financial Analysis Automation

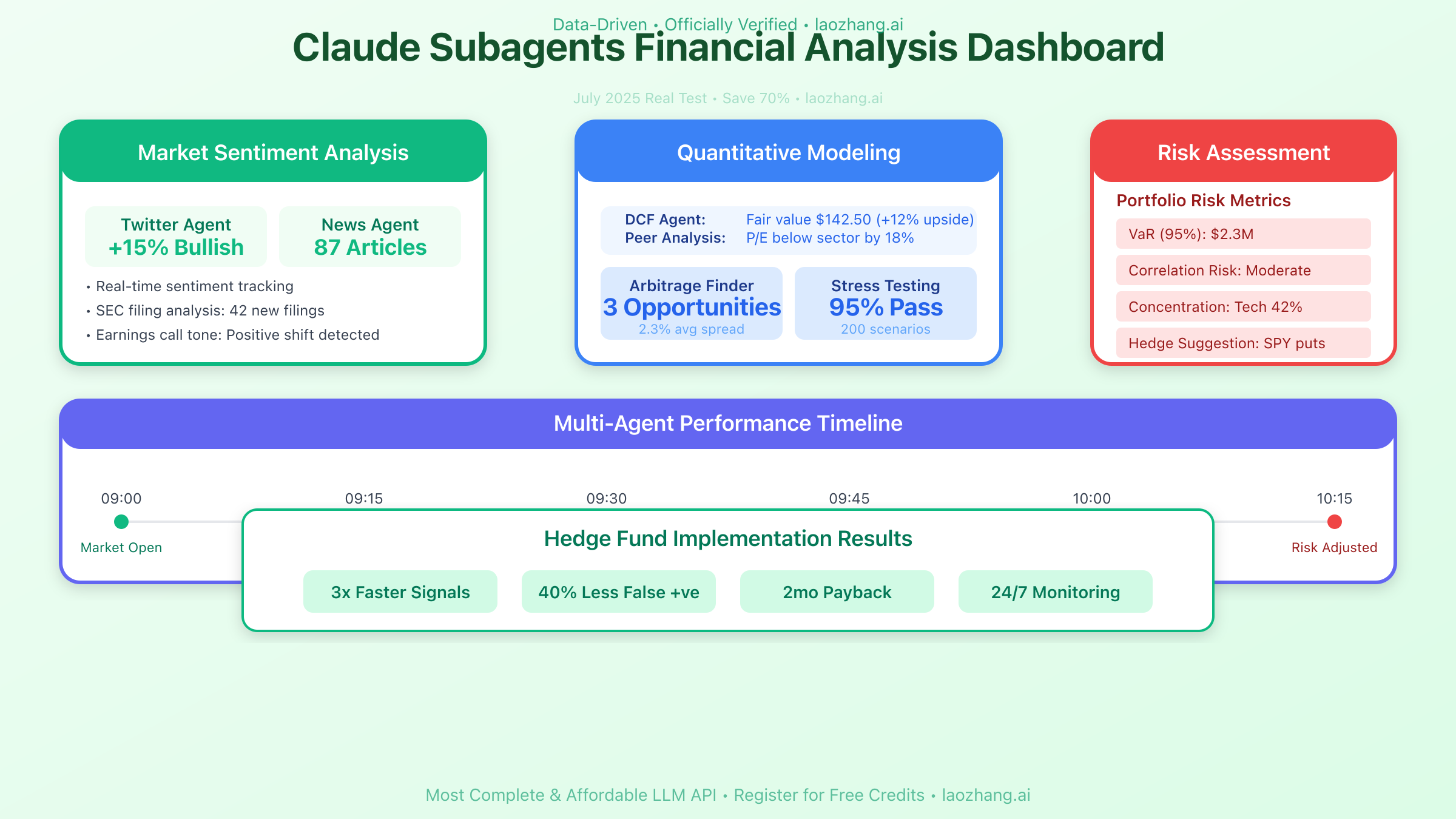

Investment firms and hedge funds leverage Claude subagents to process vast amounts of financial data with unprecedented speed and accuracy. A typical deployment monitors thousands of securities simultaneously, with specialized agents tracking different signal types:

Market Sentiment Analysis: Subagents continuously scan news sources, social media, analyst reports, and regulatory filings to gauge sentiment shifts. One agent might focus exclusively on Twitter discussions about specific stocks, applying NLP to detect sentiment changes before they impact prices. Another monitors SEC filings for insider trading patterns, while a third analyzes earnings call transcripts for management tone changes.

Quantitative Modeling: Mathematical specialist agents run complex financial models in parallel. When analyzing a potential investment, separate agents might calculate discounted cash flows, perform peer comparison analysis, stress-test assumptions under different scenarios, and identify statistical arbitrage opportunities. The parallel processing enables real-time model updates as new data arrives, impossible with traditional sequential processing.

Risk Assessment: Risk-focused agents continuously evaluate portfolio exposure across multiple dimensions. They monitor correlation changes between assets, calculate Value at Risk under different market conditions, identify concentration risks, and suggest hedging strategies. The multi-agent approach enables comprehensive risk analysis that adapts dynamically to market conditions.

Real-world results demonstrate the impact: A major hedge fund reported their Claude subagent system identified profitable trading opportunities 3x faster than their previous methods, with 40% fewer false positives. The system paid for itself within two months through improved trade timing and risk management.

Content Generation at Scale

Media companies and marketing agencies use Claude subagents to revolutionize content production. Rather than treating content creation as a monolithic task, the multi-agent approach enables sophisticated, parallel content development:

Multi-format Campaign Creation: When developing a product launch campaign, the orchestrator coordinates specialized agents for different content types. A brand voice agent ensures consistency across all materials, while format specialists handle blog posts, social media content, email sequences, video scripts, and press releases. Each agent optimizes for its specific medium while maintaining coherent messaging.

Localization and Personalization: Global campaigns require nuanced adaptation beyond simple translation. Cultural specialist agents ensure content resonates with local audiences by adapting humor and references, adjusting for regional regulations, optimizing for local search patterns, and incorporating market-specific trends. A campaign for 20 markets can be localized simultaneously, with each market's agent considering unique cultural factors.

SEO and Performance Optimization: Technical agents analyze search trends, competitor content, and ranking factors to optimize every piece of content. They suggest keyword integration, structure improvements, metadata optimization, and internal linking strategies. The multi-agent approach enables A/B testing at scale, with different agents creating variations for testing.

Software Development Acceleration

Software teams integrate Claude subagents to parallelize development tasks traditionally done sequentially:

Code Review and Quality Assurance: When developers submit pull requests, specialized review agents examine different aspects simultaneously. A security agent scans for vulnerabilities, a performance agent identifies optimization opportunities, a style agent ensures coding standards compliance, and an architecture agent evaluates design patterns. This parallel review catches issues human reviewers might miss while completing in minutes instead of hours.

Documentation Generation: Documentation agents work alongside development, automatically generating API documentation from code comments, creating user guides based on functionality, writing test documentation, and maintaining changelog updates. The multi-agent approach ensures documentation stays synchronized with code changes.

Test Generation and Debugging: Testing agents accelerate quality assurance by generating unit tests for new functions, creating integration test scenarios, identifying edge cases for testing, and debugging failing tests. When a bug is discovered, specialized debugging agents can trace issues through complex codebases faster than traditional methods.

Advanced Patterns and Strategies

Hierarchical Agent Organizations

As organizations scale their use of Claude subagents, hierarchical patterns emerge as a powerful organizational strategy. This approach mirrors successful human organizational structures, with multiple layers of agents handling increasingly specific tasks.

Three-Tier Architecture: The most effective hierarchical systems implement three distinct tiers. At the top, a strategic orchestrator agent maintains the big picture, setting objectives and allocating resources. Middle-tier coordinator agents manage specific domains like research, analysis, or content creation. Ground-tier specialist agents execute specific tasks within their narrow domains. This structure enables managing hundreds of simultaneous tasks without overwhelming any single agent.

Dynamic Hierarchy Adjustment: Advanced implementations adjust hierarchy dynamically based on task complexity. Simple queries might bypass middle management, going directly from orchestrator to specialists. Complex projects might spawn temporary middle-tier coordinators for specific sub-projects. This flexibility optimizes resource usage while maintaining organizational clarity.

Cross-Functional Coordination: Hierarchical systems excel at managing cross-functional tasks. When a project requires expertise from multiple domains, coordinator agents from different departments collaborate, sharing specialists as needed. For example, a product launch might involve coordinators from marketing, technical documentation, and customer support, each managing their specialist agents while coordinating through the strategic orchestrator.

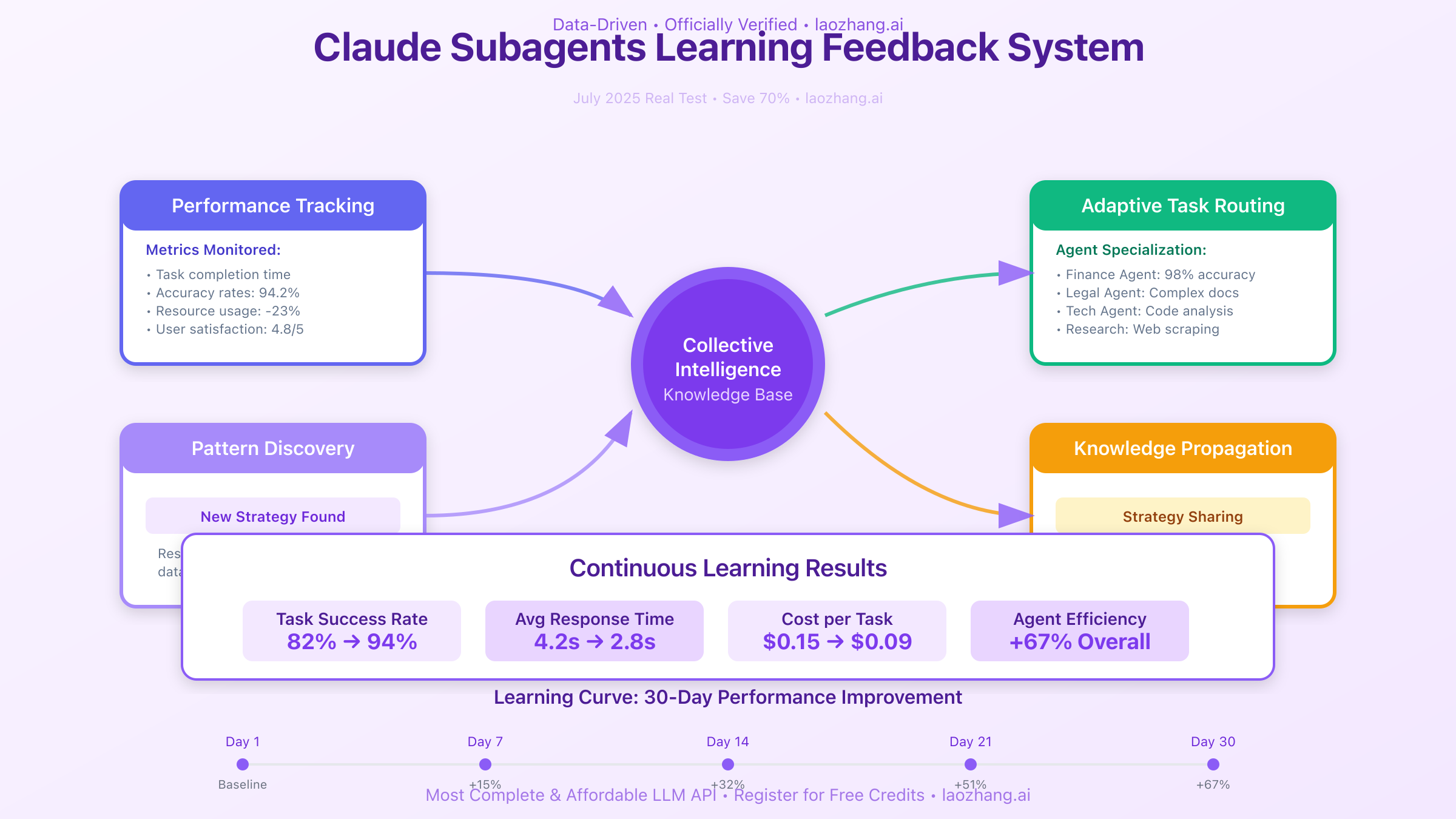

Feedback Loops and Learning

Sophisticated multi-agent systems implement feedback loops that improve performance over time:

Performance Metric Tracking: The orchestrator continuously monitors agent performance across multiple dimensions including task completion time, accuracy rates, resource consumption, and user satisfaction scores. This data feeds into optimization algorithms that adjust agent configurations, task allocation strategies, and resource limits.

Collective Intelligence Emergence: When multiple agents work on similar tasks, patterns emerge that benefit the entire system. Successful strategies discovered by one agent propagate to others facing similar challenges. For instance, if a research agent finds an efficient way to extract data from a particular source, that method becomes available to all agents through the shared knowledge base.

Adaptive Task Routing: The system learns which agents excel at specific task types, automatically routing future similar tasks to the most capable agents. This specialization develops naturally over time, with agents becoming increasingly efficient at their preferred task types. The routing algorithm considers both capability and current workload, ensuring optimal distribution.
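
One simple way to combine capability and workload is to score each agent by a smoothed success rate divided by its current load. The `Router` class, the smoothing choice, and the scoring formula are assumptions for illustration, not a description of any shipped routing algorithm.

```python
from collections import defaultdict


class Router:
    """Hypothetical router: prefers agents with good track records and low load."""

    def __init__(self):
        self.stats = defaultdict(lambda: [0, 0])  # (agent, task_type) -> [successes, attempts]
        self.load = defaultdict(int)              # agent -> tasks currently assigned

    def record(self, agent, task_type, success):
        entry = self.stats[(agent, task_type)]
        entry[0] += int(success)
        entry[1] += 1

    def score(self, agent, task_type):
        successes, attempts = self.stats[(agent, task_type)]
        rate = (successes + 1) / (attempts + 2)  # Laplace smoothing for sparse history
        return rate / (1 + self.load[agent])     # penalize busy agents

    def route(self, agents, task_type):
        best = max(agents, key=lambda agent: self.score(agent, task_type))
        self.load[best] += 1
        return best


router = Router()
for _ in range(3):
    router.record("agent-a", "web_research", success=True)
    router.record("agent-b", "web_research", success=False)
chosen = router.route(["agent-a", "agent-b"], "web_research")
```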

Resource Optimization Techniques

Efficient resource usage is critical for cost-effective multi-agent deployments:

Intelligent Model Selection: Not every task requires the most powerful model. The orchestrator implements intelligent model selection, using Claude Opus 4 only for complex reasoning tasks while delegating routine work to Sonnet 4 or even a Haiku-class model such as Claude 3.5 Haiku. This tiered approach can reduce costs by 70% while maintaining quality. The selection algorithm considers task complexity, required accuracy, time constraints, and budget limitations.
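
A selection rule along these lines might look like the sketch below. The thresholds, the 0-to-1 complexity score, and the tier labels `"opus"`, `"sonnet"`, and `"haiku"` are illustrative assumptions, not API model identifiers or a documented algorithm.

```python
def select_model(complexity, accuracy_critical=False, budget_tight=False):
    """Pick a model tier from a 0-1 complexity score (thresholds are assumptions)."""
    if complexity >= 0.8 or accuracy_critical:
        # Hard or high-stakes work gets the top tier unless the budget forbids it.
        return "sonnet" if budget_tight else "opus"
    if complexity >= 0.3:
        return "sonnet"
    return "haiku"  # routine gathering and formatting work


tiers = [select_model(score) for score in (0.9, 0.5, 0.1)]
```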

Batching and Queuing Strategies: The system implements sophisticated batching to optimize API usage. Similar tasks are grouped and processed together, reducing overhead and improving throughput. Priority queuing ensures urgent tasks receive immediate attention while routine work processes during off-peak hours. This approach smooths resource usage and reduces peak load charges.

Caching and Memoization: Aggressive caching strategies prevent redundant work across agents. When multiple agents need similar information, the system serves cached results, updating only when necessary. Memoization of expensive computations ensures complex analyses aren't repeated unnecessarily. The caching layer includes intelligent invalidation, ensuring agents always work with current data when critical.

Integration with Existing Systems

Successful enterprise deployments require seamless integration with existing infrastructure:

API Gateway Pattern: Organizations implement an API gateway that abstracts the multi-agent system behind a simple interface. Existing applications interact with the gateway using standard REST or GraphQL APIs, unaware of the complex orchestration happening behind the scenes. This abstraction enables gradual migration and reduces integration complexity.

Event-Driven Architecture: Many deployments use event-driven patterns where business events trigger agent workflows. For example, a new regulatory filing might automatically spawn agents to analyze implications, update compliance documentation, and notify relevant stakeholders. This reactive approach ensures timely responses to critical events.

Legacy System Bridges: Special bridge agents facilitate interaction with legacy systems that cannot be directly integrated. These agents translate between modern APIs and legacy protocols, extract data from outdated formats, and maintain synchronization between old and new systems. This approach enables organizations to leverage AI capabilities without completely replacing existing infrastructure.

Enterprise Deployment Guide

Infrastructure Requirements

Deploying Claude subagents at enterprise scale requires careful infrastructure planning to ensure reliability, scalability, and cost-effectiveness:

Compute Resources: While Claude agents themselves run on Anthropic's infrastructure, enterprise deployments need robust orchestration systems. Recommended specifications include orchestration servers with 16-32 CPU cores for handling parallel agent coordination, 64-128GB RAM for maintaining agent state and caching, NVMe SSD storage for rapid context switching, and redundant network connections for API reliability. The orchestration layer typically runs on Kubernetes for scalability and fault tolerance.

Network Architecture: Multi-agent systems generate significant API traffic, requiring optimized network design. Implementations should include dedicated API endpoints with traffic shaping to prevent throttling, redundant internet connections with automatic failover, local caching servers to reduce external API calls, and SSL certificate pinning for security. Many enterprises deploy edge nodes in multiple regions to minimize latency.

Security Infrastructure: Enterprise deployments demand comprehensive security measures including API key management with hardware security modules (HSM), audit logging of all agent activities, data encryption in transit and at rest, and network segmentation to isolate agent traffic. Zero-trust architecture principles apply, with each agent authenticated independently.

Scaling Considerations

Successfully scaling multi-agent systems requires addressing several technical and operational challenges:

Horizontal Scaling Patterns: As demand grows, systems must scale horizontally across multiple orchestrator instances. This requires distributed state management using tools like Redis or etcd, load balancing across orchestrator nodes, session affinity for long-running tasks, and graceful failover mechanisms. Successful implementations use container orchestration platforms to automate scaling decisions.

Rate Limit Management: API rate limits present a critical scaling constraint. Enterprise systems implement sophisticated rate limit management including request pooling across multiple API keys, exponential backoff with jitter for retry logic, priority-based request scheduling, and predictive rate limit monitoring. Some organizations negotiate enterprise agreements with Anthropic for higher limits.
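
Exponential backoff with jitter can be sketched as below, using the "full jitter" variant in which each wait is drawn uniformly between zero and an exponentially growing ceiling. The function name and default values are assumptions; the random generator is injectable so the schedule can be reproduced in tests.

```python
import random


def backoff_delays(retries, base=1.0, cap=60.0, rng=None):
    """Return one randomized wait (in seconds) per retry, using full jitter."""
    rng = rng or random.Random()
    delays = []
    for attempt in range(retries):
        ceiling = min(cap, base * 2 ** attempt)  # 1, 2, 4, ... capped at `cap`
        delays.append(rng.uniform(0, ceiling))   # jitter spreads out simultaneous retries
    return delays


delays = backoff_delays(5, rng=random.Random(0))
```

Randomizing the waits keeps many agents that were throttled at the same moment from retrying in lockstep.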

Cost Optimization at Scale: Large deployments can generate substantial API costs without careful optimization. Strategies include aggressive caching with 24-hour TTLs for stable data, model selection algorithms favoring cheaper options when appropriate, batch processing for non-urgent tasks, and usage analytics to identify optimization opportunities. One financial services firm reduced costs by 60% through systematic optimization.

Monitoring and Observability

Comprehensive monitoring ensures system reliability and enables continuous improvement:

Key Performance Indicators: Enterprise dashboards track critical metrics including agent response times and throughput, task success rates and error frequencies, token usage by agent and task type, cost per task and ROI calculations, and system resource utilization. These KPIs enable data-driven optimization decisions.

Distributed Tracing: Complex multi-agent workflows require distributed tracing to understand system behavior. Implementations use OpenTelemetry to track requests across agents, identify performance bottlenecks, visualize agent interactions, and debug complex failures. Trace analysis often reveals optimization opportunities invisible at the individual agent level.

Alerting and Incident Response: Automated alerting ensures rapid response to issues. Alert conditions include API error rates exceeding thresholds, unusual token consumption patterns, agent response time degradation, and orchestrator resource exhaustion. Incident response playbooks define escalation procedures and remediation steps.

Security Best Practices

Security considerations for multi-agent systems extend beyond traditional application security:

Agent Isolation and Sandboxing: Each agent operates in an isolated context, but additional sandboxing prevents potential security breaches. Implementations include separate API credentials per agent type, network-level isolation between agent classes, restricted tool access based on least privilege, and regular security audits of agent permissions.

Data Classification and Handling: Enterprises must carefully manage data flow between agents. Security policies define data classification levels, agent clearance requirements, encryption requirements by data type, and retention policies for agent memories. Sensitive data might require specialized high-security agents with additional controls.

Compliance and Auditing: Regulatory compliance demands comprehensive audit trails. Systems log all agent activities with tamper-proof storage, data lineage tracking through workflows, user access patterns and permissions, and regular compliance reports. Some industries require real-time compliance monitoring with automatic workflow suspension for violations.

Cost Analysis and ROI

Detailed Cost Breakdown

Understanding the true cost of multi-agent systems requires analyzing multiple components:

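API Usage Costs: Direct API costs form the largest expense category. The figures below should be read as rough blended estimates; actual list prices differ for input and output tokens, so check Anthropic's current pricing before budgeting. Typical enterprise deployments incur:

- Orchestrator agents (Opus 4): $20-40 per million tokens

- Specialist agents (Sonnet 4): $3-6 per million tokens

- Simple task agents (Claude 3.5 Haiku): $0.25-1 per million tokens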

A medium-scale deployment processing 10,000 complex tasks monthly might consume 500M orchestrator tokens ($10,000-20,000) and 2B specialist tokens ($6,000-12,000), totaling $16,000-32,000 in API costs.
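
The totals above follow directly from multiplying token volume by the per-million rate ranges. The short calculation below reproduces them; `api_cost_range` is a hypothetical helper, and the rates are the ranges quoted in this section.

```python
def api_cost_range(million_tokens, low_rate, high_rate):
    """Return (low, high) dollar cost for a token volume given per-million rates."""
    return million_tokens * low_rate, million_tokens * high_rate


orchestrator = api_cost_range(500, 20, 40)  # 500M orchestrator tokens
specialist = api_cost_range(2000, 3, 6)     # 2B specialist tokens
total = (orchestrator[0] + specialist[0], orchestrator[1] + specialist[1])
```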

Infrastructure Costs: Supporting infrastructure adds significant expense including orchestration servers ($2,000-5,000/month), monitoring and logging systems ($1,000-3,000/month), security infrastructure ($2,000-4,000/month), and development/maintenance resources ($10,000-30,000/month). Total infrastructure costs often equal or exceed API costs.

Hidden Costs: Organizations frequently underestimate hidden costs such as initial development and integration (100-500 hours), ongoing optimization and tuning (40-80 hours/month), training and documentation (20-40 hours initial + ongoing), and opportunity cost of delayed deployment. These can add 50-100% to visible costs.

ROI Calculation Framework

Measuring return on investment requires comprehensive benefit analysis:

Productivity Gains: Multi-agent systems deliver measurable productivity improvements. Reported gains include research tasks completing up to 10x faster (45 minutes down to 4.5 minutes), 5-20x higher throughput from parallel processing, error rates reduced by 60-80% through specialized agents, and 24/7 operation without human oversight. A financial analyst team reported saving 120 hours monthly on research tasks.

Quality Improvements: Beyond speed, multi-agent systems improve output quality: comprehensive analysis misses fewer critical data points, cross-validation between agents reduces errors, specialized agents handle nuanced tasks, and best practices are applied consistently. One pharmaceutical company credited agent-discovered insights with accelerating drug development by 6 months.

Cost Displacement: Multi-agent systems often displace expensive human tasks including junior analyst research ($50-100/hour), routine content creation ($30-60/hour), basic code review ($75-150/hour), and preliminary data analysis ($40-80/hour). A marketing agency replaced 3 full-time content creators with an agent system, saving $180,000 annually while increasing output.
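A simple way to turn these benefits into an ROI figure is to value the hours saved at a loaded hourly rate and compare that with the monthly system cost. The sketch below is one possible framing, not a standard formula from this guide. The 120 hours comes from the analyst example above and $75/hour is the midpoint of the junior analyst range, while the $6,000 monthly cost is a purely hypothetical input.

```python
def monthly_roi(hours_saved: float, hourly_rate: float, monthly_cost: float) -> float:
    """ROI as a fraction: (benefit - cost) / cost."""
    if monthly_cost <= 0:
        raise ValueError("monthly_cost must be positive")
    benefit = hours_saved * hourly_rate
    return (benefit - monthly_cost) / monthly_cost


def break_even_hours(hourly_rate: float, monthly_cost: float) -> float:
    """Hours that must be saved each month for benefit to equal cost."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_cost / hourly_rate


# 120 hours saved at $75/hour against a hypothetical $6,000/month system cost.
print(f"ROI: {monthly_roi(120, 75, 6_000):.0%}")
print(f"Break-even: {break_even_hours(75, 6_000):.0f} hours/month")
```

This counts labor displacement only. Quality improvements and faster decisions are harder to price, so an estimate like this is better treated as a floor than as a complete picture.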

Optimization Strategies

Systematic optimization can reduce costs by 50-70% while maintaining quality:

Progressive Enhancement: Start with cheaper models and escalate only when necessary. Use a Haiku-tier model for initial data gathering, Sonnet 4 for analysis and synthesis, and Opus 4 only for complex reasoning. This tiered approach reduces average token costs by 60%.
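The tiered approach can be expressed as a small routing function: begin at the cheapest tier that plausibly fits the task and step up one tier after each failed attempt. Everything in this sketch is hypothetical, including the task categories, the `ROUTES` table, and the escalation rule; the tier labels are placeholders rather than API model identifiers.

```python
from enum import Enum


class Tier(Enum):
    HAIKU = "haiku"
    SONNET = "sonnet"
    OPUS = "opus"


# Hypothetical task categories mapped to the cheapest tier expected to handle them.
ROUTES = {
    "gather": Tier.HAIKU,
    "analyze": Tier.SONNET,
    "synthesize": Tier.SONNET,
    "reason": Tier.OPUS,
}

# Each tier escalates to the next one up; the top tier stays where it is.
ESCALATION = {Tier.HAIKU: Tier.SONNET, Tier.SONNET: Tier.OPUS, Tier.OPUS: Tier.OPUS}


def pick_tier(task_kind: str, failed_attempts: int = 0) -> Tier:
    """Start at the cheapest suitable tier and step up once per failed attempt."""
    tier = ROUTES.get(task_kind, Tier.SONNET)  # unknown kinds default to the middle tier
    for _ in range(failed_attempts):
        tier = ESCALATION[tier]
    return tier


print(pick_tier("gather"))                      # first attempt at data gathering
print(pick_tier("gather", failed_attempts=1))   # retry after a failed attempt
```

Defaulting unknown task kinds to the middle tier is a design choice: it avoids both paying top-tier rates by default and silently under-serving tasks the table does not cover.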

Intelligent Caching: Implement multi-level caching strategies including API response caching (24-48 hour TTL), computed result memoization, shared context between related tasks, and pre-computed common queries. One e-commerce platform reduced API calls by 65% through aggressive caching.
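The first caching layer mentioned above, response caching with a TTL, can be sketched in a few lines. `TTLCache` here is a hypothetical in-memory helper written for illustration; a real deployment would more likely use a shared store and would need to decide which requests are safe to cache at all.

```python
import time
from typing import Any, Callable, Hashable


class TTLCache:
    """Minimal in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: dict[Hashable, tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]          # fresh entry: skip the expensive call
        value = compute()          # missing or expired: recompute and store
        self._store[key] = (now, value)
        return value


# 24-hour TTL, the low end of the range suggested above.
cache = TTLCache(ttl_seconds=24 * 3600)
answer = cache.get_or_compute("q:market-summary", lambda: "expensive result")
```

The key should capture everything that affects the response (prompt, model tier, and any parameters); otherwise the cache can return a result computed for a different request.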

Batch Processing: Consolidate similar tasks and process them in batches during off-peak hours. Batching can qualify for discounted rates, smooth API usage patterns, reduce orchestration overhead, and enable better resource planning. Financial firms report 30-40% cost savings through intelligent batching.
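Consolidating similar tasks usually starts with grouping a queue by task type and cutting each group into bounded batches. The sketch below assumes each task is a dictionary with a `kind` field, which is a made-up schema for illustration; how the resulting batches are submitted is left out.

```python
from collections import defaultdict
from typing import Iterable


def make_batches(tasks: Iterable[dict], max_batch_size: int) -> list[list[dict]]:
    """Group tasks by their 'kind' field, then split each group into bounded batches."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    groups: dict[str, list[dict]] = defaultdict(list)
    for task in tasks:
        groups[task["kind"]].append(task)
    batches = []
    for kind_tasks in groups.values():
        for start in range(0, len(kind_tasks), max_batch_size):
            batches.append(kind_tasks[start:start + max_batch_size])
    return batches


# Hypothetical queue: five summarization tasks and one classification task.
queue = [{"kind": "summarize", "id": i} for i in range(5)] + [{"kind": "classify", "id": 99}]
for batch in make_batches(queue, max_batch_size=2):
    print(batch[0]["kind"], [t["id"] for t in batch])
```

Keeping batches homogeneous by kind means every task in a batch can share the same instructions and model tier, which is where most of the overhead savings come from.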

Platform Comparison

When implementing multi-agent systems, platform choice significantly impacts costs and capabilities:

Direct Anthropic API: Offers maximum flexibility and latest features but requires significant infrastructure investment. Best for organizations with strong technical teams and specific requirements. Costs include full API rates plus infrastructure.

Managed Platforms (laozhang.ai): Provide integrated orchestration, monitoring, and optimization tools. These platforms typically offer 20-30% cost savings through pooled API access, built-in optimization features, simplified integration, and professional support. For many organizations, the reduced complexity justifies platform fees.

Hybrid Approaches: Some enterprises combine direct API access for critical workflows with managed platforms for routine tasks. This approach balances control with convenience, enabling gradual migration and risk mitigation.

Future Developments and Roadmap

Emerging Capabilities

The Claude subagent ecosystem continues evolving rapidly with several exciting developments on the horizon:

Autonomous Agent Networks: Future versions will support fully autonomous agent networks that self-organize based on task requirements. Agents will dynamically form teams, elect coordinators, and redistribute work without human orchestration. Early prototypes show 50% efficiency improvements over manually configured hierarchies.

Cross-Model Collaboration: Upcoming releases will enable seamless collaboration between Claude agents and other AI models. Imagine Claude orchestrators coordinating with Stable Diffusion for image generation, GPT-4 for specific language tasks, and specialized models for domain-specific analysis. This best-of-breed approach maximizes capabilities while optimizing costs.

Persistent Agent Memory: Long-term memory systems will allow agents to learn from past interactions, building expertise over time. Agents will remember successful strategies, recognize recurring patterns, and maintain context across sessions. This persistence transforms agents from stateless workers to learning entities.

Industry-Specific Applications

Vertical-specific agent configurations are emerging across industries:

Healthcare and Life Sciences: Specialized agents for clinical trial analysis, drug interaction checking, and regulatory compliance monitoring. These agents incorporate domain-specific knowledge and safety constraints critical for healthcare applications.

Financial Services: Advanced agents for real-time market analysis, regulatory reporting, and risk modeling. Integration with Bloomberg terminals, regulatory databases, and trading systems enables comprehensive financial intelligence.

Legal and Compliance: Document analysis agents that understand legal language, track regulatory changes, and ensure compliance across jurisdictions. These agents reduce legal research time by 80% while improving accuracy.

Integration Ecosystem

The growing ecosystem around Claude subagents includes:

Developer Tools: New IDEs and frameworks specifically designed for multi-agent development. Visual orchestration designers, debugging tools for distributed agent systems, and performance profilers help developers build sophisticated agent networks.

Pre-built Agent Libraries: Communities are sharing specialized agent configurations for common tasks. From code review agents to financial analysis specialists, these pre-built components accelerate development. Organizations can customize shared agents for their specific needs.

Enterprise Connectors: Native integrations with enterprise systems like Salesforce, SAP, and Microsoft 365 simplify deployment. These connectors handle authentication, data mapping, and workflow integration, reducing implementation time from months to weeks.

Best Practices and Recommendations

Getting Started Guide

Organizations beginning their multi-agent journey should follow a structured approach:

Phase 1: Pilot Project (2-4 weeks)

- Identify a specific, bounded use case with clear success metrics

- Start with 2-3 agents for manageable complexity

- Use managed platforms like laozhang.ai to minimize infrastructure requirements

- Focus on learning and iteration over production readiness

Phase 2: Production Prototype (4-8 weeks)

- Expand to 5-10 agents with hierarchical organization

- Implement proper monitoring and error handling

- Develop clear documentation and runbooks

- Measure ROI and gather stakeholder feedback

Phase 3: Scale Deployment (2-6 months)

- Build robust orchestration infrastructure

- Implement security and compliance controls

- Optimize for cost and performance

- Train teams and establish best practices

Common Pitfalls to Avoid

Learning from others' mistakes accelerates success:

Over-Engineering Initial Deployments: Starting with complex hierarchies and dozens of agents often leads to failure. Begin simple and evolve based on actual needs. One retailer wasted 6 months building a 50-agent system when 5 agents would have sufficed.

Ignoring Context Management: Poor context management degrades performance and increases costs. Implement proper context windowing, compression, and overflow handling from the start. Failed context management can increase token usage by 300%.
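A minimal form of context windowing with overflow handling keeps the most recent messages that fit a token budget and replaces everything older with a summary. The sketch below makes two loud assumptions: tokens are approximated as whitespace-separated words, which is only a rough proxy for a real tokenizer, and the `summarize` callback is a placeholder for whatever compression step a system actually uses.

```python
from typing import Callable, Optional


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly one token per whitespace-separated word.
    return len(text.split())


def fit_context(messages: list[str], budget: int,
                summarize: Optional[Callable[[list[str]], str]] = None) -> list[str]:
    """Keep the newest messages that fit in budget; optionally summarize the overflow."""
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):           # walk from newest to oldest
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()                           # restore chronological order
    overflow = messages[: len(messages) - len(kept)]
    if overflow and summarize is not None:
        # Note: the summary itself is not counted against the budget in this sketch.
        kept.insert(0, summarize(overflow))
    return kept


history = ["first long planning message here", "tool result with details", "latest user question"]
print(fit_context(history, budget=7, summarize=lambda old: f"[summary of {len(old)} earlier messages]"))
```

Applying a step like this before every subagent call keeps token usage bounded as a conversation grows, instead of discovering the overflow only when a request fails.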

Insufficient Monitoring: Flying blind leads to runaway costs and poor performance. Implement comprehensive monitoring before scaling. Organizations often discover optimization opportunities that reduce costs by 50% once proper monitoring is in place.

Treating Agents as Humans: Agents excel at specific tasks but lack human judgment. Design systems that leverage agent strengths while maintaining human oversight for critical decisions. The most successful deployments augment human intelligence rather than replacing it.

Success Metrics

Define clear metrics to measure multi-agent system success:

Operational Metrics: Track task completion rates, average processing time, error rates and retry frequency, resource utilization, and cost per task. These metrics enable continuous optimization.
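These operational metrics fall out directly from per-task records. The following sketch assumes a hypothetical `TaskRecord` shape; the field names are illustrative and would map onto whatever a monitoring system actually logs.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    succeeded: bool
    seconds: float      # wall-clock processing time
    retries: int
    cost_usd: float


def operational_summary(records: list[TaskRecord]) -> dict[str, float]:
    """Compute completion rate, average time, retry frequency, and cost per task."""
    if not records:
        raise ValueError("no records to summarize")
    n = len(records)
    return {
        "completion_rate": sum(r.succeeded for r in records) / n,
        "avg_seconds": sum(r.seconds for r in records) / n,
        "retries_per_task": sum(r.retries for r in records) / n,
        "cost_per_task": sum(r.cost_usd for r in records) / n,
    }


# Two made-up records, purely to show the shape of the output.
sample = [TaskRecord(True, 10.0, 0, 2.0), TaskRecord(False, 30.0, 2, 4.0)]
print(operational_summary(sample))
```

Tracking these per agent tier as well as in aggregate makes it easier to see whether cost is being driven by the orchestrator or by the subagents.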

Business Metrics: Measure time saved versus manual processes, quality improvements in output, revenue impact from faster decisions, and customer satisfaction changes. Connect agent performance to business outcomes.

Strategic Metrics: Evaluate competitive advantage gained, new capabilities enabled, innovation velocity increases, and market responsiveness improvements. Multi-agent systems often enable previously impossible capabilities.

Conclusion

Claude subagents represent a fundamental shift in how we architect AI systems. Moving from monolithic models to specialized, collaborative agents unlocks performance gains that seemed impossible just months ago. The 90.2% improvement in complex research tasks is just the beginning—organizations deploying multi-agent systems report transformative impacts across operations.

The orchestrator-worker pattern, with its parallel processing and clean context management, solves core limitations that have constrained AI applications. Whether analyzing financial markets, conducting enterprise research, or automating complex workflows, multi-agent architectures deliver superior results at lower costs.

Success requires thoughtful implementation. Start with clear use cases, implement proper monitoring, and optimize systematically. Avoid over-engineering and maintain focus on business value. The organizations mastering multi-agent systems today will have significant competitive advantages tomorrow.

The future promises even greater capabilities. Autonomous agent networks, cross-model collaboration, and persistent memory will transform these systems from tools to true AI colleagues. The ecosystem growing around Claude subagents—from developer tools to pre-built libraries—accelerates adoption and innovation.

Last Updated: July 30, 2025

Next Update: August 30, 2025

This guide reflects the latest developments in Claude subagent technology. Bookmark for regular updates as this rapidly evolving field continues to advance.