How to Set Up Custom API Keys in Cursor: Complete Guide for 2025

A comprehensive guide to configuring custom API keys in Cursor IDE for OpenAI, Anthropic, Google, and Azure. Take control of your AI usage with step-by-step instructions for unlimited coding assistance.

Cursor has revolutionized coding with its powerful AI integration, but to truly maximize its potential, you need to understand how to configure custom API keys. This comprehensive guide, updated for 2025, walks you through setting up API keys for all supported providers: OpenAI, Anthropic, Google, and Azure.

🔥 2025 Update: This guide contains the latest configuration methods tested in March 2025, including support for the newest models like Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5 Pro!

As of 2025, Cursor allows you to integrate your own API keys for major AI providers, giving you unlimited access to AI assistance at your own cost. This approach offers several advantages, including higher rate limits, cost control, and the ability to use specialized or custom models not available through Cursor's built-in service.

Why Use Custom API Keys in Cursor?

Before diving into the setup process, let's understand the key benefits of using your own API keys:

1. Cost Control and Transparency

When you use your own API keys, all charges appear directly on your provider account, giving you complete visibility and control over your expenditure. You can set usage limits, monitor costs in real-time, and allocate resources more efficiently.

2. Higher Rate Limits

Cursor's built-in service has limitations to ensure fair usage across all users. With custom API keys, you're only bound by your provider's rate limits, which can be significantly higher, especially with paid tiers.

3. Model Flexibility

Using custom API keys unlocks access to specific model configurations, fine-tuned models, or specialized variants that might not be available through Cursor's standard offering.

4. Privacy and Security

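For organizations with strict data policies, using your own API keys adds a layer of control: prompts are processed under your own account and agreement with the AI provider, which can help you comply with internal security requirements. Requests are still routed through Cursor's backend, however (see the next section).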

How Cursor Handles Custom API Keys

Before configuring your keys, it's important to understand how Cursor handles them:

- Your API keys are used to authenticate requests to the respective AI providers

- Keys are sent to Cursor's server with each request but are not permanently stored

- Cursor's backend handles prompt construction and optimization for coding tasks

- You are billed directly by the AI provider based on your usage

- Some specialized features like Tab Completion require specific models and may not work with custom keys

Now, let's dive into the step-by-step setup process for each provider.

Setting Up OpenAI API Keys in Cursor

OpenAI's models, including GPT-4o, GPT-3.5-Turbo, and the newer o-series models, remain among the most popular choices for AI coding assistance.

Step 1: Obtain an OpenAI API Key

- Visit OpenAI's Platform website (platform.openai.com)

- Log in or create an account if you don't have one

- Navigate to the API section in your account

- Click on "Create new secret key"

- Enter a descriptive name for your key (e.g., "Cursor Integration")

- Copy the key immediately (OpenAI will only show it once)

⚠️ Important: Keep your API key secure. Treat it like a password and never share it publicly or include it in repositories.

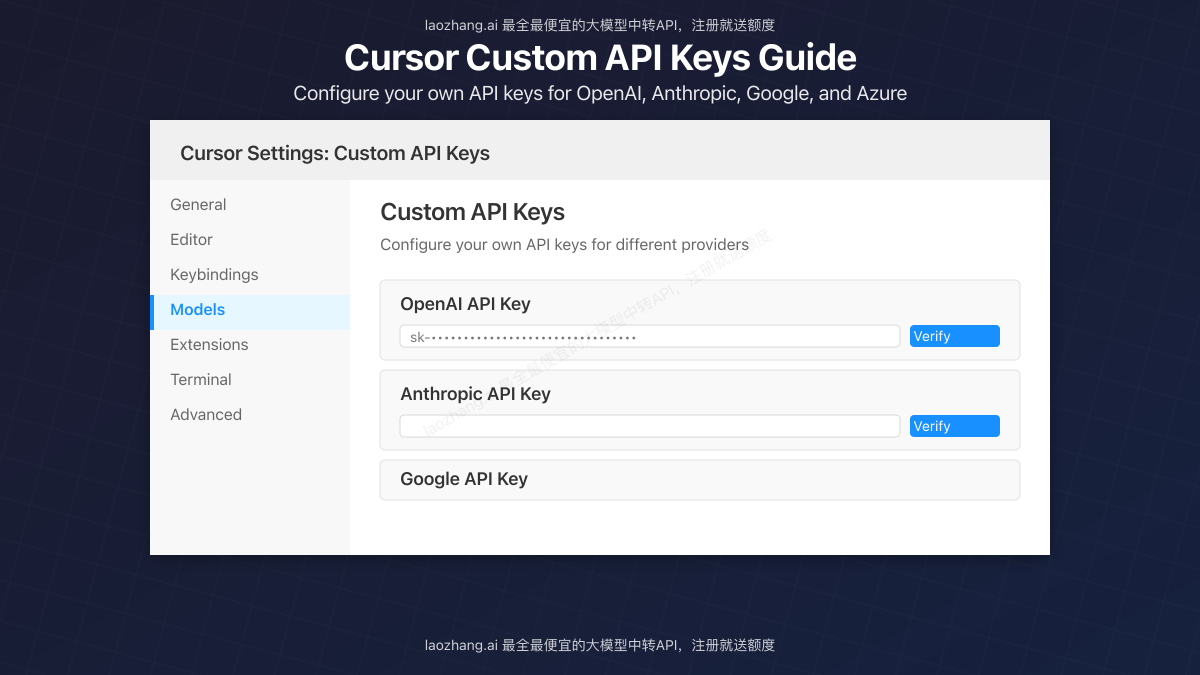

Step 2: Configure the Key in Cursor

- Open Cursor and click on the Settings icon (⚙️) in the bottom left corner

- Select "Models" from the settings menu

- Scroll down to the "OpenAI API Keys" section

- Paste your API key into the field

- Click the "Verify" button to validate the key

- If verification succeeds, you'll see a confirmation message
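If the Verify button fails and the cause isn't obvious, testing the key directly against OpenAI's API can separate key problems from Cursor problems. The sketch below assumes the key is exported as `OPENAI_API_KEY` and uses a hypothetical helper name, `openai_key_status`; it calls OpenAI's `GET /v1/models` endpoint and prints only the HTTP status code. An optional first argument lets you point it at an overridden base URL.

```bash
#!/usr/bin/env bash
# Sketch: check an OpenAI API key outside Cursor by listing models.
# Assumes the key is exported as OPENAI_API_KEY; the helper name is hypothetical.

openai_key_status() {
  # Optional first argument: an overridden base URL (defaults to OpenAI's).
  local base_url="${1:-https://api.openai.com/v1}"
  if [ -z "${OPENAI_API_KEY:-}" ]; then
    echo "OPENAI_API_KEY is not set" >&2
    return 2
  fi
  # -s: silent, -o /dev/null: discard the body, -w: print only the status code.
  curl -s -o /dev/null -w '%{http_code}\n' \
    -H "Authorization: Bearer ${OPENAI_API_KEY}" \
    "${base_url%/}/models"
}

# Usage (makes a network call):
#   export OPENAI_API_KEY="..."
#   openai_key_status
```

A `200` generally means the key was accepted and a `401` that it was rejected; other codes, such as `429`, point to rate-limit or quota issues on the account rather than a bad key.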

Step 3: Select Models to Use

- In the same Models settings section, review the list of available OpenAI models

- Check the ones you want to use with your custom API key

- You may need to restart Cursor for changes to fully take effect

For advanced usage, Cursor also allows you to override the OpenAI base URL, which is particularly useful for proxy services or enterprise deployments. To do this:

- Toggle on "Override OpenAI Base URL (when using key)"

- Enter your custom base URL

- Click Save

Setting Up Anthropic API Keys

Anthropic's Claude models offer excellent coding capabilities and are known for thoughtful responses and long context windows. Here's how to set them up:

Step 1: Obtain an Anthropic API Key

- Visit the Anthropic Console (console.anthropic.com)

- Sign up for an account or log in

- Navigate to the API section

- Generate a new API key

- Copy the key to a secure location

Step 2: Configure the Key in Cursor

- Open Cursor's Settings and navigate to Models

- Scroll to the "Anthropic API Keys" section

- Paste your Anthropic API key

- Click "Verify" to validate

- Once verified, you'll see success confirmation
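For Anthropic, a minimal sketch of an equivalent check is to send a tiny request to the Messages API (`POST /v1/messages`), which expects `x-api-key` and `anthropic-version` headers. The helper names, the `ANTHROPIC_API_KEY` variable, and the default model ID are illustrative assumptions; the request is billable, though the small `max_tokens` value keeps it to a handful of tokens.

```bash
#!/usr/bin/env bash
# Sketch: confirm an Anthropic key works with a minimal Messages API call.
# Assumes ANTHROPIC_API_KEY is exported; helper names and model ID are examples.

# Build a tiny request body; an empty or missing argument uses the default model.
anthropic_ping_payload() {
  local model="${1:-claude-3-5-sonnet-20241022}"
  printf '{"model": "%s", "max_tokens": 8, "messages": [{"role": "user", "content": "ping"}]}' "$model"
}

anthropic_key_status() {
  if [ -z "${ANTHROPIC_API_KEY:-}" ]; then
    echo "ANTHROPIC_API_KEY is not set" >&2
    return 2
  fi
  # Print only the HTTP status code of the response.
  curl -s -o /dev/null -w '%{http_code}\n' https://api.anthropic.com/v1/messages \
    -H "x-api-key: ${ANTHROPIC_API_KEY}" \
    -H "anthropic-version: 2023-06-01" \
    -H "content-type: application/json" \
    -d "$(anthropic_ping_payload "${1:-}")"
}

# Usage (makes a billable network call):
#   anthropic_key_status claude-3-haiku-20240307
```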

Step 3: Enable Claude Models

- In the Models list, find the Claude models you want to use

- Check the boxes next to them to enable

- Claude 3.5 Sonnet and Claude 3 Opus are recommended for coding tasks due to their enhanced reasoning abilities

Setting Up Google AI (Gemini) API Keys

Google's Gemini models provide another powerful option for AI-assisted coding, with impressive performance on technical tasks.

Step 1: Get a Google AI Studio API Key

- Visit Google AI Studio (aistudio.google.com)

- Sign in with your Google account

- Click "Get API key"

- Create a new API key

- Copy and save the key securely

Step 2: Add the Key to Cursor

- In Cursor's Models settings, find the "Google API Keys" section

- Paste your Google API key

- Click "Verify" to validate the key

- Wait for the confirmation message
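To confirm which Gemini models a key can reach (for example, that Gemini 1.5 Pro and 1.5 Flash appear), you can query the Gemini API's model list. A minimal sketch, assuming the key is exported as `GEMINI_API_KEY` and that `grep` and `sed` are available; the function names are hypothetical, and only the first page of results is read.

```bash
#!/usr/bin/env bash
# Sketch: list the Gemini models a Google AI Studio key can access.
# Assumes GEMINI_API_KEY is exported; function names are hypothetical.

# Extract "models/..." names from a ListModels JSON response on stdin.
gemini_model_names() {
  grep -o '"name": *"models/[^"]*"' | sed 's/^"name": *"//; s/"$//'
}

gemini_list_models() {
  if [ -z "${GEMINI_API_KEY:-}" ]; then
    echo "GEMINI_API_KEY is not set" >&2
    return 2
  fi
  curl -s -H "x-goog-api-key: ${GEMINI_API_KEY}" \
    "https://generativelanguage.googleapis.com/v1beta/models" | gemini_model_names
}

# Usage (makes a network call):
#   gemini_list_models | grep gemini-1.5
```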

Step 3: Select Gemini Models

- In the Models list, locate the Gemini models (1.5 Pro, 1.5 Flash, etc.)

- Check the boxes next to the models you want to use

- Gemini 1.5 Pro is recommended for complex coding tasks, while Gemini 1.5 Flash offers faster responses

Setting Up Azure OpenAI Integration

For enterprise users, Azure OpenAI provides advanced security, compliance features, and dedicated resources.

Step 1: Deploy Azure OpenAI

- Sign in to the Azure Portal

- Create or access your Azure OpenAI resource

- Deploy the models you want to use

- Note your API key, endpoint URL, and deployed model names

Step 2: Configure Azure in Cursor

- In Cursor's Models settings, navigate to the "Azure Integration" section

- Enter your Azure API key

- Add your Azure OpenAI endpoint URL

- Configure any additional Azure-specific settings

- Click "Verify" to test the connection

Step 3: Map Azure Models

- For each model you want to use, you may need to map Cursor's model names to your Azure deployments

- This is done through the advanced configuration options in the Azure section

- Save your changes and restart Cursor
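The mapping step matters because Azure routes requests by deployment name rather than model name: the name you gave the deployment in the Azure Portal is what appears in the request URL. A minimal sketch of how that URL is assembled, with placeholder resource and deployment names and `2024-02-01` as an example `api-version` (check which versions your resource supports); the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: build the Azure OpenAI chat-completions URL for one deployment.
# Resource/deployment names are placeholders; the api-version default is an example.

azure_chat_url() {
  local resource="$1" deployment="$2" api_version="${3:-2024-02-01}"
  printf 'https://%s.openai.azure.com/openai/deployments/%s/chat/completions?api-version=%s\n' \
    "$resource" "$deployment" "$api_version"
}

# Usage (makes a network call). Azure authenticates with an "api-key" header,
# and the deployment in the URL selects the model, so the body omits "model":
#   curl -s "$(azure_chat_url my-resource my-gpt4o-deployment)" \
#     -H "api-key: ${AZURE_OPENAI_API_KEY}" \
#     -H "Content-Type: application/json" \
#     -d '{"messages": [{"role": "user", "content": "ping"}], "max_tokens": 8}'
```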

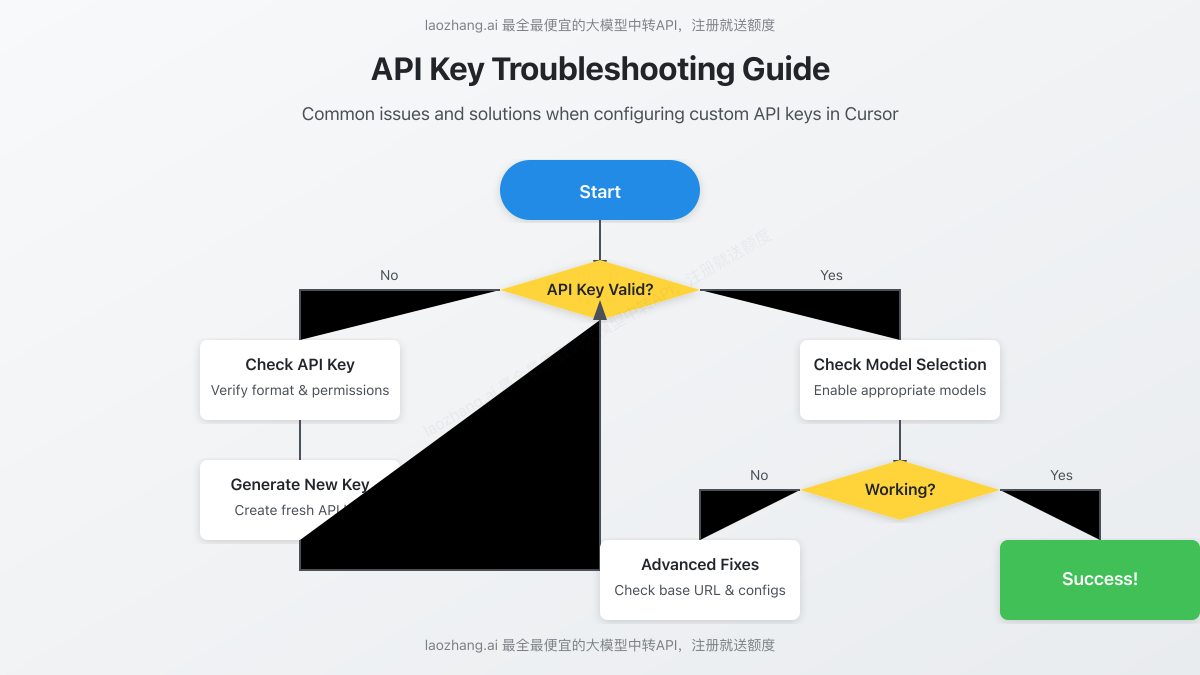

Troubleshooting API Key Issues

API key setup doesn't always go smoothly. Here are solutions to common issues:

Verification Failures

If your API key fails to verify:

- Check for typos: Ensure you've copied the full key correctly, with no extra spaces

- Verify account status: Confirm you have sufficient credits or billing set up with the provider

- Check rate limits: Temporary verification failures can occur if you've hit rate limits

- Proxy or VPN issues: Some networks may block API calls; try a different connection

- Model selection: Ensure you have at least one model enabled for the provider
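Copy-paste mistakes are easy to check locally before retrying verification. This sketch strips stray whitespace and compares the key against commonly observed prefixes (`sk-` for OpenAI, `sk-ant-` for Anthropic, `AIza` for Google API keys). The prefixes are conventions rather than guarantees and the helper names are hypothetical, so treat a mismatch as a hint to re-copy the key, not as proof that it is invalid.

```bash
#!/usr/bin/env bash
# Sketch: catch common copy-paste problems in an API key.
# Prefix rules are heuristics based on common key formats, not official specs.

# Remove spaces, tabs, and newlines picked up while copying.
clean_key() {
  printf '%s' "$1" | tr -d '[:space:]'
}

# Exit 0 if the cleaned key matches the usual prefix for the provider.
key_looks_like() {
  local provider="$1" key
  key="$(clean_key "$2")"
  case "$provider:$key" in
    anthropic:sk-ant-*) return 0 ;;
    openai:sk-ant-*)    return 1 ;;  # an Anthropic key in the OpenAI field
    openai:sk-*)        return 0 ;;
    google:AIza*)       return 0 ;;
    anthropic:*|openai:*|google:*) return 1 ;;
    *) echo "unknown provider: $provider" >&2; return 2 ;;
  esac
}

# Usage:
#   key_looks_like openai "$OPENAI_API_KEY" || echo "re-copy the key"
```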

"Default Model" Error

Some users encounter an error related to the "default" model when verifying keys. To resolve this:

- Ensure you have checked at least one model in the provider's model list

- For custom endpoint integrations, specify the exact model name in the advanced settings

- If the issue persists, try adding a new custom model name that matches your deployed model

Base URL Configuration Issues

When using custom base URLs:

- Ensure the URL format is correct and includes the protocol (https://)

- Check that the URL points to the correct API version endpoint

- Verify your API key has access to the custom endpoint

- Some proxy services may require additional headers or parameters
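The first two checks above can be automated. A minimal sketch with a hypothetical `normalize_base_url` helper: it trims whitespace and trailing slashes, rejects URLs without `https://`, and warns (without failing) when the path does not end in `/v1`. That last check is an assumption drawn from the OpenAI-compatible URLs used in this guide, not a universal rule.

```bash
#!/usr/bin/env bash
# Sketch: tidy and sanity-check a custom base URL before pasting it into Cursor.

normalize_base_url() {
  local url
  # Remove whitespace picked up while copying.
  url="$(printf '%s' "$1" | tr -d '[:space:]')"
  # Strip any trailing slashes.
  while :; do
    case "$url" in
      */) url="${url%/}" ;;
      *) break ;;
    esac
  done
  case "$url" in
    https://*) ;;
    *) echo "base URL must start with https://: $url" >&2; return 1 ;;
  esac
  # Warn only: many OpenAI-compatible endpoints end in /v1, but not all.
  case "$url" in
    */v1) ;;
    *) echo "warning: $url does not end in /v1; check the provider's docs" >&2 ;;
  esac
  printf '%s\n' "$url"
}

# Usage:
#   normalize_base_url " https://openrouter.ai/api/v1/ "
```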

Advanced Configuration Tips

For power users, here are some advanced configurations to enhance your experience:

Using OpenRouter Integration

OpenRouter allows you to access multiple AI providers through a single API key:

- Get an OpenRouter API key

- Configure it as an OpenAI key in Cursor

- Set the base URL to https://openrouter.ai/api/v1

- Add custom models that match OpenRouter's model IDs
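OpenRouter model IDs are namespaced by provider (for example, `anthropic/claude-3.5-sonnet`), so the custom model names you add in Cursor should use that form. To test an ID outside Cursor, a sketch like the following builds a chat-completions request body; it assumes the key is in `OPENROUTER_API_KEY`, the helper names are hypothetical, and the minimal JSON escaping only handles single-line prompts.

```bash
#!/usr/bin/env bash
# Sketch: build an OpenRouter chat-completions body for a namespaced model ID.
# Helper names are hypothetical; OPENROUTER_API_KEY is an assumed variable name.

# Minimal JSON escaping for a single-line string: backslashes first, then quotes.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

openrouter_payload() {
  local model="$1" prompt
  prompt="$(json_escape "$2")"
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}' \
    "$model" "$prompt"
}

# Usage (makes a network call):
#   curl -s https://openrouter.ai/api/v1/chat/completions \
#     -H "Authorization: Bearer ${OPENROUTER_API_KEY}" \
#     -H "Content-Type: application/json" \
#     -d "$(openrouter_payload anthropic/claude-3.5-sonnet 'Explain this regex')"
```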

Rate Limit Optimization

To avoid hitting rate limits during intensive coding sessions:

- Configure multiple API keys from the same provider

- Rotate between them using different profiles in Cursor

- Consider using a provider's enterprise tier for higher limits

- Use models with lower token costs for routine tasks
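Key rotation is one approach. A complementary, widely used technique for scripts that call the same provider APIs outside Cursor is to retry with exponential backoff when the provider answers HTTP `429 Too Many Requests`. A minimal sketch with a hypothetical `with_backoff` wrapper; it assumes the wrapped command prints an HTTP status code and uses whole-second delays.

```bash
#!/usr/bin/env bash
# Sketch: retry a command while it reports HTTP 429, doubling the delay each time.
# The wrapped command must print an HTTP status code on stdout.

with_backoff() {
  local max_attempts="$1" delay="$2" attempt=1 status
  shift 2
  while :; do
    status="$("$@")"
    # Stop on any non-429 status, or when the attempts are used up.
    if [ "$status" != "429" ] || [ "$attempt" -ge "$max_attempts" ]; then
      printf '%s\n' "$status"
      return 0
    fi
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
}

# Usage (makes network calls): up to 4 attempts, waiting 1s, 2s, then 4s.
#   with_backoff 4 1 curl -s -o /dev/null -w '%{http_code}' \
#     -H "Authorization: Bearer ${OPENAI_API_KEY}" https://api.openai.com/v1/models
```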

Secure Key Management

For team environments:

- Consider using environment variables rather than directly entering keys

- Rotate API keys regularly

- Assign dedicated keys for different projects or team members

- Monitor usage patterns to detect any unusual activity
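Cursor's settings take the key directly, but for scripts and team tooling that reuse the same keys, environment variables keep them out of source files. A minimal sketch, assuming a local file such as `~/.config/ai-keys.env` (a hypothetical path) containing `NAME=value` lines; it also includes a helper that masks a key so only its first and last four characters appear in logs or screenshots. Both helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: load API keys from a private env file and mask them for display.
# Keep the file out of version control (e.g. .gitignore) and chmod 600 it.

load_keys() {
  local file="${1:-$HOME/.config/ai-keys.env}"
  if [ ! -f "$file" ]; then
    echo "key file not found: $file" >&2
    return 1
  fi
  # Sourcing executes the file as shell code, so only load files you control.
  # 'set -a' exports every variable the file assigns.
  set -a
  . "$file"
  set +a
}

# Show only the first and last four characters of a key.
mask_key() {
  local key="$1"
  if [ "${#key}" -le 8 ]; then
    printf '****\n'
  else
    printf '%s...%s\n' "$(printf '%s' "$key" | cut -c1-4)" "$(printf '%s' "$key" | tail -c 4)"
  fi
}

# Usage:
#   load_keys && echo "Using OpenAI key $(mask_key "$OPENAI_API_KEY")"
```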

Cost Management Strategies

Using custom API keys means paying for your own usage. Here are strategies to keep costs manageable:

1. Model Selection Based on Task Complexity

Not every coding task requires the most powerful model:

- Use GPT-3.5-Turbo or Gemini 1.5 Flash for simpler tasks and documentation

- Reserve GPT-4o or Claude 3 Opus for complex problem-solving and architecture design

- Consider o3-mini or Claude 3 Haiku for routine code completion and simple explanations
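If you also call these models from scripts, the same tiering can be encoded once. A sketch with a hypothetical `pick_model` helper whose defaults mirror the list above; each tier can be overridden through an environment variable (the variable names are illustrative), so switching models later doesn't require editing code. Inside Cursor itself, the choice is still made in the Models settings.

```bash
#!/usr/bin/env bash
# Sketch: map a task tier to a model name, with per-tier env overrides.
# Tier names, variable names, and defaults are illustrative assumptions.

pick_model() {
  case "$1" in
    simple)  echo "${SIMPLE_MODEL:-gpt-3.5-turbo}" ;;
    routine) echo "${ROUTINE_MODEL:-o3-mini}" ;;
    complex) echo "${COMPLEX_MODEL:-gpt-4o}" ;;
    *) echo "unknown task tier: $1" >&2; return 1 ;;
  esac
}

# Usage:
#   model="$(pick_model complex)"
#   SIMPLE_MODEL=gemini-1.5-flash pick_model simple
```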

2. Set Up Usage Alerts

Configure spending alerts with your API provider:

- Set monthly budget caps

- Create email notifications when approaching limits

- For OpenAI, use their usage limits feature to automatically cut off access when thresholds are reached
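Provider dashboards handle the actual alerts, but the arithmetic behind a budget is simple to sanity-check. A sketch with hypothetical helpers: `estimate_cost` turns input and output token counts into dollars from per-million-token prices (the prices you pass in are placeholders; use your provider's current pricing page), and `over_threshold` succeeds once spending reaches a chosen percentage of the budget.

```bash
#!/usr/bin/env bash
# Sketch: estimate spend from token counts and compare it to a budget.
# Helper names are hypothetical; prices are whatever you pass in.

# Usage: estimate_cost INPUT_TOKENS OUTPUT_TOKENS PRICE_IN_PER_1M PRICE_OUT_PER_1M
estimate_cost() {
  awk -v tin="$1" -v tout="$2" -v pin="$3" -v pout="$4" \
    'BEGIN { printf "%.2f\n", (tin * pin + tout * pout) / 1000000 }'
}

# Succeeds (exit 0) once SPENT reaches PCT percent of BUDGET (default 80%).
over_threshold() {
  awk -v spent="$1" -v budget="$2" -v pct="${3:-80}" \
    'BEGIN { exit !(spent >= budget * pct / 100) }'
}

# Usage:
#   over_threshold 85 100 && echo "85% of the monthly budget used"
```

For example, `estimate_cost 1000000 500000 2.50 10.00` computes (1,000,000 × 2.50 + 500,000 × 10.00) / 1,000,000 and prints `7.50`.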

3. Batch Similar Requests

To maximize efficiency:

- Collect similar questions or tasks before engaging the AI

- Craft comprehensive prompts that address multiple issues at once

- This reduces the number of API calls and token usage

API Provider Comparisons for Cursor

Each provider has strengths and weaknesses when used with Cursor:

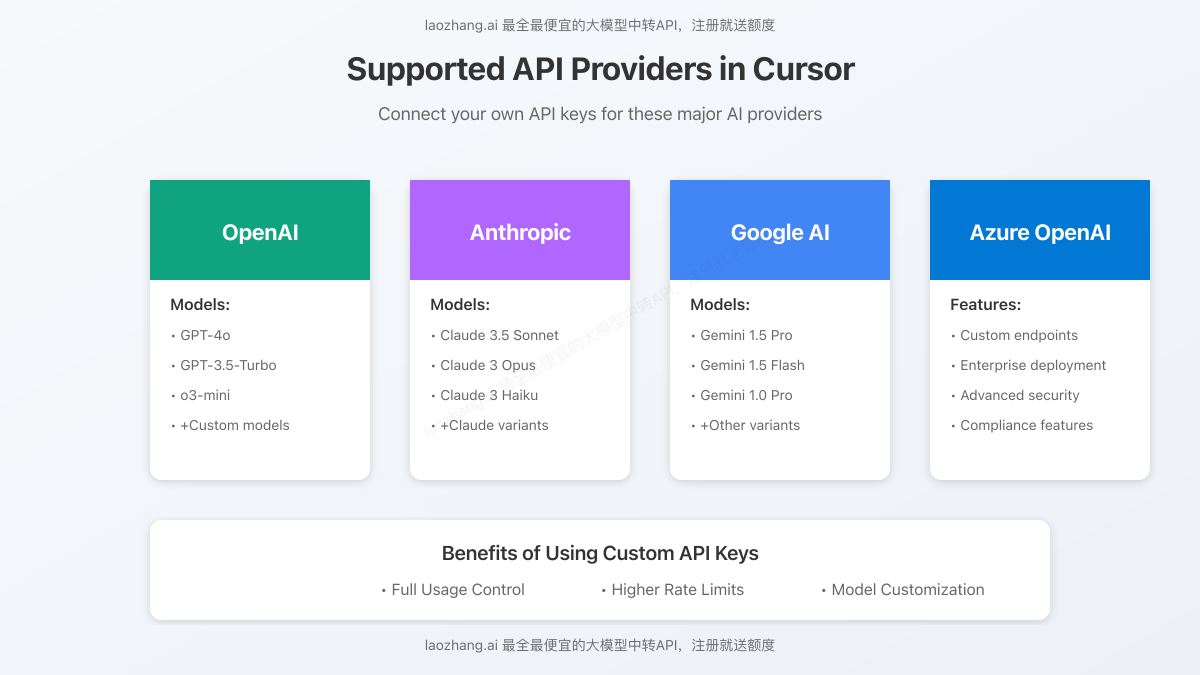

OpenAI

Strengths:

- Excellent for complex coding problems

- Strong at debugging and error fixing

- Wide model variety from economical to cutting-edge

Considerations:

- Higher costs for top-tier models

- Rate limits can be restrictive on free tier

Anthropic (Claude)

Strengths:

- Exceptional at understanding and explaining complex code

- Very long context window (up to 200K tokens)

- Natural, detailed explanations

Considerations:

- Fewer model options than OpenAI

- Sometimes more verbose in responses

Google (Gemini)

Strengths:

- Competitive pricing

- Strong multilingual code generation

- Good integration with Google ecosystem

Considerations:

- Fewer specialized coding models

- Documentation sometimes less comprehensive

Azure OpenAI

Strengths:

- Enterprise-grade security and compliance

- Dedicated capacity options

- Regional deployment for data residency requirements

Considerations:

- More complex setup process

- Dedicated (provisioned) capacity typically requires higher spending commitments

Frequently Asked Questions

Q1: Will my API key be stored or leave my device?

A1: Your API key isn't permanently stored by Cursor, but it is sent to Cursor's server with each request. All requests are routed through Cursor's backend where the final prompt construction happens.

Q2: What custom LLM providers are supported?

A2: Cursor officially supports OpenAI, Anthropic, Google, and Azure. The application also supports API providers that are compatible with the OpenAI API format (like OpenRouter). Custom local LLM setups aren't officially supported.

Q3: Can I use custom models not listed in Cursor?

A3: Yes, you can add custom models by clicking the "+ Add model" button in the Models settings page, though the model must be accessible through one of the supported providers.

Q4: Can I switch between custom and Cursor-provided API keys?

A4: Yes, you can disable your custom API keys at any time to return to using Cursor's built-in service, subject to any plan limitations.

Q5: Do all Cursor features work with custom API keys?

A5: Not all features. Tab Completion requires specialized models and won't work with custom keys. Most other features work as long as you're using compatible models.

Q6: I'm getting "model not found" errors. How do I fix this?

A6: Ensure the model name in Cursor exactly matches what your provider expects. For custom deployments, you may need to add the specific model name via the "+ Add model" option.
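To see the exact model IDs a provider expects, you can list them from an OpenAI-compatible `GET /models` endpoint; OpenAI, and many compatible services, return them under `data[].id`. A minimal sketch with a hypothetical `model_ids` helper that pulls the `id` fields out with `grep` and `sed` rather than a full JSON parser, so it assumes the only `id` fields in the response are model IDs.

```bash
#!/usr/bin/env bash
# Sketch: print model IDs from an OpenAI-compatible GET /models response on stdin.
# Uses grep/sed instead of a JSON parser, so it assumes the only "id" fields are
# the model IDs and that they contain no escaped quotes.

model_ids() {
  grep -o '"id": *"[^"]*"' | sed 's/^"id": *"//; s/"$//'
}

# Usage (makes a network call):
#   curl -s https://api.openai.com/v1/models \
#     -H "Authorization: Bearer ${OPENAI_API_KEY}" | model_ids | sort
```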

Integrating with laozhang.ai API Transit Service

If you're looking for a cost-effective way to access multiple AI models through a single API, consider using laozhang.ai as your API transit service.

Benefits of laozhang.ai for Cursor Integration:

- Access to all major models (OpenAI, Claude, Gemini) through a unified API

- Significantly lower rates compared to direct provider access

- Free starting credits for new registrations

- Simple configuration with Cursor

How to Set Up laozhang.ai with Cursor:

- Register for an account at api.laozhang.ai

- Get your API key from the dashboard

- In Cursor, configure it as an OpenAI key

- Set the base URL to https://api.laozhang.ai/v1

- Enable the models you wish to use

Here's a sample API call to laozhang.ai:

```bash
curl https://api.laozhang.ai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $API_KEY" \
  -d '{
    "model": "gpt-4o",
    "messages": [
      {"role": "system", "content": "You are a helpful coding assistant."},
      {"role": "user", "content": "Write a Python function to find prime numbers."}
    ]
  }'
```

Conclusion: Maximizing Cursor's Potential

Configuring custom API keys in Cursor unlocks the full potential of this revolutionary coding tool. By following this guide, you've learned how to:

- Set up and verify API keys for all major providers

- Select appropriate models for different tasks

- Troubleshoot common issues

- Implement advanced configurations

- Manage costs effectively

The ability to use your own API keys transforms Cursor from a powerful coding assistant into a fully customizable AI development environment tailored to your specific needs and preferences.

For the optimal experience, we recommend starting with a lower-cost model like GPT-3.5-Turbo or Claude 3 Haiku for routine tasks, while reserving more powerful models like GPT-4o or Claude 3.5 Sonnet for complex problems. This balanced approach provides excellent assistance while keeping costs manageable.

💡 Pro Tip: Revisit your API configuration every few months as providers regularly launch new models and update their pricing structures. What's optimal today might not be the best choice in six months.

Whether you're a solo developer looking for unlimited AI assistance or part of a larger team requiring enterprise-grade security and compliance, Cursor's custom API key integration provides the flexibility you need to code smarter, faster, and more efficiently.

Update Log

```plaintext
┌─ Update Record ─────────────────────────┐
│ 2025-03-05: Initial comprehensive guide │
└─────────────────────────────────────────┘
```