Flux Image-to-Image: Complete Guide to AI-Powered Image Transformation (2025)

Master Flux.1 Kontext for powerful image-to-image generation. Learn API integration, pricing, best practices, and how it compares to DALL-E, Midjourney, and Stable Diffusion.

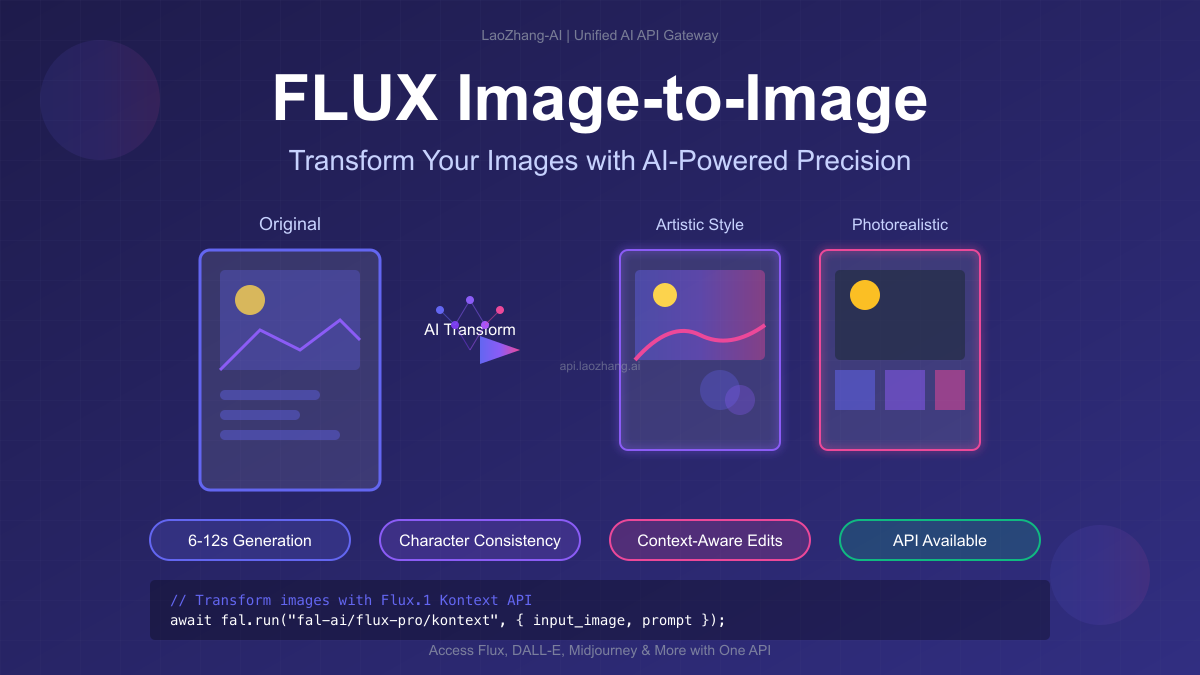

Revolutionary Update: May 2025 - Flux.1 Kontext brings 6-12 second image transformations with unprecedented consistency

Want to transform images with AI while keeping subjects consistent from one edit to the next? Flux.1 Kontext targets exactly that, combining fast processing with context-aware editing. This guide shows how to use Flux's image transformation features in your own projects.

What is Flux Image-to-Image?

Flux.1 Kontext represents a breakthrough in AI-powered image editing. Released in May 2025 by Black Forest Labs (founded by the creators of Stable Diffusion), this suite of models enables context-aware image generation and editing by processing both text prompts and images together.

Key Innovation: Unlike traditional image editors, Flux.1 Kontext understands the relationship between your text instructions and image content, enabling surgical edits while preserving everything else.

The Flux.1 Kontext Family

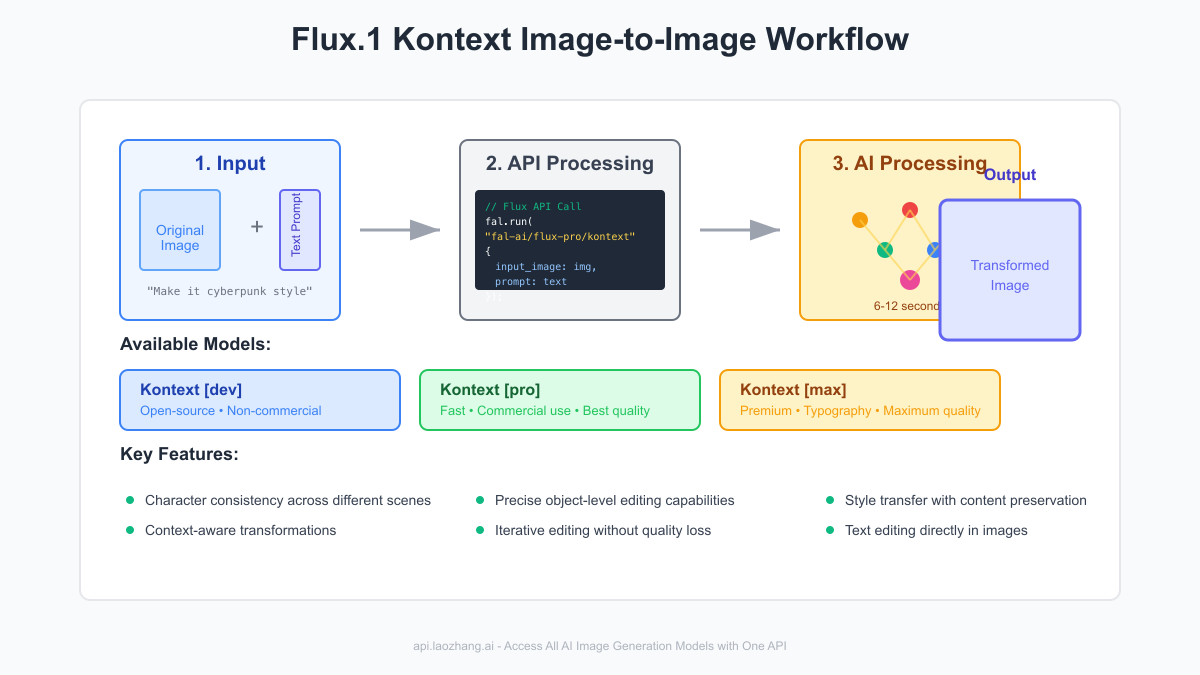

Three powerful variants serve different needs:

- Kontext [dev] - Open-weight model for research and non-commercial use

- Kontext [pro] - Fast commercial model with state-of-the-art quality

- Kontext [max] - Premium model with enhanced typography and maximum performance

Why Choose Flux for Image-to-Image?

1. Lightning-Fast Generation

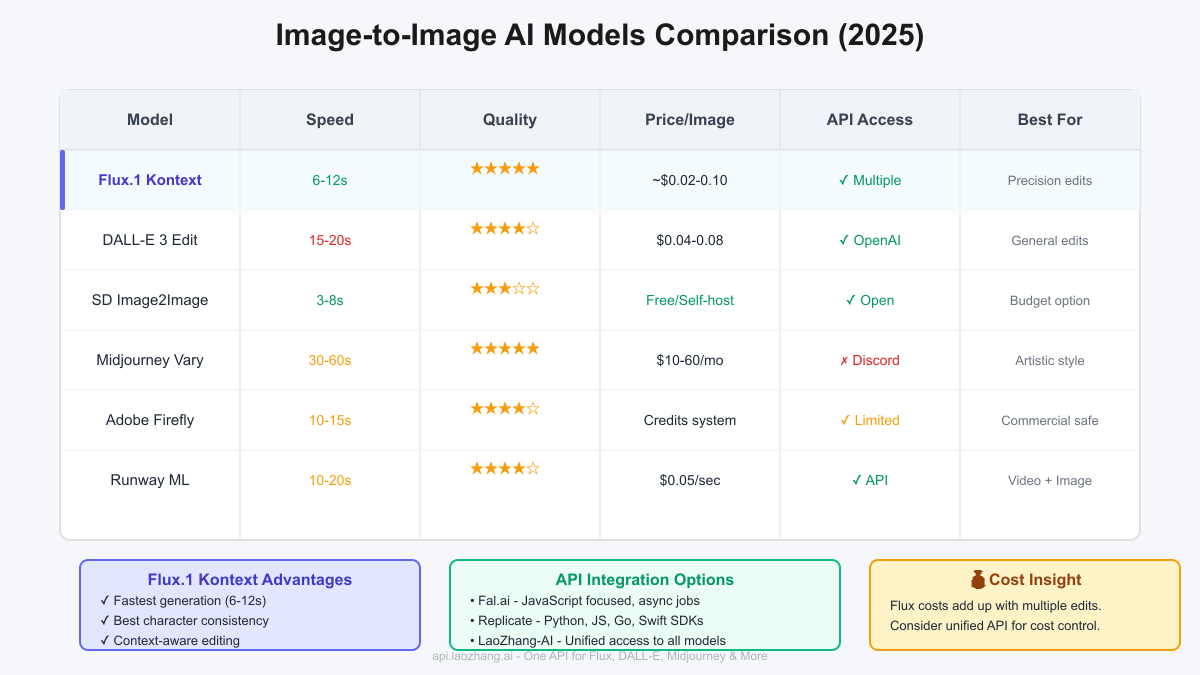

With 6-12 second generation times, Flux.1 Kontext is significantly faster than competitors:

- DALL-E 3 Edit: 15-20 seconds

- Midjourney Vary: 30-60 seconds

- Runway ML: 10-20 seconds

2. Unmatched Character Consistency

Flux excels at maintaining character identity across completely different environments. Take a photo of someone and seamlessly place them:

- As a chef in a restaurant kitchen

- As an astronaut on Mars

- In historical settings

- With different art styles

All while preserving facial features, expressions, and distinctive characteristics perfectly.

3. Context-Aware Transformations

The model truly understands what you're pointing at. You can:

- Take a logo and place it on a sticker

- Apply that sticker to a laptop

- Position the laptop in a coffee shop scene

Each step in this chain keeps the logo consistent with the original.

Getting Started with Flux Image-to-Image

Prerequisites

Before diving in, you'll need:

- An API key from one of the supported platforms (Fal.ai, Replicate, or others)

- Basic programming knowledge (Python or JavaScript)

- Images you want to transform

Platform Options

Flux.1 Kontext is available through multiple platforms:

- Fal.ai - JavaScript-focused with robust async processing

- Replicate - Multi-language SDKs (Python, JS, Go, Swift)

- Runware - Cost-optimized hosting

- ComfyUI - Visual node-based interface

- LaoZhang-AI - Unified access to multiple AI models

Implementation Guide

Basic Setup with Fal.ai

```javascript
import * as fal from "@fal-ai/serverless-client";

// Configure your API key
fal.config({
  credentials: process.env.FAL_KEY
});

// Basic image transformation
async function transformImage(imageUrl, prompt) {
  const result = await fal.subscribe("fal-ai/flux-pro/kontext", {
    input: {
      image_url: imageUrl,
      prompt: prompt
    }
  });
  // Fal's Flux endpoints usually return { images: [{ url }] }; fall back to
  // image_url in case the response schema differs. Check the model page.
  return result.images?.[0]?.url ?? result.image_url;
}

// Example usage
const transformed = await transformImage(
  "https://example.com/original.jpg",
  "Transform this portrait into cyberpunk style with neon lighting"
);
```

Python Implementation with Replicate

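```python
import replicate

# Initialize client
client = replicate.Client(api_token="your-api-token")

def transform_image(image_path, prompt):
    """Transform an image using Flux.1 Kontext"""
    # Open the file in a context manager so the handle is always closed
    with open(image_path, "rb") as image_file:
        output = client.run(
            "black-forest-labs/flux-kontext-pro",
            input={
                # Replicate's Kontext schema names the image field "input_image";
                # confirm against the model page, as schemas change.
                "input_image": image_file,
                "prompt": prompt,
                # Sampler settings are not exposed by every hosted endpoint;
                # enable these only if the model's schema lists them.
                # "guidance_scale": 7.5,
                # "num_inference_steps": 50,
            }
        )
    return output

# Example: Change a day scene to night
result = transform_image(
    "day_scene.jpg",
    "Transform this daytime scene into a moody nighttime atmosphere with street lights"
)
```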
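The Replicate example hard-codes the token, whereas the Fal.ai example reads it from `process.env.FAL_KEY`. A minimal sketch of the same pattern in Python follows. `REPLICATE_API_TOKEN` is the variable the official Python client looks for by default; the helper name `load_api_token` is hypothetical and not part of any SDK.

```python
import os


def load_api_token(env=None, name="REPLICATE_API_TOKEN"):
    """Return the API token from the environment, failing early if unset."""
    # `env` can be any mapping; it defaults to the real process environment.
    env = os.environ if env is None else env
    token = env.get(name, "").strip()
    if not token:
        raise RuntimeError(f"Set {name} before calling the API")
    return token
```

With this in place, the client can be created as `replicate.Client(api_token=load_api_token())`, which keeps the secret out of source control.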

Advanced Options

```javascript
// Parameter support varies by platform and model: check the endpoint's input
// schema, since unsupported keys may be ignored or rejected.
const advancedTransform = await fal.subscribe("fal-ai/flux-pro/kontext", {
  input: {
    image_url: originalImage,
    prompt: detailedPrompt,
    // Advanced parameters
    guidance_scale: 8.0,        // Control prompt adherence (1-20)
    num_inference_steps: 75,    // Quality vs speed tradeoff
    seed: 42,                   // For reproducible results
    strength: 0.8,              // How much to change (0-1)
    negative_prompt: "blur, low quality, artifacts"
  }
});
```

Best Practices for Optimal Results

1. Start with High-Quality Source Images

Pro Tip: Use clear, well-lit images at least 512x512 pixels. Higher resolution inputs generally produce better outputs.
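The 512x512 minimum can be checked before spending an API call. The sketch below reads the width and height straight from a PNG header using only the standard library. It handles PNG input only (other formats would need an imaging library such as Pillow), and the function names are illustrative.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data: bytes) -> tuple[int, int]:
    """Read (width, height) from the IHDR chunk at the start of a PNG file."""
    # Layout: 8-byte signature, 4-byte chunk length, 4-byte "IHDR" tag,
    # then width and height as big-endian unsigned 32-bit integers.
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def meets_minimum(data: bytes, minimum: int = 512) -> bool:
    """True when both sides are at least `minimum` pixels."""
    width, height = png_dimensions(data)
    return min(width, height) >= minimum
```

Only the first 24 bytes of the file are needed, so `meets_minimum(open(path, "rb").read(24))` is enough for a quick pre-flight check.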

2. Craft Specific Prompts

Instead of vague instructions, be precise:

❌ Poor prompt: "Make it better"

✅ Good prompt: "Transform the modern office into a Victorian-era study with mahogany furniture, oil lamps, and leather-bound books while maintaining the same layout and perspective"

3. Use Preservation Phrases

To maintain certain elements while changing others:

- "while keeping the person's face unchanged"

- "maintaining the original color scheme"

- "preserving the background architecture"

4. Iterate Incrementally

Flux.1 Kontext allows you to build complex transformations step by step:

```javascript
// Step 1: Change the time of day
let result = await transformImage(original, "Change to sunset lighting");

// Step 2: Add atmospheric elements
result = await transformImage(result, "Add light fog while maintaining sunset colors");

// Step 3: Enhance mood
result = await transformImage(result, "Add subtle rain effects to create melancholic atmosphere");
```
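When chaining edits like this, it can help to keep every intermediate result, so that a bad step can be rolled back without regenerating (and paying for) the earlier ones. The Python sketch below shows one way to do that. The `transform` argument stands in for whatever function performs the API call, and `run_chain` is a hypothetical helper, not part of any SDK.

```python
from typing import Callable, List, Tuple


def run_chain(
    image: str,
    prompts: List[str],
    transform: Callable[[str, str], str],
) -> List[Tuple[str, str]]:
    """Apply prompts in order, returning (prompt, result) for every step."""
    history = []
    current = image
    for prompt in prompts:
        # Each step edits the output of the previous step.
        current = transform(current, prompt)
        history.append((prompt, current))
    return history
```

The final image is `history[-1][1]`; to retry the last step with a different prompt, restart from `history[-2][1]` instead of from the original.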

Real-World Use Cases

1. E-commerce Product Visualization

Transform product photos into different contexts:

```javascript
// Show a watch in various lifestyle settings
const contexts = [
  "Place this watch on a businessman's wrist in a boardroom",
  "Show the watch during an outdoor mountain adventure",
  "Display the watch in an elegant jewelry box"
];

// Collect each result so the generated images are not discarded
const lifestyleImages = [];
for (const context of contexts) {
  lifestyleImages.push(await transformImage(productImage, context));
}
```

2. Character Consistency for Storytelling

Create consistent characters across different scenes:

```python
character_base = "portrait_photo.jpg"

scenes = [
    "Place this person as a detective in a noir-style office",
    "Show the same person as a scientist in a futuristic lab",
    "Transform into fantasy warrior in enchanted forest"
]

story_images = [transform_image(character_base, scene) for scene in scenes]
```

3. Brand Asset Adaptation

Adapt logos and brand materials across different media:

```javascript
const brandAdaptations = {
  "billboard": "Place this logo on a Times Square billboard at night",
  "product": "Apply the logo as embossing on a luxury leather wallet",
  "digital": "Show the logo as a holographic projection"
};
```

4. Real Estate Virtual Staging

Transform empty rooms into furnished spaces:

```python
staging_prompts = {
    "living_room": "Furnish this empty room as a modern living room with sectional sofa, coffee table, and minimalist decor",
    "bedroom": "Transform into a cozy master bedroom with king bed, nightstands, and warm lighting",
    "office": "Convert to home office with desk, bookshelves, and professional atmosphere"
}
```

Pricing and Cost Optimization

Understanding the Cost Structure

Flux.1 Kontext pricing varies by model and platform:

| Model | Estimated Cost | Best For |
|---|---|---|
| Kontext [dev] | ~$0.02/image | Testing, non-commercial |
| Kontext [pro] | ~$0.05-0.10/image | Production use |
| Kontext [max] | ~$0.15-0.20/image | Premium quality needs |

⚠️ Cost Warning: Iterative editing can quickly add up. Each transformation counts as a new generation. Plan your edits carefully to manage costs.
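To see how quickly iterative editing adds up, the estimates in the table can be turned into a rough calculator. This is a sketch: the price ranges are the approximate figures quoted above, not official rates, and `estimate_cost` is an illustrative helper.

```python
# Estimated per-image price ranges in USD, copied from the table above.
PRICE_RANGES = {
    "dev": (0.02, 0.02),
    "pro": (0.05, 0.10),
    "max": (0.15, 0.20),
}


def estimate_cost(model: str, images: int, edits_per_image: int) -> tuple[float, float]:
    """Return (low, high) estimated spend; every edit is billed as one generation."""
    low, high = PRICE_RANGES[model]
    generations = images * edits_per_image
    return round(low * generations, 2), round(high * generations, 2)
```

For example, 100 product photos with three editing passes each on Kontext [pro] is 300 generations, so `estimate_cost("pro", 100, 3)` gives an estimated range of $15 to $30.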

Cost-Saving Strategies

- Batch Similar Transformations

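```javascript
// Instead of multiple API calls
// Note: multi-image input depends on the endpoint; confirm that the one you
// call accepts an image list before relying on this.
const batchTransform = await fal.run("fal-ai/flux-pro/kontext", {
  input: {
    images: [image1, image2, image3],
    prompt: "Apply vintage film effect to all"
  }
});
```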

- Use Lower-Cost Models for Testing

```python
# transform_with_model is a placeholder for your own wrapper around the API call

# Development phase: use dev model
test_result = transform_with_model("kontext-dev", image, prompt)

# Production: switch to pro
final_result = transform_with_model("kontext-pro", image, prompt)
```

- Implement Caching

```javascript
const transformCache = new Map();

async function cachedTransform(image, prompt) {
  const cacheKey = `${image}_${prompt}`;

  if (transformCache.has(cacheKey)) {
    return transformCache.get(cacheKey);
  }

  const result = await transformImage(image, prompt);
  transformCache.set(cacheKey, result);
  return result;
}
```
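The JavaScript cache above keys on the image reference string, so the same picture served from two different URLs would be generated twice. One alternative, sketched here in Python, is to key on the image content itself. The function name and the default model label are assumptions for illustration.

```python
import hashlib


def cache_key(image_bytes: bytes, prompt: str, model: str = "kontext-pro") -> str:
    """Build a stable key from the image content, the prompt, and the model."""
    digest = hashlib.sha256()
    for part in (model.encode(), prompt.strip().encode(), image_bytes):
        # Length-prefix each part so ("ab", "c") and ("a", "bc") cannot collide.
        digest.update(len(part).to_bytes(8, "big"))
        digest.update(part)
    return digest.hexdigest()
```

Including the model in the key avoids returning a cached [dev] result when a [pro] result was requested. Unless a fixed seed is used, a cache hit returns the first generation rather than a fresh variation.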

Flux vs. Other Image-to-Image Solutions

Performance Comparison

| Feature | Flux.1 Kontext | DALL-E 3 | Stable Diffusion | Midjourney |
|---|---|---|---|---|
| Speed | 6-12s ⚡ | 15-20s | 3-8s | 30-60s |
| Character Consistency | Excellent | Good | Fair | Good |
| API Access | Multiple platforms | OpenAI only | Open source | Discord only |
| Context Understanding | Superior | Good | Basic | Good |
| Price | ~$0.02-0.20/image | $0.04-0.08/image | Free (self-hosted) | $10-60/month |
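The Price row mixes per-image and monthly pricing, which makes direct comparison awkward. A rough way to compare them is to compute the monthly volume at which a flat subscription stops being more expensive than paying per image. This sketch ignores plan limits and quality differences, and the helper name is illustrative.

```python
import math


def break_even_images(monthly_fee: float, price_per_image: float) -> int:
    """Smallest monthly image count at which the flat fee is no more expensive."""
    # Round first so floating-point noise cannot push the result up by one.
    return math.ceil(round(monthly_fee / price_per_image, 6))
```

With the figures quoted in this guide, a $10/month plan breaks even against $0.05 per image at 200 images a month; below that volume, per-image pricing is cheaper.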

When to Choose Flux

Choose Flux when you need:

- Fast iteration cycles (6-12s generation)

- Perfect character/object consistency

- Precise, context-aware edits

- API integration flexibility

- Commercial usage rights

Consider alternatives when:

- Budget is extremely limited (use Stable Diffusion)

- You need the absolute highest artistic quality (Midjourney)

- You're already in the OpenAI ecosystem (DALL-E 3)

Troubleshooting Common Issues

1. Inconsistent Results

Problem: Output varies too much between generations

Solution:

```javascript
// Use a fixed seed for reproducibility
const consistentTransform = await fal.run("fal-ai/flux-pro/kontext", {
  input: {
    image_url: sourceImage,
    prompt: yourPrompt,
    seed: 12345 // Fixed seed
  }
});
```

2. Over-Transformation

Problem: Model changes too much of the image

Solution:

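```python
# Reduce the strength parameter
# (only applies where the endpoint exposes "strength"; check its input schema)
gentle_transform = client.run(
    "black-forest-labs/flux-kontext-pro",
    input={
        # Replicate's Kontext schema names the image field "input_image"
        "input_image": image,
        "prompt": prompt,
        "strength": 0.3  # Lower values = less change
    }
)
```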

3. Poor Text Rendering

Problem: Text in images appears garbled

Solution: Use Kontext [max] for superior typography:

```javascript
// Switch to max model for text-heavy transformations
// (Fal lists Kontext [max] under "fal-ai/flux-pro/kontext/max"; verify the ID)
const textTransform = await fal.run("fal-ai/flux-pro/kontext/max", {
  input: {
    image_url: signImage,
    prompt: 'Change the sign text to "OPEN 24/7" in bold red letters'
  }
});
```

Unified API Access with LaoZhang-AI

💡 Multi-Model Solution: Need access to Flux along with DALL-E, Midjourney, and other AI models? LaoZhang-AI provides unified API access with competitive pricing and simplified billing.

Benefits of using LaoZhang-AI:

- One API key for all major image generation models

- Unified billing across different providers

- Cost optimization with usage analytics

- Free trial credits to test all models

Example integration:

```javascript
// Access multiple models with one API
const laozhangClient = new LaoZhangAI({ apiKey: process.env.LAOZHANG_KEY });

// Use Flux for precise edits
const fluxResult = await laozhangClient.transform({
  model: "flux-kontext-pro",
  image: sourceImage,
  prompt: "Add realistic shadows"
});

// Compare with DALL-E
const dalleResult = await laozhangClient.transform({
  model: "dalle-3-edit",
  image: sourceImage,
  prompt: "Add realistic shadows"
});
```

Advanced Techniques

1. Multi-Stage Transformations

Create complex transformations through multiple passes:

```python
import os

import replicate

class FluxPipeline:
    def __init__(self, api_key):
        self.client = replicate.Client(api_token=api_key)

    def transform_pipeline(self, image, stages):
        """Apply multiple transformation stages"""
        current_image = image
        for stage in stages:
            print(f"Applying: {stage['name']}")
            # Each stage edits the output of the previous stage
            current_image = self.client.run(
                "black-forest-labs/flux-kontext-pro",
                input={
                    # Replicate's Kontext schema names the image field "input_image"
                    "input_image": current_image,
                    "prompt": stage['prompt'],
                    # Only honored where the endpoint exposes "strength"
                    "strength": stage.get('strength', 0.7)
                }
            )
        return current_image

# Example: Complete style transformation
# original_photo is the source image (an open file or URL) you supply
api_key = os.environ["REPLICATE_API_TOKEN"]
pipeline = FluxPipeline(api_key)
result = pipeline.transform_pipeline(original_photo, [
    {"name": "Time period", "prompt": "Transform to 1920s aesthetic"},
    {"name": "Lighting", "prompt": "Add dramatic film noir lighting", "strength": 0.5},
    {"name": "Details", "prompt": "Add period-appropriate clothing and hairstyles", "strength": 0.8}
])
```

2. Conditional Generation

Generate variations based on conditions:

```javascript
async function conditionalTransform(image, conditions) {
  const prompts = {
    "summer": "Transform scene to bright summer day with clear skies",
    "winter": "Convert to snowy winter scene with overcast sky",
    "night": "Change to nighttime with street lights and moon",
    "rain": "Add heavy rain and wet surfaces"
  };

  const results = {};

  for (const [condition, prompt] of Object.entries(prompts)) {
    if (conditions.includes(condition)) {
      results[condition] = await transformImage(image, prompt);
    }
  }

  return results;
}
```

3. Style Transfer with Preservation

Maintain specific elements while changing style:

```python
def style_transfer_preserve(image, style, preserve_elements):
    """Apply style while preserving specified elements"""
    # Only add the clause when there is something to preserve
    preservation_phrase = ""
    if preserve_elements:
        preservation_phrase = " while maintaining " + " and ".join(preserve_elements)
    prompt = f"Transform this image into {style} style{preservation_phrase}"
    return transform_image(image, prompt)

# Example: Anime style but keep realistic proportions
result = style_transfer_preserve(
    portrait,
    "anime",
    ["realistic facial proportions", "original color palette", "background architecture"]
)
```

Future of Flux Image-to-Image

Upcoming Features

Based on current development trends, plausible additions include:

- Extended Context Windows - Process multiple reference images simultaneously

- Video Frame Consistency - Apply transformations across video sequences

- Real-time Processing - Sub-second generation for interactive applications

- Enhanced Control - More granular control over specific regions

Integration Possibilities

Possible integration directions (speculative, not an announced roadmap):

- Browser-based editing without server requirements

- Mobile SDK for on-device transformation

- Batch processing APIs for enterprise scale

- Collaborative editing features

Conclusion

Flux.1 Kontext represents a significant leap forward in image-to-image AI technology. With its fast generation, consistency, and context awareness, it's becoming a go-to solution for developers and creators who need reliable image transformation capabilities.

Key takeaways:

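- 6-12 second generation is faster than the hosted alternatives compared above (a self-hosted Stable Diffusion setup can be quicker)

- Character consistency is rated above DALL-E 3, Stable Diffusion, and Midjourney in the comparison above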

- Multiple API options provide flexibility in implementation

- Cost considerations require careful planning for production use

Whether you're building an e-commerce platform, creating consistent characters for storytelling, or developing the next generation of creative tools, Flux.1 Kontext provides the speed and quality you need.

For those requiring access to multiple AI models, services like LaoZhang-AI offer a unified solution that includes Flux alongside other leading image generation models, simplifying integration and billing.

Start experimenting with Flux image-to-image today and discover how AI-powered transformations can enhance your creative workflow!

Last updated: July 8, 2025. Model capabilities and pricing subject to change. Always refer to official documentation for the most current information.