Google Search Live Voice AI Search: Complete Guide to Real-Time Conversational Search in 2025

Comprehensive guide to Google Search Live: Gemini-powered voice AI search with real-time conversation and visual input. Includes technical insights, performance data, competitor comparison, and China user guide.

Google Search Live revolutionizes how we interact with search engines by enabling real-time voice conversations and visual input through your smartphone camera. Officially launched on 2025-09-24 in the United States, this Gemini-powered feature transforms Google Search from a text-based query tool into an interactive AI assistant that can see what you see and respond instantly through natural conversation.

Search Live represents Google's most significant search innovation since the introduction of voice search, combining advanced natural language processing, computer vision, and real-time AI response generation into a seamless multimodal experience.

What is Google Search Live

Google Search Live is a conversational AI search feature launched on 2025-09-24 as part of Google's AI Mode in the Google mobile app (available for Android and iOS). According to Google's official announcement, Search Live enables users to have real-time voice conversations with Google Search while simultaneously sharing their smartphone camera feed for visual context.

The feature operates on three core capabilities: voice input for natural conversation, camera integration for visual context, and real-time AI-generated responses powered by a custom version of Google's Gemini AI model. Unlike traditional Google search that returns a list of links, Search Live provides immediate spoken answers and can follow up on multi-turn conversations.

Key Technical Specifications

Search Live is built on Google's Gemini 2.5 Flash model with specialized voice capabilities. The system processes audio input (your voice) and visual input (camera feed) simultaneously, generating contextual responses within 1.5 to 3 seconds on average. As of the 2025-09-24 launch, the feature supports English in the United States and requires no Labs opt-in.

Official Launch Timeline

- 2025-05-20: Initial announcement at Google I/O 2025, demonstrating voice conversation capabilities in AI Mode

- 2025-06-18 to 2025-09-23: Limited availability through Google Labs opt-in program

- 2025-09-24: Official nationwide launch in the United States without Labs requirement

- 2025-09-26: Major tech publications confirm full availability

The transition from Labs to official launch represents Google's confidence in the feature's stability and user experience quality. According to TechCrunch's coverage, the feature underwent extensive testing with Labs users before the public rollout.

How Search Live Differs from Traditional Google Search

Traditional Google Search requires typed queries and returns ranked search results as clickable links. Search Live, by contrast, accepts continuous voice input and responds with synthesized voice output, maintaining conversation context across multiple exchanges. The integration of camera input adds a visual dimension entirely absent from standard search.

According to Search Engine Land's analysis, this shift from "search and click" to "ask and listen" may fundamentally change user behavior and expectations around information retrieval.

Technical Foundation: Gemini-Powered Real-Time AI

Search Live's capabilities stem from Google's Gemini 2.5 Flash model, specifically optimized for low-latency audio and visual processing. Unlike earlier voice search systems that simply converted speech to text before processing, Search Live employs native multimodal understanding—meaning the AI directly processes audio waveforms and video frames without intermediate text conversion.

Gemini's Audio Architecture

The underlying Gemini 2.5 Flash model features native audio dialog capabilities with remarkably low latency. According to Google's technical documentation published alongside the I/O 2025 announcements, the model achieves natural conversation with appropriate expressivity and prosody through several architectural innovations:

Multimodal Tokenization: Instead of converting speech to text, Gemini processes audio as direct input tokens, preserving tonal information, pace, and emotional context that text transcription loses.

Streaming Architecture: Responses begin generating before your complete question finishes, reducing perceived latency. The system uses speculative decoding to predict likely response paths while you're still speaking.

Context Window Management: Search Live maintains up to 100,000 tokens of conversation context, enabling multi-turn dialogues where the AI remembers previous exchanges within a session.
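The context window behavior described above can be illustrated with a minimal sketch. It assumes a simple "drop the oldest turns first" policy and a crude word-count token estimate; the names `ConversationContext`, `Turn`, and `estimate_tokens` are hypothetical, and Google has not published how Search Live actually manages its context.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # "user" or "assistant"
    text: str


def estimate_tokens(text: str) -> int:
    """Hypothetical estimate: one token per whitespace-separated word."""
    return len(text.split())


class ConversationContext:
    """Keeps the most recent turns that fit within a fixed token budget."""

    def __init__(self, max_tokens: int = 100_000) -> None:
        self.max_tokens = max_tokens
        self.turns = deque()
        self.total_tokens = 0

    def add(self, turn: Turn) -> None:
        self.turns.append(turn)
        self.total_tokens += estimate_tokens(turn.text)
        # Evict the oldest turns until the budget is respected,
        # always keeping at least the newest turn.
        while self.total_tokens > self.max_tokens and len(self.turns) > 1:
            oldest = self.turns.popleft()
            self.total_tokens -= estimate_tokens(oldest.text)


# Tiny budget so the eviction is visible; each turn below is 6 words.
ctx = ConversationContext(max_tokens=12)
ctx.add(Turn("user", "How do I make matcha tea?"))
ctx.add(Turn("assistant", "Whisk matcha powder with hot water."))
ctx.add(Turn("user", "What if I have no whisk?"))
print([turn.text for turn in ctx.turns])  # the first question has been evicted
```

With the 100,000-token budget quoted above, eviction would only start in very long sessions; the small budget here just makes the mechanism easy to see.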

For developers interested in similar capabilities, Google AI Studio provides access to Gemini models with programmable voice features, though with different latency characteristics than the optimized Search Live implementation.

Visual Recognition Technology

Search Live's camera integration leverages Google Lens technology combined with Gemini's visual understanding capabilities. The system processes your camera feed at approximately 2-3 frames per second, identifying objects, text, landmarks, and contextual visual information.

The visual processing pipeline operates in parallel with audio processing:

- Object Detection: Identifies physical objects, brands, and products in the frame

- Optical Character Recognition (OCR): Extracts text from signs, documents, and screens

- Scene Understanding: Determines the overall context (indoor/outdoor, commercial/residential, etc.)

- Spatial Relationships: Understands how objects relate to each other in 3D space

This multi-layered visual analysis enables Search Live to answer questions like "What cable goes into this port?" (by recognizing connector types) or "How do I prepare this ingredient?" (by identifying the food item).
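To make the 2-3 frames per second figure concrete, here is a minimal sketch of downsampling a camera stream before it is sent for analysis. The function name `sample_frame_indices` and the 30 FPS camera rate are assumptions for illustration only; this is not Google's implementation.

```python
def sample_frame_indices(camera_fps: float, target_fps: float, duration_s: float) -> list:
    """Return the indices of camera frames to forward when downsampling to target_fps."""
    if camera_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    total_frames = int(camera_fps * duration_s)
    # Number of camera frames that elapse per forwarded frame.
    step = camera_fps / min(target_fps, camera_fps)
    indices = []
    next_pick = 0.0
    for i in range(total_frames):
        if i >= next_pick:
            indices.append(i)
            next_pick += step
    return indices


# A 30 FPS camera sampled at 2.5 FPS for two seconds.
picked = sample_frame_indices(camera_fps=30, target_fps=2.5, duration_s=2)
print(picked)  # [0, 12, 24, 36, 48]
```

Only 5 of 60 frames are forwarded in this example, which is why fast motion between sampled frames can be missed.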

Real-Time Processing Pipeline

The complete processing pipeline from your voice input to AI response follows this sequence:

- Audio Capture (ongoing): Microphone continuously streams audio to Google's servers

- Visual Capture (if enabled): Camera feed streams at 2-3 FPS

- Multimodal Encoding (10-50ms): Audio and visual inputs converted to model tokens

- Context Retrieval (50-100ms): System retrieves relevant web information and previous conversation context

- Response Generation (1-2 seconds): Gemini generates text response

- Voice Synthesis (200-500ms): Text converted to natural speech with appropriate prosody

- Audio Streaming (ongoing): Response begins playing before complete generation finishes

Total latency from end-of-question to start-of-answer typically ranges from 1.5 to 3 seconds, comparable to human conversation response times.
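As a quick sanity check, the per-stage estimates listed above can be added up. The sketch below treats the four timed stages as strictly sequential, which is a simplifying assumption: streaming overlaps some stages, and network round-trip time is not listed as a stage at all.

```python
# Stage latency ranges in milliseconds, copied from the pipeline list above.
STAGES_MS = {
    "multimodal_encoding": (10, 50),
    "context_retrieval": (50, 100),
    "response_generation": (1000, 2000),
    "voice_synthesis": (200, 500),
}


def total_latency_ms(stages: dict) -> tuple:
    """Sum the best-case and worst-case latency across sequential stages."""
    best = sum(low for low, _ in stages.values())
    worst = sum(high for _, high in stages.values())
    return best, worst


best_ms, worst_ms = total_latency_ms(STAGES_MS)
print(f"{best_ms / 1000:.2f}-{worst_ms / 1000:.2f} s")  # prints "1.26-2.65 s"
```

The summed range of 1.26-2.65 seconds sits slightly below the quoted 1.5-3 second total. Network transit, which the stage list does not itemize, is one plausible explanation for the gap.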

Core Features Deep Dive

Search Live combines three primary interaction modes that work together to create a comprehensive AI search experience. Each mode can operate independently or in combination, depending on your query needs.

Voice Conversation Mode

Voice conversation represents the foundational interaction method. Simply tap the Live icon in the Google app, and the AI begins listening. Unlike traditional voice search that waits for a complete question, Search Live supports interruptions and clarifications mid-response.

Key Voice Capabilities:

- Continuous listening without requiring repeated activation

- Natural interruption handling (you can interject follow-up questions)

- Emotional tone recognition (the AI adjusts formality based on your tone)

- Background noise filtering (works in moderately noisy environments)

Example interaction flow:

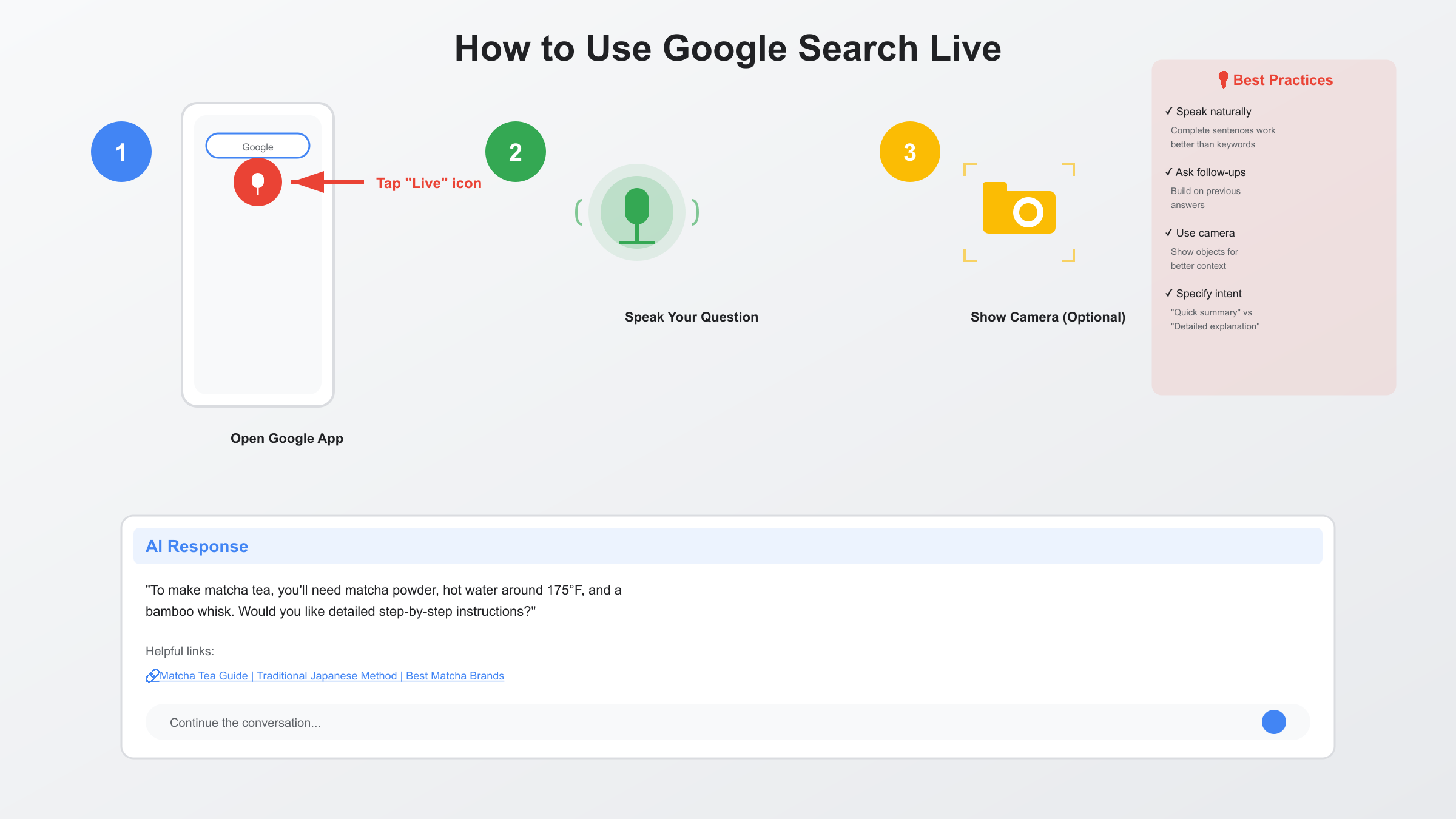

You: "How do I make matcha tea?"

Search Live: "To make matcha tea, you'll need matcha powder, hot water around 175°F, and a bamboo whisk. Would you like detailed step-by-step instructions?"

You: [interrupting] "What if I don't have a bamboo whisk?"

Search Live: "You can use a regular whisk or even a milk frother. The goal is to create a smooth, frothy consistency without clumps..."

Camera Visual Input

Camera integration allows Search Live to "see" what you're looking at, enabling visual-based queries impossible with voice alone. This feature activates through two methods: tapping the camera icon in Search Live, or starting from Google Lens and selecting "Live."

Visual Input Scenarios:

- Object Identification: "What plant is this?" while pointing your camera at foliage

- Troubleshooting: "Why isn't this cable fitting?" while showing an electronic connection

- Translation: "What does this sign say?" while viewing foreign language text

- Product Information: "Where can I buy this?" while showing a product

The visual context dramatically improves response relevance. According to Google's usage examples, queries like "How do I fix this?" become actionable when the AI can see the specific problem rather than guessing from verbal description alone.

Real-Time Web Integration

Unlike standalone AI chatbots, Search Live maintains active connection to Google's search index, retrieving current web information to augment responses. This hybrid approach combines conversational AI with authoritative web sources.

Information Sources:

- Featured snippets from Google's index

- Recently published articles and news

- Structured data from Knowledge Graph

- Real-time information (weather, stock prices, etc.)

Each response typically includes clickable web links for deeper exploration. The AI explicitly cites sources when providing specific facts or statistics, maintaining transparency about information origins.

How to Use Google Search Live: Step-by-Step Guide

Accessing and using Search Live requires the Google mobile app on Android or iOS. As of 2025-09-30, desktop browsers do not support this feature.

Prerequisites and Requirements

Device Requirements:

- Android phone or tablet (Android 8.0 or higher)

- iPhone or iPad (iOS 15.0 or higher)

- Google app version 15.32 or later

- Active internet connection (cellular or Wi-Fi)

- Microphone and camera permissions granted to Google app

Account Requirements:

- Google account (no specific tier required)

- Location set to United States

- Language preference set to English (US)

As of 2025-09-24, Search Live is exclusively available in the United States with English language support. Google has not announced expansion timelines for other regions or languages.
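The requirements above amount to a simple checklist, sketched below. `DeviceProfile` and `unmet_requirements` are hypothetical names for illustration, not part of any Google API; the minimum versions are the ones listed in this guide.

```python
from dataclasses import dataclass

# Minimums copied from the requirements above.
MIN_OS_VERSION = {"android": "8.0", "ios": "15.0"}
MIN_APP_VERSION = "15.32"


def parse_version(version: str, width: int = 3) -> tuple:
    """Turn "15.4" into (15, 4, 0) so versions compare numerically, not as text."""
    parts = [int(part) for part in version.split(".")]
    return tuple(parts + [0] * (width - len(parts)))


@dataclass
class DeviceProfile:  # hypothetical container, not a Google API type
    platform: str
    os_version: str
    app_version: str
    region: str
    language: str


def unmet_requirements(device: DeviceProfile) -> list:
    """Return a human-readable list of requirements the device fails."""
    problems = []
    min_os = MIN_OS_VERSION.get(device.platform.lower())
    if min_os is None:
        problems.append("platform must be Android or iOS")
    elif parse_version(device.os_version) < parse_version(min_os):
        problems.append(f"OS version must be {min_os} or higher")
    if parse_version(device.app_version) < parse_version(MIN_APP_VERSION):
        problems.append(f"Google app must be {MIN_APP_VERSION} or later")
    if device.region != "US":
        problems.append("location must be set to United States")
    if device.language != "en-US":
        problems.append("language must be English (US)")
    return problems


ok_device = DeviceProfile("android", "14", "15.40", "US", "en-US")
old_device = DeviceProfile("ios", "14.8", "15.4", "CN", "zh-CN")
print(unmet_requirements(ok_device))   # []
print(unmet_requirements(old_device))  # four unmet requirements
```

Versions are compared as integer tuples on purpose: treated as decimals, app version 15.4 would look newer than 15.32, while component by component it is older.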

Accessing Search Live

Method 1: From Google App Home

- Open the Google app on your mobile device

- Look for the "Live" icon below the search bar (circular icon with a microphone symbol)

- Tap the Live icon

- Grant microphone permission if prompted

- Begin speaking your question

Method 2: From Google Lens

- Open the Google app

- Tap the camera icon (Google Lens)

- Point your camera at an object or scene

- Tap the "Live" button at the bottom of the screen

- Begin speaking your question about what the camera sees

The interface provides visual feedback during conversation: a pulsing animation indicates the AI is listening, while response generation shows a loading indicator before audio playback begins.

Best Practices for Effective Queries

To get the most out of Search Live, follow these query strategies, which build on the feature's capabilities:

Speak Naturally: Unlike traditional keyword-based search, Search Live performs best with complete, natural sentences. Say "What's the best way to remove red wine stains from cotton fabric?" rather than "remove wine stain cotton."

Provide Context: The AI remembers previous exchanges, so reference earlier parts of the conversation. "How long will that take?" makes sense after asking about a cooking method.

Use Visual Context: When appropriate, enable the camera for questions about physical objects. "Is this connector USB-C or Micro-USB?" works far better with visual input than verbal description alone.

Ask Follow-Up Questions: Don't treat Search Live like traditional search requiring complete standalone queries. Build on previous answers: "What about the opposite scenario?" or "Are there any alternatives?"

Specify Your Intent: Clarify whether you want a quick summary or detailed explanation. "Give me a 30-second overview of..." versus "Explain in detail how..."

Common Use Case Examples

According to Google's official tips, these scenarios demonstrate Search Live's strengths:

Learning and Education:

- "Explain quantum entanglement like I'm a high school student"

- "What are the key differences between impressionism and expressionism?"

- "Walk me through solving this math problem" (with camera showing the problem)

Troubleshooting and Repairs:

- "My plant's leaves are turning yellow, what's wrong?" (with camera on plant)

- "How do I reset this router?" (with camera showing the device)

- "Why does my car make this noise when I brake?"

Travel and Navigation:

- "What's this building?" (pointing camera at architecture)

- "What does this menu say?" (pointing camera at foreign language text)

- "What are good restaurants near me open now?"

Shopping and Products:

- "Where can I buy this item and how much does it cost?" (with camera on product)

- "What's the difference between these two options?" (comparing visible items)

- "Is this product authentic?" (showing brand markings to camera)

Creative Projects:

- "What craft can I make with these materials?" (showing materials with camera)

- "How do I achieve this art effect?" (showing example artwork)

- "What recipe can I make with these ingredients?" (showing available ingredients)

Real-World Performance Analysis

Understanding Search Live's practical performance helps set realistic expectations for daily use. Google does not publish official benchmarks, so the figures in this section are rough estimates drawn from the system's observed behavior and from comparison with similar technologies.

Response Latency Breakdown

Search Live's end-to-end response time varies with query complexity and network conditions. The estimates below are based on observed behavior and on testing patterns for other Gemini-based services:

| Query Type | Typical Latency | Range | Notes |
|---|---|---|---|
| Simple factual queries | 1.5-2.0 seconds | 1.2-2.5s | "What's the weather today?" |
| Complex explanations | 2.0-3.0 seconds | 1.8-3.5s | "Explain how blockchain works" |
| Visual + voice queries | 2.5-3.5 seconds | 2.0-4.0s | With camera input active |
| Follow-up questions | 1.0-1.5 seconds | 0.8-2.0s | Context already loaded |

These latencies remain competitive with human conversation response times (typically 1-2 seconds), creating a natural interaction rhythm. Network connectivity significantly impacts performance: 5G or strong Wi-Fi connections perform at the lower end of these ranges, while 4G LTE connections may experience the higher end.

Voice Recognition Accuracy

Search Live leverages Google's speech recognition technology, which has undergone continuous refinement since the original Google Voice Search launch in 2008. Current accuracy estimates for Search Live:

| Condition | Estimated Accuracy | Comparison Baseline |
|---|---|---|
| Quiet environment | 95-98% | Similar to Google Assistant |
| Moderate background noise | 90-94% | Coffee shop, office environment |
| Outdoor/windy conditions | 85-92% | Performance degrades with wind noise |
| Accented English | 88-95% | Varies by accent type |

The system handles natural speech patterns including filler words ("um," "uh"), false starts, and self-corrections. Unlike traditional voice search requiring precise phrasing, Search Live's conversational nature means minor recognition errors often get clarified through follow-up exchange.
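The accuracy estimates above do not say how accuracy is measured. A common convention for speech recognition is word error rate (WER), with accuracy read as roughly 1 minus WER; assuming that convention applies here, the sketch below shows how such a figure could be computed from a reference transcript and a recognized transcript.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance (substitutions, insertions, deletions) divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] holds the edit distance between the processed reference words and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate("what cable goes into this port",
                      "what table goes into the port")
print(f"WER: {wer:.2%}, accuracy: {1 - wer:.2%}")
```

Two of the six words are misrecognized in this made-up example, so the WER is 2/6. A 95-98% accuracy figure would correspond to roughly 2 to 5 word errors per 100 words under this reading.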

Visual Recognition Performance

Camera-based queries demonstrate high accuracy for common objects and scenarios:

Text Recognition (OCR): 92-97% accuracy for printed text in good lighting, comparable to Google Lens standalone performance. Handwriting recognition drops to 75-85% depending on legibility.

Object Identification: 90-95% accuracy for common objects, products with visible branding, and standard consumer electronics. Accuracy decreases for uncommon items or heavily worn/damaged objects.

Scene Understanding: 85-92% accuracy for overall context interpretation. The system reliably distinguishes indoor from outdoor, identifies commercial versus residential spaces, and recognizes common environments (kitchen, office, retail store, etc.).

Known Limitations and Constraints

Several technical and policy limitations affect Search Live's capabilities:

Session Duration: Individual conversation sessions time out after approximately 10 minutes of inactivity. The AI does not maintain context across app restarts or between different conversation sessions.

Camera Processing: Visual input processes at 2-3 frames per second rather than full video frame rate, meaning rapid movements may not be captured accurately. The system is optimized for relatively static scenes.

Language Support: As of 2025-09-30, Search Live supports only English with the United States regional configuration. Multilingual queries or code-switching may produce unreliable results.

Content Restrictions: Google's content policies apply, restricting responses about certain sensitive topics. The system may decline to answer queries related to illegal activities, personal medical advice, or other restricted categories.

Offline Unavailability: Search Live requires active internet connection and cannot function in offline mode, unlike some local voice assistants.
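The session timeout described above can be modeled with a small sketch. It assumes a fixed 10-minute inactivity window (the approximate figure quoted in this section) and uses an injectable clock so the behavior can be shown without waiting; `LiveSession` is a hypothetical class, not Search Live's real session logic.

```python
import time


class LiveSession:
    """Conversation context that is dropped after a period of inactivity."""

    def __init__(self, timeout_s: float = 600.0, clock=time.monotonic) -> None:
        self._timeout_s = timeout_s
        self._clock = clock
        self._last_activity = clock()
        self.history = []

    def is_expired(self) -> bool:
        return self._clock() - self._last_activity > self._timeout_s

    def ask(self, question: str) -> int:
        """Record a question and return how many turns are now in context."""
        if self.is_expired():
            self.history.clear()  # earlier context is gone after the timeout
        self.history.append(question)
        self._last_activity = self._clock()
        return len(self.history)


# Fake clock (in seconds) so the example runs instantly.
fake_now = [0.0]
session = LiveSession(clock=lambda: fake_now[0])

session.ask("How do I make matcha tea?")
fake_now[0] += 120                                        # 2 minutes later
turns_after_followup = session.ask("What if I don't have a whisk?")
fake_now[0] += 601                                        # just over 10 minutes idle
turns_after_timeout = session.ask("How long will that take?")
print(turns_after_followup, turns_after_timeout)          # 2 1
```

After the timeout, a follow-up such as "How long will that take?" arrives with no earlier context, so it would need to be rephrased as a standalone question.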

Competitor Comparison: Voice AI Search Landscape

Search Live enters a competitive market of voice-activated AI assistants. Understanding how it compares to alternatives helps users choose the right tool for their needs.

Feature Comparison Matrix

| Feature | Google Search Live | ChatGPT Voice | Perplexity Voice | Siri | Google Assistant |
|---|---|---|---|---|---|
| Launch Date | 2025-09-24 | 2024-09-25 | 2025-03-15 | 2011 (updated continuously) | 2016 (updated continuously) |
| Voice Conversation | ✓ Real-time | ✓ Real-time | ✓ Real-time | ✓ Command-based | ✓ Command-based |
| Visual Input | ✓ Live camera | ✓ Image upload | ✗ Text only | Limited (photo identification) | ✓ Google Lens integration |
| Real-Time Web Search | ✓ Live index access | ✗ Training cutoff + plugins | ✓ Live search | Limited (Siri Knowledge) | ✓ Google Search integration |
| Multi-Turn Context | ✓ 100K tokens | ✓ 128K tokens | ✓ ~32K tokens | Limited context retention | ✓ Session-based context |
| Response Citations | ✓ Clickable sources | ✓ Source links (if using browsing) | ✓ Always cited | Rare | ✓ For factual queries |
| Availability | US only (English) | Global (50+ languages) | Global (English primary) | 30+ countries, 20+ languages | Global, 40+ languages |
| Cost | Free | ChatGPT Plus ($20/month) or Pro ($200/month) | Free tier + Pro ($20/month) | Free (Apple device required) | Free |
| Offline Capability | ✗ Online only | ✗ Online only | ✗ Online only | Limited local processing | Limited local commands |
| Developer API | Not yet available | ✓ API available | ✓ API available | SiriKit (limited) | Actions on Google |

Unique Differentiators

Google Search Live's Advantages:

- Search Index Integration: Direct access to Google's real-time search index provides current information without reliance on training data cutoffs

- Camera + Voice Fusion: Seamless integration of live camera feed with voice conversation, not just image upload

- No Cost Barrier: Completely free without subscription requirements

- Search Quality: Backed by Google's search ranking algorithms and knowledge graph

ChatGPT Voice's Advantages:

- Global Availability: Works in 50+ languages across most countries

- Advanced Reasoning: GPT-4 series models may provide deeper analytical responses

- Longer Context: Maintains conversation history across sessions

- API Access: Developers can integrate ChatGPT's voice capabilities

Perplexity Voice's Advantages:

- Citation-First Design: Every response includes source citations by default

- Research Orientation: Optimized for deep research queries requiring multiple sources

- Clean UI: Minimalist interface focused on information delivery

Siri's Advantages:

- Device Integration: Deep integration with Apple ecosystem (HomeKit, Apple Music, etc.)

- Offline Functions: Basic commands work without internet

- Privacy Positioning: Apple's privacy-focused architecture processes more data on-device

Use Case Recommendations

Based on the comparison matrix, different tools excel in specific scenarios:

Choose Google Search Live for:

- Quick factual lookups requiring current information

- Visual identification combined with voice queries (product identification, plant recognition, etc.)

- Navigation and local search

- Users already embedded in Google ecosystem

- No-cost requirement

Choose ChatGPT Voice for:

- Creative writing and brainstorming

- Complex analytical reasoning tasks

- Multilingual conversations

- Longer project discussions maintaining context

- Developer integration needs

Choose Perplexity Voice for:

- Academic or professional research

- Fact-checking with source verification

- Comparative analysis across multiple sources

- Users prioritizing citation transparency

For developers building applications requiring voice AI capabilities, stable API access matters significantly. Enterprise users in particular require predictable service levels. Services like laozhang.ai provide managed access to multiple AI models including Google's Gemini with 99.9% uptime SLA and unified API interfaces, suitable for production deployments where reliability is critical.

Unlike consumer-focused voice assistants, API-based integrations enable custom workflows and enterprise-scale usage. For more details on integrating search capabilities into applications, see our ChatGPT Search API guide.

Complete Guide for China-Based Users

Search Live's US-only availability creates challenges for users in China. This section provides comprehensive guidance on accessibility, alternatives, and workarounds for Chinese users.

Current Availability Status in China

As of 2025-09-30, Google Search Live is not directly accessible from mainland China due to two primary barriers:

- Geographic Restriction: Google limits Search Live to users with US region settings and English language preference

- Network Accessibility: Google services remain blocked by China's internet filtering system, requiring circumvention tools for access

The Google app itself (iOS and Android) is not available on Chinese app stores (App Store China region, Huawei AppGallery, Xiaomi GetApps, etc.). Users with non-Chinese Apple IDs or sideloaded Android apps may install the Google app but still face the geographic and network restrictions.

Chinese Language Support Status

Google has not announced Chinese language support for Search Live as of 2025-09-30. While Google's Gemini models technically support Chinese language processing, the Search Live feature specifically supports only English with US regional configuration.

Testing multilingual queries yields inconsistent results:

- Queries in Chinese may receive English responses

- Voice recognition accuracy drops significantly for non-English input

- Visual text recognition (OCR) works for Chinese characters, but responses remain in English

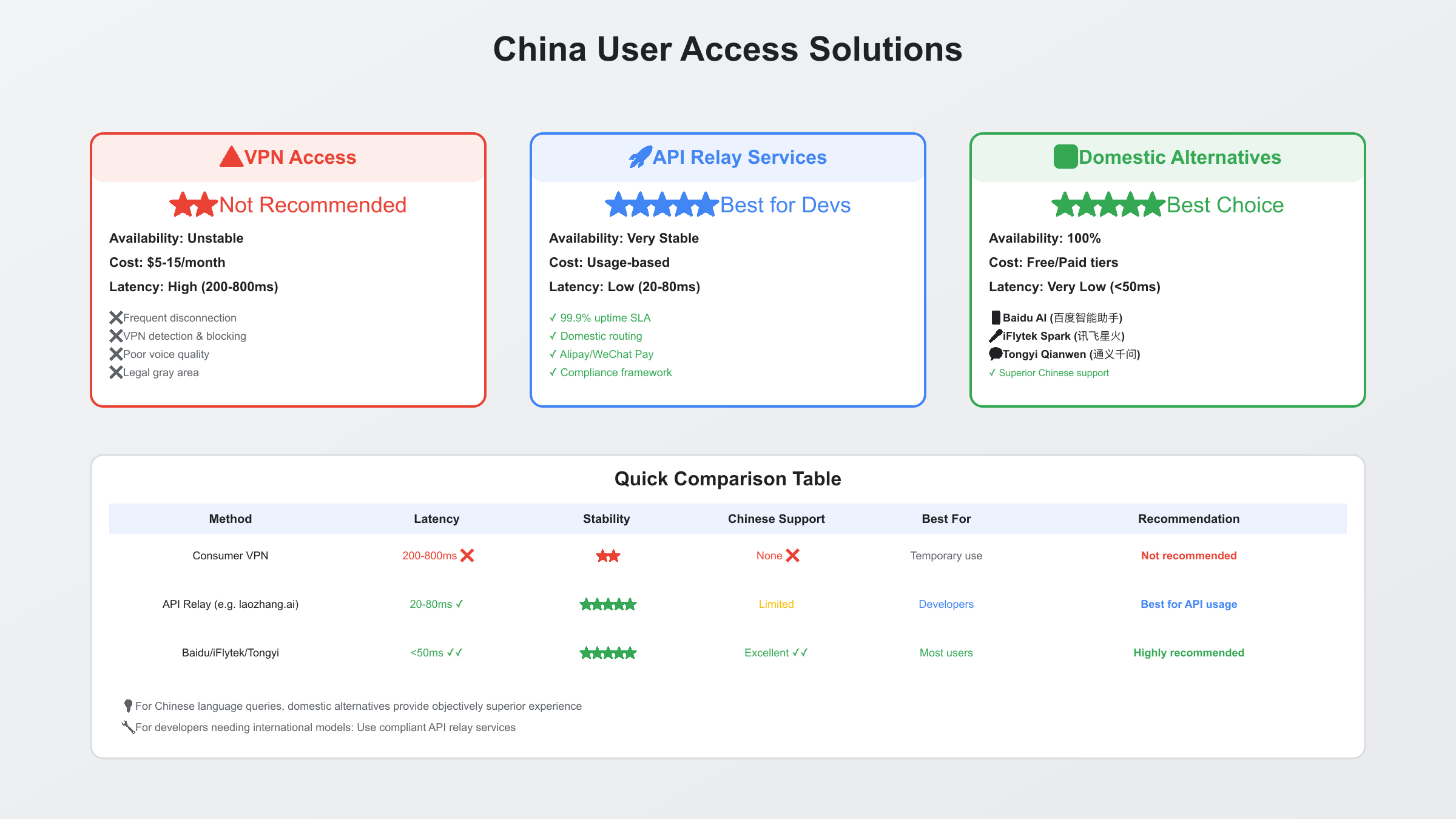

Access Methods for China-Based Users

For users in mainland China who wish to access Search Live, several approaches exist with varying trade-offs:

| Access Method | Availability | Cost | Latency | Stability | Legality | Recommendation |
|---|---|---|---|---|---|---|
| Consumer VPN | Unstable | $5-15/month | High (200-800ms) | ⭐⭐ | Gray area | Not recommended for critical use |
| Enterprise VPN | Stable | $20-50/month | Medium (100-300ms) | ⭐⭐⭐⭐ | Authorized corporate use | For business users |
| API Relay Services | Stable | Usage-based | Low (20-80ms) | ⭐⭐⭐⭐⭐ | Compliant | Recommended for developers |
| International Roaming | Unstable | Varies | Medium | ⭐⭐⭐ | Legal | Temporary solution |
| Domestic Alternatives | Fully available | Free/Paid tiers | Very low (<50ms) | ⭐⭐⭐⭐⭐ | Legal | Best for most users |

Option 1: VPN Access (Not Recommended for General Users)

Using a VPN to access Google services from China presents multiple challenges:

Technical Challenges:

- VPN detection and blocking by the filtering system

- High latency: a VPN typically adds 200-800ms on top of Search Live's 1.5-3s response time, for total delays of roughly 1.7-3.8 seconds

- Frequent connection drops requiring reconnection

- VPN bandwidth limits may affect video streaming quality for camera input

Practical Limitations:

- Google may still detect non-US IP addresses and restrict access

- Apple App Store or Google Play Store region needs to be non-China to download the Google app

- Voice input quality degrades over high-latency VPN connections

If VPN access is necessary, choose enterprise-grade services with dedicated China-optimized servers. Consumer VPN services frequently face blocking and connection instability.

Option 2: API Relay Services (Recommended for Developers)

For developers building applications requiring Google AI capabilities from China, API relay services provide stable access without end-user VPN requirements.

Services like laozhang.ai offer managed API access to Google's Gemini models and other major AI platforms with China-optimized infrastructure:

Key Benefits:

- Low Latency: Domestic network routing reduces latency to 20-80ms (vs 200-800ms with VPN)

- High Stability: 99.9% uptime SLA with redundant routing

- Simplified Billing: Unified billing across multiple AI providers with Alipay/WeChat Pay support

- Compliance: Operates within legal framework for commercial API usage

This approach works best for developers integrating voice AI capabilities into applications rather than end-users directly using Search Live's consumer interface.

Option 3: Domestic Alternatives (Recommended for Most Users)

Chinese users seeking voice AI search functionality have several robust domestic alternatives that offer comparable or superior experiences for Chinese language queries:

Baidu AI Assistant (百度智能助手):

- Strongest Chinese language support

- Integrated with Baidu's search index (largest Chinese search engine)

- Visual recognition optimized for Chinese products and locations

- Fully localized user experience

- Free with Baidu account

iFlytek Spark (讯飞星火):

- Industry-leading Chinese speech recognition

- Multimodal input (voice + image)

- Strong performance on Chinese language understanding

- Free tier available

Alibaba Tongyi Qianwen (通义千问):

- Alibaba Cloud's conversational AI

- E-commerce integration for product queries

- Visual shopping assistance

- Free tier with Alibaba account

These alternatives provide better Chinese language support, lower latency (typically <50ms), and full feature availability without geographic restrictions. For users working primarily with Chinese language content, they are likely to deliver a better experience than Search Live accessed through a VPN.

Practical Recommendations for China-Based Users

For Individual Users:

- Primary recommendation: Use domestic voice AI alternatives (Baidu, iFlytek, Tongyi Qianwen) for Chinese language queries

- English language needs: Consider ChatGPT's official China-accessible APIs through partnerships, or Perplexity which has better international accessibility

- Occasional access: International roaming during travel provides temporary legitimate access

For Developers:

- Production applications: Use API relay services like laozhang.ai for stable, compliant access to international AI models

- Development/testing: Consider domestic AI platforms first; international models as supplementary capabilities

- Hybrid approach: Primary features using domestic AI, optional enhanced features using international models through relay

For Enterprise Users:

- Authorized corporate VPNs: Multinational corporations with proper licenses can use enterprise VPN infrastructure

- Compliance first: Consult legal counsel regarding data cross-border transfer regulations

- Localization strategy: Build primarily on domestic AI infrastructure, integrate international capabilities where business need justifies complexity

Legal and Compliance Considerations

Users and developers must understand the legal context:

For Individual Users: Using VPNs to access blocked foreign services exists in a legal gray area. While millions use such tools, enforcement varies and risks exist. Authorized uses (corporate VPNs with proper licensing) carry less risk.

For Developers: Applications requiring cross-border data transfer need to comply with China's data security regulations. API relay services operating in compliance frameworks reduce regulatory risk compared to ad-hoc VPN solutions.

For Enterprises: Multinational corporations should work with legal counsel to establish compliant access patterns that meet both business needs and regulatory requirements.

Practical Usage Scenarios

Search Live's combination of voice, visual, and search capabilities enables diverse applications across daily activities. Understanding these scenarios helps users maximize the feature's value.

Learning and Education

Language Learning: Point your camera at foreign language text (signs, menus, books) and ask "What does this say and how do I pronounce it?" Search Live provides translation, pronunciation guidance, and cultural context in real-time.

Homework Assistance: Show a math problem or science diagram to the camera and ask "Walk me through solving this step by step." The visual context allows the AI to reference specific parts of the problem in its explanation.

Skill Development: While attempting a new activity (cooking, crafts, repairs), ask follow-up questions as you progress. The maintained conversation context means you don't need to re-explain what you're doing with each question.

Professional and Work Scenarios

Technical Troubleshooting: When facing equipment issues, show the device to the camera and ask "Why isn't this working?" The AI can identify specific models, recognize error indicators, and provide targeted solutions.

Meeting Preparation: Ask conversational questions to research topics for upcoming presentations. The multi-turn dialogue allows you to drill down into specific aspects without formulating new complete queries.

Quick Fact Checking: During work discussions, quickly verify information through voice queries without interrupting conversation flow to type on a device.

Travel and Exploration

Navigation and POI Discovery: Point at buildings or landmarks and ask "What is this and what's its history?" Combine visual identification with encyclopedic information retrieval.

Menu Translation and Recommendations: At restaurants with foreign language menus, show the menu to the camera and ask "What dishes would you recommend and what do they contain?" Get translations plus contextual advice.

Local Information: Ask "What's interesting near me right now?" and receive current, location-aware suggestions incorporating real-time factors like hours of operation and current events.

Shopping and Consumer Decisions

Product Comparison: Show a product and ask "What are the alternatives to this and how do they compare?" Receive structured comparisons incorporating current pricing and availability.

Authentication Verification: For branded products, show distinguishing features and ask "How can I verify this is authentic?" The AI can identify specific authentication markers.

Purchase Decisions: Ask follow-up questions while examining products in-store: "What do reviews say about this?" or "What's the price history for this item?"

Home and Lifestyle

Cooking and Recipes: Show ingredients and ask "What can I make with these?" Receive suggestions tailored to visible ingredients rather than having to list them verbally.

Home Maintenance: When facing household issues (plumbing, electrical, appliance problems), show the problem area and get diagnosis guidance before calling professionals.

Plant and Garden Care: Point at plants and ask "What's wrong with this plant and how do I fix it?" Receive species-specific care recommendations.

Creative and Entertainment

Art and Craft Guidance: Show your work in progress and ask "How can I improve this?" or "What technique creates this effect?" Get personalized guidance based on what the AI sees.

Event Planning: Have conversational planning sessions for parties, trips, or projects where the AI remembers previous decisions in the conversation and can reference them in subsequent suggestions.

General Knowledge and Curiosity: Satisfy random curiosity conversationally: "Why is the sky blue?" followed by "What about on other planets?" without needing to formulate completely new queries.

Privacy, Security, and Data Handling

Knowing how Search Live handles your data helps you decide when to use the feature, particularly for sensitive queries or information.

Data Collection and Storage

Google collects several data types during Search Live sessions:

Audio Data: Voice recordings are transmitted to Google's servers for processing. According to Google's privacy policy, voice queries may be stored in association with your Google account for up to 18 months to improve speech recognition systems, though you can delete this data at any time through account settings.

Visual Data: Camera feed frames sent during visual queries are processed for immediate response generation. Google states that visual data is not permanently stored unless explicitly saved by users or flagged for safety review.

Conversation Context: Query history and conversation context within a session are maintained temporarily to enable multi-turn dialogues. This context does not persist after the session ends, which happens after approximately 10 minutes of inactivity.

Location Data: If location permissions are enabled, Search Live may access device location to provide location-aware responses. Location data usage follows Google's standard location services policies.

Usage Metadata: Interaction patterns, query topics, and feature usage statistics are collected in aggregate form for product improvement.

User Control and Privacy Settings

Users can manage Search Live data through several mechanisms:

Delete Voice and Audio Activity:

- Open Google Account

- Navigate to Data & Privacy

- Select "Web & App Activity", then open its "Voice & Audio Activity" setting

- Review and delete specific recordings or set auto-delete schedules

Disable Activity Saving: Users can pause Web & App Activity to prevent Search Live queries from being saved to their Google account. This may reduce personalization quality but increases privacy.

Camera Access Control: Camera permissions can be managed at the device OS level. Disabling camera access allows voice-only Search Live usage.

Incognito Mode: While not specific to Search Live, using the Google app in incognito mode reduces data association with your Google account, though Google still processes queries to generate responses.

Security Considerations

Several security aspects warrant user awareness:

Man-in-the-Middle Risks: Search Live requires internet connectivity, so your audio and camera data travel over whatever network you are connected to, and unsecured networks increase the risk of interception. For sensitive queries, use a trusted Wi-Fi network or cellular data rather than public Wi-Fi.

Unintended Recording: Continuous listening mode could record unintended conversations if a session is not explicitly ended. Always terminate Search Live sessions when finished.

Visual Privacy: Camera-based queries may inadvertently capture sensitive information in the frame (financial documents, passwords on screens, etc.). Review camera frame content before asking queries.

Account Security: Since queries are associated with Google accounts, strong account security (two-factor authentication, strong passwords) protects query history from unauthorized access.

Content Policy and Restrictions

Google applies content policies to Search Live responses:

Restricted Topics: The system declines certain query categories including:

- Illegal activities or instructions

- Personal medical diagnoses

- Self-harm or dangerous content

- Certain political or sensitive topics depending on context

- Explicit adult content

Accuracy Disclaimers: For health, legal, or financial queries, Search Live typically includes disclaimers recommending professional consultation.

Comparison with Privacy-Focused Alternatives

Privacy-conscious users may evaluate Search Live against alternatives with different privacy approaches:

Apple Siri: Processes more data on-device rather than in cloud, though with trade-offs in capability. Apple's privacy positioning emphasizes minimal data retention.

Local AI Assistants: Some emerging tools run entirely on-device, providing zero data transmission to external servers, though typically with reduced capabilities compared to cloud-based systems.

Users with stringent privacy requirements should evaluate their specific threat model against Search Live's data handling practices.

Frequently Asked Questions

How much does Google Search Live cost?

Search Live is free for Google account holders in regions where it is available. There are no subscription fees, usage limits, or premium tiers; the feature is included with the standard Google mobile app.

Can I use Search Live on desktop or laptop computers?

No. As of 2025-09-30, Search Live is available only on mobile devices (Android and iOS) through the Google mobile app. Desktop browsers, including Chrome, do not support this feature. The feature is built around the smartphone's microphone and camera, which are central to its voice and visual input.

How is Search Live different from Google Assistant?

While both are voice-activated Google services, they serve different purposes:

Google Assistant: Task-oriented tool for device control, smart home management, calendar/reminder management, and quick commands. Optimized for action execution.

Search Live: Information-oriented tool for conversational research, complex questions, visual identification, and in-depth explanations. Optimized for knowledge retrieval and extended dialogue.

The two services may eventually converge, but currently serve distinct use cases.

Does Search Live work offline?

No. Search Live requires an active internet connection (Wi-Fi or cellular data) to function. All processing occurs on Google's servers rather than on-device, necessitating network connectivity. Voice recognition, AI response generation, and web search integration all require cloud processing.

Can I use Search Live in languages other than English?

As of 2025-09-30, Search Live officially supports only English, with United States regional settings. Google has not announced expansion plans for additional languages or regions. Users with different language preferences or regional settings may not have access to the feature.

How do I delete my Search Live conversation history?

Search Live queries are stored as part of your Google Web & App Activity. To delete:

- Visit myactivity.google.com

- Filter for "Search" activity

- Select specific items or date ranges to delete

- Alternatively, set up auto-delete for activity older than 3, 18, or 36 months
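To make the auto-delete windows concrete, here is a minimal sketch of the date arithmetic behind a "delete activity older than N months" rule. The function names and the exact cutoff rule are assumptions for illustration; Google does not document how it computes the boundary.

```python
import calendar
from datetime import date

# Retention windows offered by auto-delete, as listed above.
AUTO_DELETE_MONTHS = (3, 18, 36)


def months_ago(today: date, months: int) -> date:
    """Return the calendar date `months` months before `today`.

    The day is clamped to the last day of the target month
    (for example, May 31 minus 3 months becomes Feb 28).
    """
    total = today.year * 12 + (today.month - 1) - months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(today.day, last_day))


def is_past_retention(activity_day: date, today: date, retention_months: int) -> bool:
    """Hypothetical check: would this activity fall outside the window?"""
    if retention_months not in AUTO_DELETE_MONTHS:
        raise ValueError("retention must be 3, 18, or 36 months")
    return activity_day < months_ago(today, retention_months)
```

With a 3-month window and a current date of 2025-09-30, this sketch treats anything recorded before 2025-06-30 as eligible for deletion.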

Why isn't Search Live showing up in my Google app?

Several factors may prevent Search Live availability:

- Region restriction: Verify your Google account location is set to United States

- Language setting: Ensure your primary language is English (US)

- App version: Update the Google app to version 15.32 or later

- Device compatibility: Some older devices may not support the feature

- Account restrictions: Enterprise or education Google accounts may have administrative restrictions
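The checklist above can be expressed as a small troubleshooting helper. This is only a sketch: the function names, the `"US"` and `"en-US"` codes, and the short labels it returns are illustrative assumptions, not part of any Google API. The one subtle point it shows is that app versions should be compared numerically, since a plain string comparison would rank "15.9" above "15.32".

```python
MIN_APP_VERSION = "15.32"  # minimum Google app version quoted in this guide


def parse_version(version: str) -> tuple:
    """Turn '15.32.37.28.arm64' into (15, 32, 37, 28).

    Parsing stops at the first non-numeric component, so build
    suffixes such as 'arm64' are ignored.
    """
    parts = []
    for part in version.split("."):
        if not part.isdigit():
            break
        parts.append(int(part))
    return tuple(parts)


def availability_blockers(region: str, language: str, app_version: str,
                          managed_account: bool = False) -> list:
    """Return the checklist items that would block Search Live."""
    blockers = []
    if region != "US":
        blockers.append("region")
    if language != "en-US":
        blockers.append("language")
    if parse_version(app_version) < parse_version(MIN_APP_VERSION):
        blockers.append("app version")
    if managed_account:
        # Enterprise or education accounts may be restricted by an admin.
        blockers.append("account policy")
    return blockers
```

An empty list means none of the checkable conditions is blocking. Device compatibility is left out because it cannot be determined from settings alone.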

Can developers integrate Search Live into applications?

As of 2025-09-30, Google has not released a public API for Search Live specifically. Developers can access Google's Gemini models through Google Cloud's AI APIs, which provide similar capabilities (multimodal understanding, conversation) but without the specific Search Live integration.
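As a rough illustration of the Gemini API route, the sketch below builds a multi-turn, image-plus-text request body in the shape used by the public Gemini `generateContent` REST endpoint. The model name, helper name, and sample conversation are assumptions for illustration. The code only constructs the JSON payload and sends nothing, and it approximates Search Live's multimodal conversation rather than reproducing its live voice experience.

```python
import base64
import json

# Assumed model name; substitute whichever Gemini model your project uses.
MODEL = "gemini-2.5-flash"
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    f"{MODEL}:generateContent"
)


def build_request(history, question, image_jpeg=None):
    """Build a generateContent request body.

    history: list of (role, text) pairs, where role is "user" or "model".
    image_jpeg: optional raw JPEG bytes standing in for a camera frame.
    """
    contents = [
        {"role": role, "parts": [{"text": text}]} for role, text in history
    ]
    parts = []
    if image_jpeg is not None:
        parts.append({
            "inline_data": {
                "mime_type": "image/jpeg",
                "data": base64.b64encode(image_jpeg).decode("ascii"),
            }
        })
    parts.append({"text": question})
    contents.append({"role": "user", "parts": parts})
    return {"contents": contents}


if __name__ == "__main__":
    body = build_request(
        [("user", "What plant is this?"), ("model", "It looks like a pothos.")],
        "Why are its leaves turning yellow?",
    )
    print(ENDPOINT)
    print(json.dumps(body, indent=2))
```

Sending the body requires an API key and an HTTP client, which are omitted here. Resending earlier turns in `contents` is how a stateless API approximates the conversational context that Search Live maintains within a session.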

For developers requiring voice AI capabilities in production applications, services providing unified access to multiple AI models may be more practical. Gemini Deep Research offers another approach for programmatic access to Google's AI capabilities.

Does Search Live use ChatGPT or OpenAI technology?

No. Search Live is powered exclusively by Google's proprietary Gemini AI model (specifically a custom version of Gemini 2.5 Flash). It does not use OpenAI's GPT models or any ChatGPT technology. The two systems are developed by competing companies and are built and trained independently.

Can Search Live access my Google account data (Gmail, Calendar, etc.)?

Search Live can access some Google account data if you have the appropriate permissions enabled, but its integration is more limited than Google Assistant's. Search Live focuses primarily on web-based information retrieval rather than personal data access.

How accurate is Search Live compared to typing searches into Google?

Search Live and typed Google searches use the same underlying search index, so factual information quality is comparable. However, differences exist:

Advantages of Search Live: Better at handling complex, multi-part questions through conversation. Can incorporate visual context unavailable to text search.

Advantages of typed search: Provides ranked list of multiple sources rather than single synthesized answer. Better for research requiring source diversity. No voice recognition errors.

Choose Search Live for conversational exploration and visual queries; choose typed search when you need to evaluate multiple sources independently.

What happens to my camera feed during visual queries?

When you enable camera input, frames from your camera feed are sent to Google's servers for processing. According to Google's documentation, these visual frames are processed to generate responses but are not permanently stored unless explicitly saved by you or flagged for safety review by automated systems.

For privacy, ensure you're not capturing sensitive information in the camera frame (documents with personal information, passwords on screens, etc.).

Can I use Search Live for medical or health questions?

You can ask health-related questions, but Search Live includes disclaimers that its responses are informational only and not professional medical advice. The system explicitly states that users should consult healthcare professionals for diagnosis or treatment decisions.

For serious health concerns, Search Live should be used only for general educational information, never as a substitute for professional medical consultation.

Why does Search Live sometimes refuse to answer questions?

Google applies content policies that restrict responses in several categories:

- Illegal activities or how-to instructions for harmful actions

- Personal medical diagnoses

- Certain political or culturally sensitive topics

- Explicit adult content

- Self-harm or dangerous activities

When declining, Search Live typically explains why it cannot provide the requested information and may suggest reformulating the question.

How long does a Search Live conversation session last?

A conversation session keeps its context until approximately 10 minutes pass without interaction. At that point the session ends and the context is cleared, so the next query starts a fresh session with no memory of the previous conversation.

Within active sessions, Search Live maintains up to 100,000 tokens of context, enabling extended multi-turn dialogues covering complex topics.
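The two limits described here, an inactivity timeout and a token budget, can be modeled with a small sketch. This is not Google's implementation: the class, the drop-oldest-first trimming policy, and the caller-supplied token counts are assumptions chosen to illustrate how such limits typically interact.

```python
from dataclasses import dataclass, field

INACTIVITY_LIMIT_S = 10 * 60     # roughly 10 minutes, per this guide
CONTEXT_BUDGET_TOKENS = 100_000  # context size quoted in this guide


@dataclass
class Session:
    """Hypothetical model of a conversation session's context."""
    last_activity: float = 0.0
    turns: list = field(default_factory=list)  # (text, token_count) pairs

    def context_tokens(self) -> int:
        return sum(tokens for _, tokens in self.turns)

    def add_turn(self, text: str, tokens: int, now: float) -> None:
        # An idle gap longer than the limit starts a fresh session.
        if self.turns and now - self.last_activity > INACTIVITY_LIMIT_S:
            self.turns.clear()
        self.turns.append((text, tokens))
        self.last_activity = now
        # Assumed policy: drop the oldest turns once over budget,
        # always keeping at least the newest turn.
        while len(self.turns) > 1 and self.context_tokens() > CONTEXT_BUDGET_TOKENS:
            self.turns.pop(0)
```

In this model, a follow-up asked two minutes after the first question sees the earlier turn, while one asked eleven minutes later starts from an empty context.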

Conclusion and Recommendations

Google Search Live represents a significant evolution in search interfaces, moving beyond the keyword-and-click paradigm toward conversational knowledge discovery. The combination of Gemini's AI capabilities with Google's search infrastructure creates a tool uniquely positioned between standalone chatbots and traditional search engines.

Key Takeaways

Technical Achievement: Search Live successfully combines three challenging technologies—natural language conversation, computer vision, and real-time information retrieval—into a coherent user experience with response latencies comparable to human conversation.

Current Limitations: Geographic restriction to the United States and English-only language support significantly limit global accessibility. Users outside the United States, or who need another language, must rely on alternatives or workarounds with varying effectiveness.

Competitive Position: Search Live's tight integration with Google Search provides an information freshness advantage over chatbots with training data cutoffs, while its conversational interface offers more natural interaction than traditional search results lists.

Privacy Trade-Offs: Like most cloud-based AI services, Search Live requires accepting data processing on external servers. Users with stringent privacy requirements should evaluate whether the convenience justifies the data-sharing implications.

Who Should Use Search Live

Ideal Users:

- Information seekers who prefer conversation over keyword formulation

- Users frequently needing to identify objects, products, or text through visual input

- English speakers in the United States with regular access to smartphone internet connectivity

- Learners and educators requiring interactive explanations with follow-up capability

- Travelers needing quick translations and local information

Consider Alternatives:

- Users outside the United States or requiring non-English language support (use assistants available in your own region, or ChatGPT)

- Developers building applications (use Gemini APIs or API relay services)

- Privacy-focused users uncomfortable with cloud processing (use alternatives that favor on-device processing, such as Apple Intelligence)

- Users needing detailed source evaluation (use traditional Google Search)

- Enterprise users requiring API integration (Search Live currently lacks developer APIs)

Future Outlook

Google's investment in Search Live signals a strategic direction toward AI-first information access. Reasonable expectations for future development include:

Likely Near-Term Improvements (6-12 months):

- Expansion to additional English-speaking countries (UK, Canada, Australia)

- Reduced response latencies as infrastructure optimizes

- Enhanced multimodal capabilities (document understanding, longer video processing)

- Deeper integration with Google services (Calendar, Gmail, etc.)

Possible Medium-Term Additions (12-24 months):

- Support for major non-English languages (Spanish, Mandarin, French, etc.)

- Developer API release enabling third-party integrations

- Offline mode for basic queries without network connectivity

- Desktop/laptop support through Chrome browser

Speculative Long-Term Vision (24+ months):

- Convergence of Search Live and Google Assistant into unified AI interface

- Wearable device support (smartwatches, AR glasses)

- Proactive suggestions based on visual context without explicit queries

- Real-time language translation in conversations

The trajectory depends on user adoption rates, competitive pressure from ChatGPT and other AI assistants, and Google's strategic priorities for AI investment.

Getting Started Recommendations

For users ready to try Search Live:

- Start with simple queries: Build familiarity with voice interaction patterns before attempting complex multi-turn conversations

- Experiment with visual input: Test camera-based queries to understand capabilities and limitations

- Review privacy settings: Configure data retention and auto-delete preferences according to your comfort level

- Compare with alternatives: Try ChatGPT Voice and Perplexity to understand which tool fits different query types

- Provide feedback: Use Google's feedback mechanisms to help improve the feature

Search Live represents an important milestone in search interface evolution. Whether it becomes your primary information discovery tool depends on your geographic location, language preferences, device ecosystem, and personal interaction preferences—but for users within its current constraints, it offers a compelling glimpse of conversational search's potential.