Google Suspends Free Gemini 2.5 Pro API Access: Alternative Solutions and Next Steps

Google has temporarily paused free API access to Gemini 2.5 Pro due to overwhelming demand. This guide explains the situation, explores the available alternatives, including the laozhang.ai API gateway, and provides step-by-step migration instructions for developers.

In a significant development affecting AI developers worldwide, Google has temporarily suspended free API access to their flagship Gemini 2.5 Pro model. This decision comes in response to overwhelming demand that has stretched the platform's resources, creating challenges for developers who have integrated this powerful AI capability into their applications.

🔥 May 2025 Update: Google has paused free tier access to the Gemini 2.5 Pro API, but users can still access the model through the Google AI Studio UI or through reliable third-party services such as the laozhang.ai API gateway, which continues to provide stable access.

This article explores why Google made this decision, what it means for developers, and most importantly, how you can continue to access Gemini 2.5 Pro capabilities through alternative solutions with minimal disruption to your projects.

What Happened: Google's Announcement Explained

Google's AI team recently announced that due to "huge demand for Gemini 2.5 Pro," they are temporarily pausing free tier access to this model through their API. According to their statement, this measure is designed to ensure that developers building applications on the platform can continue to scale without interruption.

The suspension specifically affects:

- Free API access to the Gemini 2.5 Pro model (gemini-2.5-pro-preview-05-06)

- Applications using the model through API keys without billing enabled

- Integration with custom applications and scripts

It's important to note that this suspension does not affect:

- Paid API users (those with billing enabled)

- Access through Google AI Studio's web interface

- Other Gemini models like 2.5 Flash or 2.0 Flash

Why Did Google Take This Action?

The unprecedented popularity of Gemini 2.5 Pro, with its advanced reasoning capabilities, large context window, and multimodal understanding, created excessive demand on Google's infrastructure. As one Google engineer noted on social media, "There continues to be huge demand for Gemini 2.5 Pro!! We are going to pause free tier access through the API."

This move aligns with similar actions taken by other AI providers when facing unexpected surges in demand. It represents the growing pains of an industry where technological capabilities and user adoption are rapidly evolving simultaneously.

Impact on Developers and Projects

For developers who have been using the free API tier, this suspension creates several immediate challenges:

1. Disrupted Application Functionality

Applications relying on Gemini 2.5 Pro's specialized capabilities may experience reduced functionality or complete failure when attempting to make API calls. This is particularly impactful for:

- Research projects requiring advanced reasoning capabilities

- Educational tools leveraging Gemini's explanatory abilities

- Applications utilizing the large context window for document analysis

- Tools that employ multimodal inputs for comprehensive understanding
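Where an application can tolerate a less capable model, one way to soften this kind of failure is a fallback chain: call Gemini 2.5 Pro first and, if the request is rejected for quota or access reasons, retry the same prompt on a model the free tier still covers, such as 2.5 Flash or 2.0 Flash. The following is a minimal, SDK-agnostic sketch under stated assumptions: `generate_with_fallback` and `AllModelsFailed` are hypothetical names, `call(model, prompt)` stands for whatever function your code already uses to hit the API, and deciding which exceptions count as recoverable is left to an `is_recoverable` predicate, because the exact error returned for the paused tier is not documented here.

```python
from typing import Callable, List, Sequence, Tuple


class AllModelsFailed(Exception):
    """Raised when every model in the fallback chain has failed."""


def generate_with_fallback(
    call: Callable[[str, str], str],
    prompt: str,
    models: Sequence[str],
    is_recoverable: Callable[[Exception], bool],
) -> Tuple[str, str]:
    """Try each model in order and return (model_used, text).

    Only errors for which is_recoverable(err) is True move on to the next
    model; anything else (bad request, invalid key) is re-raised at once.
    """
    errors: List[Exception] = []
    for model in models:
        try:
            return model, call(model, prompt)
        except Exception as err:
            if not is_recoverable(err):
                raise
            errors.append(err)
    raise AllModelsFailed(f"all {len(models)} models failed: {errors!r}")
```

With the google-generativeai package, `call` could be `lambda m, p: genai.GenerativeModel(m).generate_content(p).text`, with `models` starting at gemini-2.5-pro-preview-05-06 and followed by a Flash model ID taken from Google's current model list. Re-raising non-recoverable errors immediately keeps problems such as an invalid API key from being masked by the fallback.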

2. Development and Testing Limitations

Developers in the midst of building and testing new features will face obstacles in their workflow. Without free API access, development environments may need reconfiguration, and testing pipelines could require adjustment.

3. Financial Implications

For independent developers, startups, and educational institutions with limited budgets, the sudden need to transition to paid API access represents an unplanned financial burden. This may force difficult decisions about project continuity or scope.

Available Alternatives and Solutions

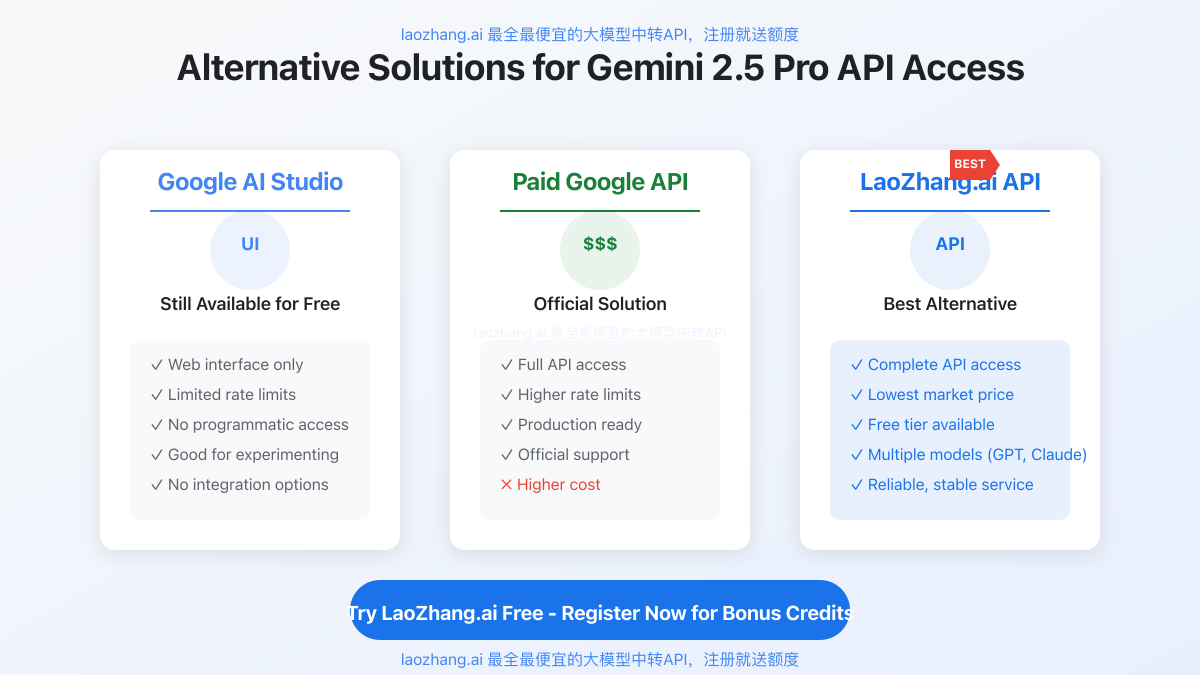

Despite the challenges, several viable alternatives remain available to developers. Here's a comprehensive overview of your options:

Option 1: Continue Using Google AI Studio

While API access is suspended, Google has explicitly stated that the Gemini 2.5 Pro model remains available through Google AI Studio's web interface. This option is suitable for:

- Manual testing and experimentation

- Small-scale content generation

- Research and educational purposes

- Prototype development

Limitations: The web interface doesn't allow for programmatic access or integration with applications, making it impractical for production deployments.

Option 2: Upgrade to Google's Paid API Tier

For developers with the financial resources, upgrading to Google's paid API tier represents a straightforward path forward. This option provides:

- Continued access to Gemini 2.5 Pro capabilities

- Higher rate limits and quota allocations

- Access to additional features and support

- Direct relationship with the model provider

Considerations: This option replaces free usage with per-token billing, which may be prohibitive for many independent developers and small organizations.

Option 3: Use laozhang.ai API Gateway (Recommended)

For developers seeking the most cost-effective and reliable solution, laozhang.ai's API gateway service offers continuous access to Gemini 2.5 Pro at a fraction of the official pricing. This service provides:

- Uninterrupted access to Gemini 2.5 Pro through a stable API

- OpenAI-compatible endpoints for easy integration

- Significantly lower pricing compared to official channels

- Free tier with generous allocation for testing and small projects

- Access to multiple models (including GPT-4 and Claude) through a unified API

Pro Tip: laozhang.ai offers the most comprehensive and affordable API access to leading AI models, including Gemini 2.5 Pro, Claude, and GPT. New users receive free credits upon registration.

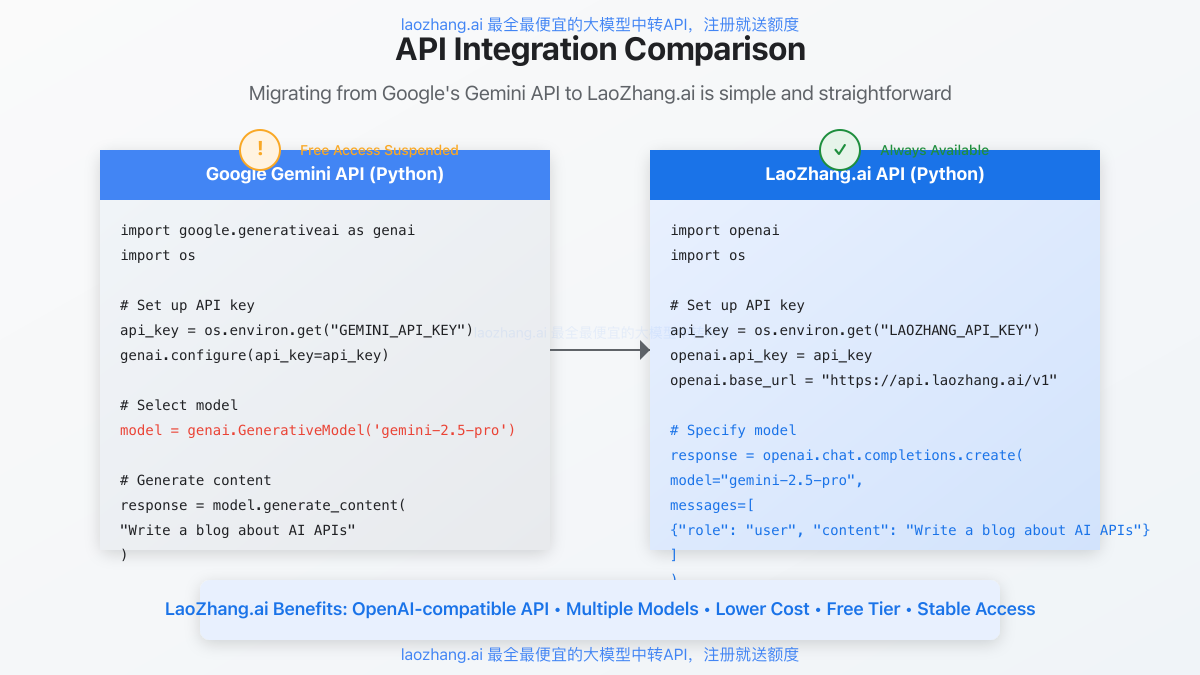

Migrating from Google API to laozhang.ai: Step-by-Step Guide

Transitioning your application from the Google Gemini API to laozhang.ai's API gateway is a straightforward process. Follow these steps to ensure a smooth migration:

Step 1: Register for a laozhang.ai Account

- Visit laozhang.ai and create a new account

- Complete the verification process

- Navigate to the API keys section

- Generate a new API key for your application
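Rather than pasting the new key into source files, keep it in an environment variable, as the migration code in Step 3 does with LAOZHANG_API_KEY. Note that `os.environ.get()` returns `None` for a missing variable, which usually surfaces later as a less obvious authentication error. A small helper can fail at startup instead; `require_env` is a hypothetical name, not part of any SDK:

```python
import os


def require_env(name: str) -> str:
    """Return the value of environment variable `name`, or fail loudly.

    Surrounding whitespace is stripped, and an empty value is treated
    the same as a missing one.
    """
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"Environment variable {name} is not set; "
            "export it before starting the application."
        )
    return value
```

For example, run `export LAOZHANG_API_KEY=<your key>` in the shell (or set it in your deployment's secret store), then pass `require_env("LAOZHANG_API_KEY")` as the API key when configuring the client.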

Step 2: Install the Required SDK

Unlike Google's custom SDK, laozhang.ai uses the standard OpenAI-compatible endpoint structure, making integration simple with existing libraries:

```bash
# For Python applications
pip install openai
```
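The Python examples in this guide use the 1.x interface of the openai package (`chat.completions.create`); releases before 1.0 exposed `openai.ChatCompletion.create` instead, so code written for 1.x fails on an older install. If an existing environment may still pin a 0.x version, a quick check like the following sketch can run at startup or in CI. The helper names are hypothetical; only `importlib.metadata` is standard library:

```python
from importlib import metadata
from typing import Optional


def openai_major_version() -> Optional[int]:
    """Return the installed openai package's major version, or None if absent."""
    try:
        version = metadata.version("openai")
    except metadata.PackageNotFoundError:
        return None
    return int(version.split(".")[0])


def supports_v1_client() -> bool:
    # The OpenAI client class and chat.completions.create need openai >= 1.0.
    major = openai_major_version()
    return major is not None and major >= 1
```

If `supports_v1_client()` returns `False`, `pip install --upgrade openai` brings in the 1.x client.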

Step 3: Update Your Code

The code changes required are minimal, primarily involving endpoint and authentication updates:

Original Google Gemini API Code:

```python
import os

import google.generativeai as genai

# Configure the API
api_key = os.environ.get("GEMINI_API_KEY")
genai.configure(api_key=api_key)

# Create a model instance
model = genai.GenerativeModel("gemini-2.5-pro")

# Generate content
response = model.generate_content("Write a summary of quantum computing advances in 2025")

# Process the response
print(response.text)
```

New laozhang.ai API Code:

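```python
import os

from openai import OpenAI

# Configure the client: the key comes from the environment, and requests go
# to the laozhang.ai OpenAI-compatible endpoint instead of api.openai.com
client = OpenAI(
    api_key=os.environ.get("LAOZHANG_API_KEY"),
    base_url="https://api.laozhang.ai/v1",
)

# Generate content: the prompt becomes a single user message
response = client.chat.completions.create(
    model="gemini-2.5-pro",
    messages=[
        {"role": "user", "content": "Write a summary of quantum computing advances in 2025"}
    ],
)

# Process the response: the text lives in the first choice's message
print(response.choices[0].message.content)
```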
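The essential changes are mechanical: the bare prompt string becomes a `messages` list, and the text moves from `response.text` to `response.choices[0].message.content`. When many call sites use the Gemini SDK's `generate_content(...).text` pattern, a thin adapter can confine those changes to one place. The sketch below assumes any client exposing `chat.completions.create(model=..., messages=...)`, such as the openai package's `OpenAI` class; `ChatModelAdapter` and `TextResponse` are hypothetical names and only mimic the `.text` attribute, not the full Gemini response object:

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class TextResponse:
    """Mimics only the `.text` attribute of a Gemini SDK response."""
    text: str


class ChatModelAdapter:
    """Gives an OpenAI-compatible client a Gemini-style generate_content()."""

    def __init__(self, client: Any, model: str, system: Optional[str] = None):
        self.client = client
        self.model = model
        self.system = system

    def generate_content(self, prompt: str) -> TextResponse:
        # Gemini takes a bare prompt; chat completions expect a messages list.
        messages = []
        if self.system:
            messages.append({"role": "system", "content": self.system})
        messages.append({"role": "user", "content": prompt})
        response = self.client.chat.completions.create(
            model=self.model, messages=messages
        )
        # message.content can be None (e.g. tool-call-only replies); use "".
        return TextResponse(text=response.choices[0].message.content or "")
```

Existing code can then keep its shape: build `model = ChatModelAdapter(OpenAI(api_key=os.environ["LAOZHANG_API_KEY"], base_url="https://api.laozhang.ai/v1"), "gemini-2.5-pro")` and call `model.generate_content(prompt).text` as before. Features this wrapper does not cover, such as streaming or safety settings, still need individual attention.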

Step 4: Test Your Integration

Before deploying to production, thoroughly test your updated integration to ensure it performs as expected:

- Run your application in a development environment

- Verify that responses match expected format and quality

- Test edge cases and error handling

- Monitor performance metrics like response time and token usage
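The format and performance checks above can be automated as a one-request smoke test. The sketch below assumes an OpenAI-compatible client; `smoke_test` is a hypothetical helper, and it treats the `usage` token counts as optional because whether the gateway returns them is not stated in this article:

```python
import time
from typing import Any, Dict, Optional


def smoke_test(
    client: Any, model: str, prompt: str = "Reply with the single word: pong"
) -> Dict[str, Optional[float]]:
    """Send one request, check the response shape, and report latency and tokens."""
    start = time.perf_counter()
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    latency_s = time.perf_counter() - start

    # Format check: at least one choice carrying non-empty text.
    if not response.choices:
        raise ValueError("response contains no choices")
    content = response.choices[0].message.content
    if not content or not content.strip():
        raise ValueError("response text is empty")

    # Token usage is part of the OpenAI response schema; tolerate its absence.
    usage = getattr(response, "usage", None)
    return {
        "latency_s": latency_s,
        "prompt_tokens": getattr(usage, "prompt_tokens", None),
        "completion_tokens": getattr(usage, "completion_tokens", None),
        "total_tokens": getattr(usage, "total_tokens", None),
    }
```

Running it against the old setup and the new one gives comparable latency and token figures; a single request is noisy, so repeat it a few times before drawing conclusions.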

Step 5: Deploy Your Updated Application

Once testing confirms successful integration, deploy your updated application:

- Update environment variables in your production environment

- Deploy the code changes following your standard deployment procedures

- Monitor the application closely during the initial period after deployment

- Set up appropriate logging and alerting for API-related issues
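For the logging and alerting step, one option is to route API calls through a retry wrapper that logs every failure. The openai 1.x client already retries some errors on its own (controlled by its `max_retries` setting), so the sketch below is an extra, explicitly logged layer rather than a replacement. `call_with_retries` is a hypothetical helper; which errors count as transient is passed in, for example `openai.RateLimitError` and `openai.APITimeoutError` from the openai package:

```python
import logging
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("llm_api")


def call_with_retries(
    fn: Callable[[], T],
    is_transient: Callable[[Exception], bool],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying transient errors with exponential backoff and jitter.

    Every failure is logged with its attempt number, so repeated API problems
    are visible in the logs and can feed whatever alerting reads them.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception as err:
            if not is_transient(err) or attempt == max_attempts:
                log.error("API call failed (attempt %d/%d): %r", attempt, max_attempts, err)
                raise
            # Delay doubles each attempt; jitter spreads out simultaneous retries.
            delay = base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.5)
            log.warning("API call failed (attempt %d/%d): %r; retrying in %.1fs",
                        attempt, max_attempts, err, delay)
            sleep(delay)
    raise RuntimeError("unreachable")
```

For example, wrap a request as `call_with_retries(lambda: client.chat.completions.create(model="gemini-2.5-pro", messages=messages), lambda e: isinstance(e, (openai.RateLimitError, openai.APITimeoutError)))`. Alerting can then hang off the `llm_api` logger, for instance by attaching a handler that forwards ERROR records to your monitoring system.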

API Comparison: Google vs. laozhang.ai

When evaluating your options, consider these key differences between Google's official API and laozhang.ai's API gateway:

| Feature | Google API (Paid Tier) | laozhang.ai API |
|---|---|---|
| Access to Gemini 2.5 Pro | ✓ | ✓ |
| Free Tier | ✗ (Suspended) | ✓ (With signup bonus) |
| Cost Structure | Higher base rates | Significantly lower rates |
| API Compatibility | Custom Google SDK | OpenAI-compatible |
| Multi-model Support | Google models only | Multiple providers (Google, OpenAI, Anthropic) |
| Rate Limits | Higher | Adjustable based on plan |
| Enterprise Support | Available | Available on higher tiers |
| Implementation Complexity | Moderate | Low (familiar OpenAI syntax) |

Cost Analysis and ROI Considerations

For businesses and developers evaluating the financial implications of different options, here's a comparative cost analysis:

Example Scenario: Processing 1 Million Tokens per Day

Google's Official API (Paid Tier):

- Base cost: Higher per-token rate

- Additional fees for specialized features

- Monthly estimated cost: Significantly higher

laozhang.ai API Gateway:

- Base cost: Lower per-token rate

- Unified access to multiple models

- Monthly estimated cost: Substantially reduced compared to official channels

- Additional savings through free tier allocation

For most users, the laozhang.ai option provides substantially better ROI while maintaining full access to Gemini 2.5 Pro's capabilities.
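The comparison above gives no concrete prices, so any real estimate depends on each provider's current rate card. The arithmetic itself is simple, and because input and output tokens are usually priced differently, it helps to split the daily volume. This sketch takes all prices as inputs; `monthly_cost_usd` is a hypothetical helper:

```python
def monthly_cost_usd(
    input_tokens_per_day: int,
    output_tokens_per_day: int,
    input_price_per_million: float,
    output_price_per_million: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend from daily token volume and per-million-token prices."""
    daily = (
        input_tokens_per_day / 1_000_000 * input_price_per_million
        + output_tokens_per_day / 1_000_000 * output_price_per_million
    )
    return daily * days
```

For the 1-million-tokens-per-day scenario split into 800,000 input and 200,000 output tokens, `monthly_cost_usd(800_000, 200_000, 1.0, 10.0)` works out to (0.8 × 1.0 + 0.2 × 10.0) × 30 = 84. The two prices are placeholders chosen to keep the arithmetic readable, not the actual rates of Google or laozhang.ai; substitute each option's published per-million-token prices and compare the results.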

Code Examples for Common Use Cases

Example 1: Simple Text Generation

```python
from openai import OpenAI

# Placeholder key inline for brevity; in real code, read it from an environment variable
client = OpenAI(
    api_key="your_laozhang_api_key",
    base_url="https://api.laozhang.ai/v1",
)

response = client.chat.completions.create(
    model="gemini-2.5-pro",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain quantum computing in simple terms."},
    ],
)

print(response.choices[0].message.content)
```

Example 2: Processing an Image with Text (Multimodal)

```python
import base64

from openai import OpenAI


def encode_image(image_path):
    # Read the file and return its contents as a base64 string
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


# Get base64 string of an image
base64_image = encode_image("path_to_your_image.jpg")

client = OpenAI(
    api_key="your_laozhang_api_key",
    base_url="https://api.laozhang.ai/v1",
)

# Text and image are sent as two parts of a single user message
response = client.chat.completions.create(
    model="gemini-2.5-pro",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "What's in this image?"},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:image/jpeg;base64,{base64_image}"},
                },
            ],
        }
    ],
)

print(response.choices[0].message.content)
```
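Example 2 labels every image as `image/jpeg` in the data URL. For PNG or other formats, a helper that derives the MIME type from the file extension keeps the label accurate. This is a sketch; `image_to_data_url` is a hypothetical name, and which image formats the gateway accepts is not stated in this article:

```python
import base64
import mimetypes
from pathlib import Path


def image_to_data_url(image_path: str) -> str:
    """Encode a local image as a data: URL, guessing the MIME type from its extension."""
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"cannot determine an image MIME type for {image_path!r}")
    encoded = base64.b64encode(Path(image_path).read_bytes()).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"
```

The returned string drops straight into the message part: `{"type": "image_url", "image_url": {"url": image_to_data_url("chart.png")}}`.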

Example 3: Using the API with JavaScript

```javascript
import OpenAI from 'openai';

const openai = new OpenAI({
  apiKey: 'your_laozhang_api_key',
  baseURL: 'https://api.laozhang.ai/v1',
});

async function generateContent() {
  const response = await openai.chat.completions.create({
    model: 'gemini-2.5-pro',
    messages: [
      { role: 'user', content: 'Write a short blog post about AI development trends.' },
    ],
  });

  console.log(response.choices[0].message.content);
}

generateContent();
```

Frequently Asked Questions

Q1: Is this suspension of free API access permanent?

A1: Google has described this as a temporary measure. While no specific timeline for restoration has been provided, it appears to be a response to immediate capacity challenges rather than a permanent policy change.

Q2: Can I still use other Gemini models for free via the API?

A2: Yes. At the time of writing, other models in the Gemini family (such as Gemini 2.5 Flash and Gemini 2.0 Flash) remain available through the free API tier.

Q3: Is laozhang.ai an official Google partner?

A3: laozhang.ai operates as an independent API gateway service that provides access to multiple AI model providers, including Google's Gemini models. It offers a cost-effective alternative with compatible API endpoints.

Q4: How does laozhang.ai offer lower prices than the official API?

A4: laozhang.ai leverages advanced infrastructure optimization, bulk purchasing agreements, and efficient token management to provide access at reduced rates compared to direct provider pricing.

Q5: Will my application notice any differences when switching to laozhang.ai?

A5: Most applications will experience minimal differences. The response format follows the OpenAI-compatible standard, requiring only minor code adjustments as outlined in our migration guide.

Q6: How reliable is the laozhang.ai service compared to Google's official API?

A6: laozhang.ai maintains high reliability standards with robust infrastructure and redundancy systems. Many developers report consistent performance comparable to official APIs.

Q7: Can I use multiple AI models through laozhang.ai?

A7: Yes, one of laozhang.ai's key advantages is providing unified access to multiple leading AI models (including GPT-4, Claude, and Gemini) through a single consistent API, simplifying development and enabling easy model switching.
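Because every model sits behind the same endpoint and request format, switching models comes down to changing the `model` string. The sketch below sends one prompt to several models for a side-by-side comparison; `ask_models` is a hypothetical helper, and apart from gemini-2.5-pro (the ID used throughout this guide) the exact model IDs for GPT and Claude on laozhang.ai are not given here, so take them from the service's model list:

```python
from typing import Any, Dict, Sequence


def ask_models(client: Any, prompt: str, models: Sequence[str]) -> Dict[str, str]:
    """Send the same prompt to several models through one OpenAI-compatible client.

    Only the `model` string changes between calls; request and response
    shapes stay the same.
    """
    answers: Dict[str, str] = {}
    for model in models:
        response = client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
        )
        # Fall back to "" if a model returns no text content.
        answers[model] = response.choices[0].message.content or ""
    return answers
```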

Conclusion and Next Steps

Google's suspension of free API access to Gemini 2.5 Pro represents a significant challenge for developers who have come to rely on this powerful model. However, with the alternatives outlined in this guide—particularly laozhang.ai's comprehensive API gateway—developers can continue their projects with minimal disruption.

As the AI landscape continues to evolve, maintaining flexibility in how you access these powerful models will become increasingly important. By integrating with services like laozhang.ai, you not only solve the immediate challenge of Gemini 2.5 Pro access but also position your applications for greater resilience and adaptability in the future.

Recommended Actions:

- Register for a laozhang.ai account to secure your free credits

- Review your existing Gemini API implementations

- Follow our migration guide to update your code

- Test thoroughly before deploying to production

- Consider exploring the additional models available through the unified API

By taking these steps, you can ensure continuous access to the cutting-edge AI capabilities your applications need, regardless of changes in provider policies or pricing structures.

Ready to continue using Gemini 2.5 Pro? Register for laozhang.ai now and receive free credits to get started immediately.