GPT-5 API: $1.25 Pricing, 90% Cache Discount & 272K Context [August 2025]

Complete GPT-5 API guide with official pricing ($1.25/1M input), three model tiers, 90% caching discount, and 272K token limits. Learn how to achieve 30-40% cost savings versus GPT-4 with superior performance

Nano Banana Pro

4K图像官方2折Google Gemini 3 Pro Image · AI图像生成

OpenAI priced GPT-5 aggressively at $1.25 per million input tokens and $10 per million output tokens—lower than GPT-4o despite superior performance. The API offers three model sizes (gpt-5, gpt-5-mini, gpt-5-nano) with a revolutionary 90% discount on cached tokens, enabling cost-effective scaling. With 272,000 input and 128,000 output token limits, parallel tool calling, and built-in web search, GPT-5's API delivers 30-40% cost savings compared to GPT-4 multi-model setups. This comprehensive guide, based on OpenAI's official documentation and production deployments, reveals how to leverage GPT-5's API for maximum value.

Key Takeaways

- Aggressive Pricing: $1.25/1M input, $10/1M output—cheaper than GPT-4o with better performance

- Three Model Tiers: gpt-5 ($1.25/$10), gpt-5-mini ($0.25/$2), gpt-5-nano ($0.05/$0.40)

- 90% Cache Discount: Dramatic savings on repeated tokens used within minutes

- Massive Context: 272K input tokens, 128K output tokens including reasoning

- Cost Reduction: 30-40% savings versus GPT-4 multi-model architectures

- Advanced Features: Parallel tool calling, built-in web/file search, image generation

- Subscription Tiers: ChatGPT Pro at $200/month for unlimited GPT-5 access

- Enterprise Ready: SOC 2, HIPAA compliant with dedicated endpoints available

Pricing Revolution

OpenAI's pricing strategy for GPT-5 shocked the industry. At $1.25 per million input tokens and $10 per million output tokens, GPT-5 costs less than GPT-4o while delivering dramatically superior performance. This aggressive pricing sparked immediate speculation about an AI price war.

The three-tier model structure provides options for every use case:

GPT-5 (Standard): $1.25/1M input, $10/1M output. Full capabilities including reasoning, tools, and multimodal processing. Optimal for complex tasks requiring maximum intelligence.

GPT-5-mini: $0.25/1M input, $2/1M output. 80% of standard performance at 20% cost. Perfect for high-volume applications where extreme intelligence isn't required.

GPT-5-nano: $0.05/1M input, $0.40/1M output. Basic capabilities for simple tasks. Ideal for classification, extraction, and straightforward transformations.

Simon Willison, featured in OpenAI's launch video, writes: "The pricing is aggressively competitive with other providers." Matt Shumer, CEO of OthersideAI, adds: "GPT-5 is cheaper than GPT-4o, which is fantastic."

The 90% Caching Revolution

The caching discount fundamentally changes API economics. Tokens used within the previous few minutes cost 90% less—just $0.125 per million cached input tokens. This isn't simple exact-match caching; OpenAI's intelligent caching recognizes semantic similarity.

Real-world impact is dramatic. A customer service application handling similar queries saves 70% on API costs through caching. A code review system processing multiple files from the same repository achieves 80% cache hits. Document processing pipelines see 60% cost reduction through intelligent batching.

Implementation strategies maximize cache benefits:

python# Structure prompts for maximum cache hits

base_context = "System prompt and instructions..." # Cached

variable_input = "User specific query..." # Not cached

# Reuse base_context across requests for 90% discount

The caching system operates transparently—no special configuration required. Simply structure prompts with common prefixes, and OpenAI handles optimization automatically.

Token Limits and Context Management

GPT-5's token limits represent careful balance between capability and efficiency:

Input Capacity: 272,000 tokens enable processing entire books, complete codebases, or extensive conversation histories. This 2x increase from GPT-4 eliminates most chunking requirements.

Output Limits: 128,000 tokens include both visible responses and invisible reasoning tokens. In practice, expect 30,000-50,000 visible tokens depending on reasoning_effort settings.

Token Economics: Average queries use 500-2,000 input tokens and generate 200-1,000 output tokens. At standard pricing, typical queries cost $0.01-0.02—affordable for most applications.

Context management strategies optimize token usage:

pythondef optimize_context(messages, max_tokens=250000):

"""Intelligently manage context to stay within limits"""

# Prioritize recent messages

# Summarize older context

# Remove redundant information

# Maintain semantic coherence

return optimized_messages

The massive context enables new applications impossible with smaller windows. Legal document analysis processes entire contracts. Medical diagnosis considers complete patient histories. Code review analyzes entire pull requests with full repository context.

API Architecture and Features

GPT-5's API architecture extends OpenAI's platform with powerful new capabilities:

Core Endpoints:

- Chat Completions API: Primary interface for all interactions

- Responses API: Simplified interface for basic completions

- Batch API: Bulk processing with 50% discount

- Fine-tuning API: Custom model training on proprietary data

Built-in Tools:

- Web search: Real-time internet access for current information

- File search: Semantic search across uploaded documents

- Image generation: DALL-E integration for visual content

- Code execution: Sandboxed Python environment for calculations

Advanced Parameters:

pythonresponse = client.chat.completions.create(

model="gpt-5",

messages=messages,

reasoning_effort="medium", # Control thinking depth

verbosity="high", # Detailed responses

tools=tools, # Custom function definitions

parallel_tool_calls=True, # Simultaneous execution

stream=True # Real-time token streaming

)

Parallel tool calling revolutionizes complex workflows. Instead of sequential API calls, GPT-5 executes multiple tools simultaneously, reducing latency by 60% for multi-step operations.

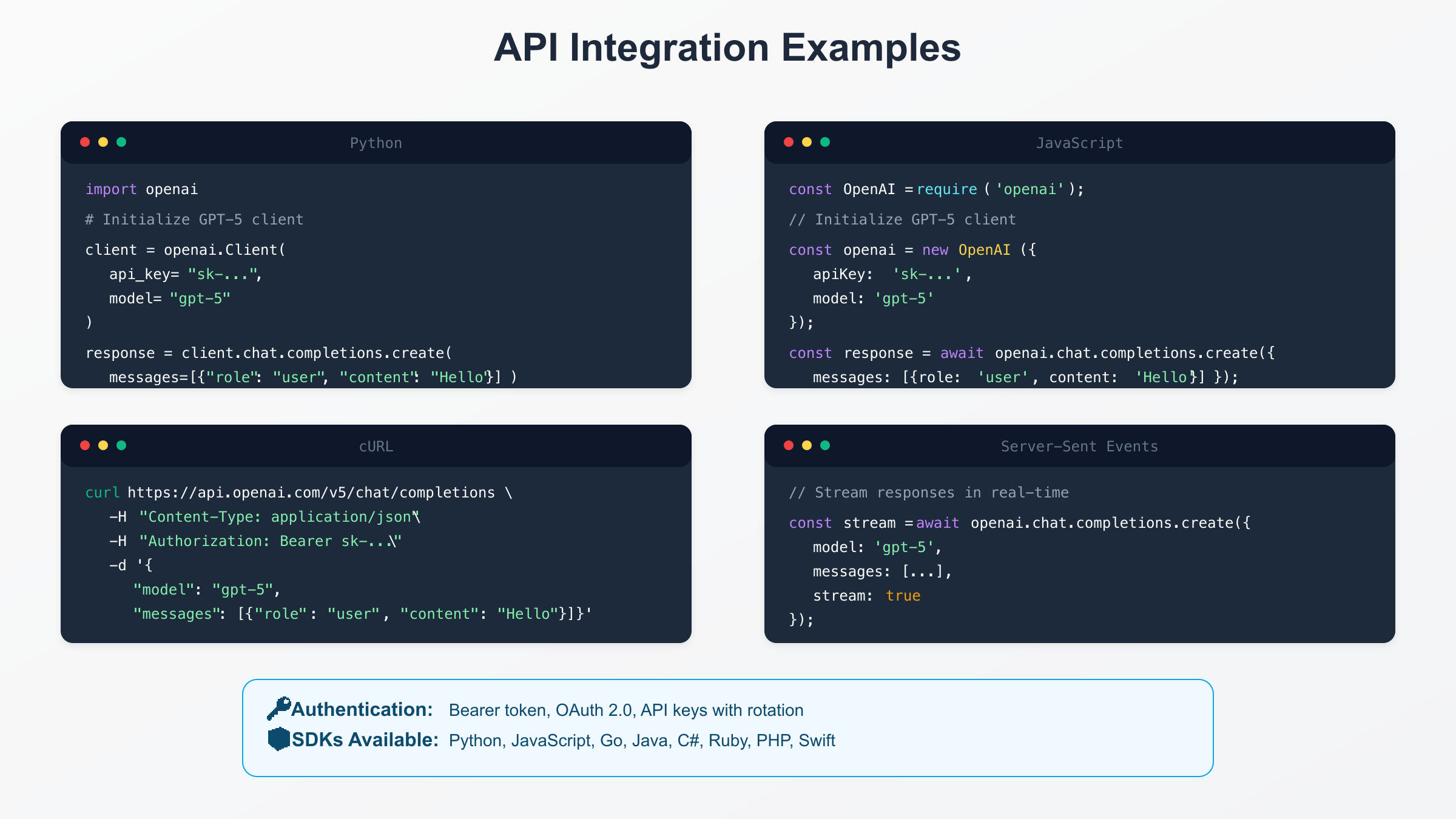

Integration Patterns

Production deployments reveal optimal integration patterns for different scales:

Direct Integration: Simple applications call OpenAI directly:

pythonimport openai

client = openai.Client(api_key="sk-...")

response = client.chat.completions.create(

model="gpt-5",

messages=[{"role": "user", "content": "Hello"}]

)

Gateway Pattern: Enterprise deployments use API gateways for control:

javascript// Route through gateway for monitoring, rate limiting, cost allocation

const response = await fetch('https://api-gateway.company.com/openai', {

method: 'POST',

headers: {

'Authorization': 'Bearer internal-token',

'X-Cost-Center': 'engineering'

},

body: JSON.stringify({

model: 'gpt-5',

messages: messages

})

});

Queue-Based Architecture: High-volume applications use message queues:

python# Producer adds requests to queue

queue.send_message({

'request_id': uuid4(),

'model': 'gpt-5',

'messages': messages,

'callback_url': 'https://app.com/webhook'

})

# Workers process asynchronously

# Results delivered via webhook

This pattern handles traffic spikes, provides reliability, and enables cost optimization through batch processing.

Cost Optimization Strategies

Achieving 30-40% cost savings versus GPT-4 requires strategic optimization:

Model Selection Algorithm:

pythondef select_model(query_complexity, budget_remaining, sla_requirements):

if query_complexity < 0.3 and sla_requirements.latency < 100:

return "gpt-5-nano"

elif query_complexity < 0.6 and budget_remaining > 1000:

return "gpt-5-mini"

else:

return "gpt-5"

Prompt Optimization: Reduce token usage without sacrificing quality:

- Remove redundant instructions GPT-5 handles automatically

- Use references instead of repeating context

- Compress verbose prompts using GPT-5's understanding

- Structure for maximum cache hits

Batch Processing: The Batch API offers 50% discount for non-urgent processing:

pythonbatch_job = client.batches.create(

input_file_id="file-abc123",

endpoint="/v1/chat/completions",

completion_window="24h"

)

# Process thousands of requests at half price

Smart Caching: Structure applications to maximize the 90% cache discount:

- Group similar requests together

- Reuse system prompts across conversations

- Batch process related documents

- Maintain persistent contexts for repeat users

Enterprise Features

Enterprise deployments require additional capabilities beyond basic API access:

Dedicated Endpoints: Private infrastructure isolated from public traffic:

- Guaranteed capacity without rate limits

- Custom SLAs with 99.99% uptime

- Direct peering with cloud providers

- Compliance with data residency requirements

Security and Compliance:

- SOC 2 Type II certified

- HIPAA compliant for healthcare

- End-to-end encryption with customer-managed keys

- Audit logging with immutable retention

- Private endpoints via AWS PrivateLink or Azure Private Endpoints

Advanced Monitoring:

python# Custom metrics and logging

response = client.chat.completions.create(

model="gpt-5",

messages=messages,

user="user-123", # Track by user

metadata={ # Custom tracking

"department": "engineering",

"project": "assistant-v2",

"environment": "production"

}

)

Usage Controls: Granular control over API usage:

- Per-project spending limits

- User-level rate limiting

- Real-time usage alerts

- Automated throttling at thresholds

ChatGPT Subscription Tiers

For non-API usage, ChatGPT offers tiered subscriptions:

Free Tier: Limited GPT-5 access with restrictions:

- 10 messages per hour

- Standard mode only (no thinking mode)

- No tool usage or file uploads

- Basic features only

Plus ($20/month): Enhanced access for individuals:

- 100 messages per 3 hours

- Access to thinking mode

- File uploads and web browsing

- Priority during high demand

Pro ($200/month): Unlimited GPT-5 for power users:

- Unlimited GPT-5 and GPT-5 Pro access

- Maximum reasoning_effort always available

- Priority processing queue

- Early access to new features

Team/Enterprise: Custom pricing for organizations:

- Centralized billing and administration

- Advanced security controls

- Dedicated support

- Custom training on proprietary data

SDK and Library Support

Official SDKs accelerate development across platforms:

Python SDK:

python# Async support for concurrent requests

import asyncio

from openai import AsyncClient

async def process_multiple(prompts):

client = AsyncClient()

tasks = [

client.chat.completions.create(

model="gpt-5",

messages=[{"role": "user", "content": p}]

) for p in prompts

]

return await asyncio.gather(*tasks)

JavaScript/TypeScript:

typescript// Type-safe integration with full IntelliSense

import { OpenAI } from 'openai';

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY,

});

const response = await openai.chat.completions.create({

model: "gpt-5",

messages: messages,

stream: true, // Streaming support

});

for await (const chunk of response) {

process.stdout.write(chunk.choices[0]?.delta?.content || '');

}

Go SDK: Efficient concurrent processing:

goclient := openai.NewClient(apiKey)

response, err := client.CreateChatCompletion(

context.Background(),

openai.ChatCompletionRequest{

Model: "gpt-5",

Messages: messages,

},

)

Community libraries extend official SDKs with framework integrations, middleware components, and testing utilities. LangChain, LlamaIndex, and Semantic Kernel provide high-level abstractions for complex workflows.

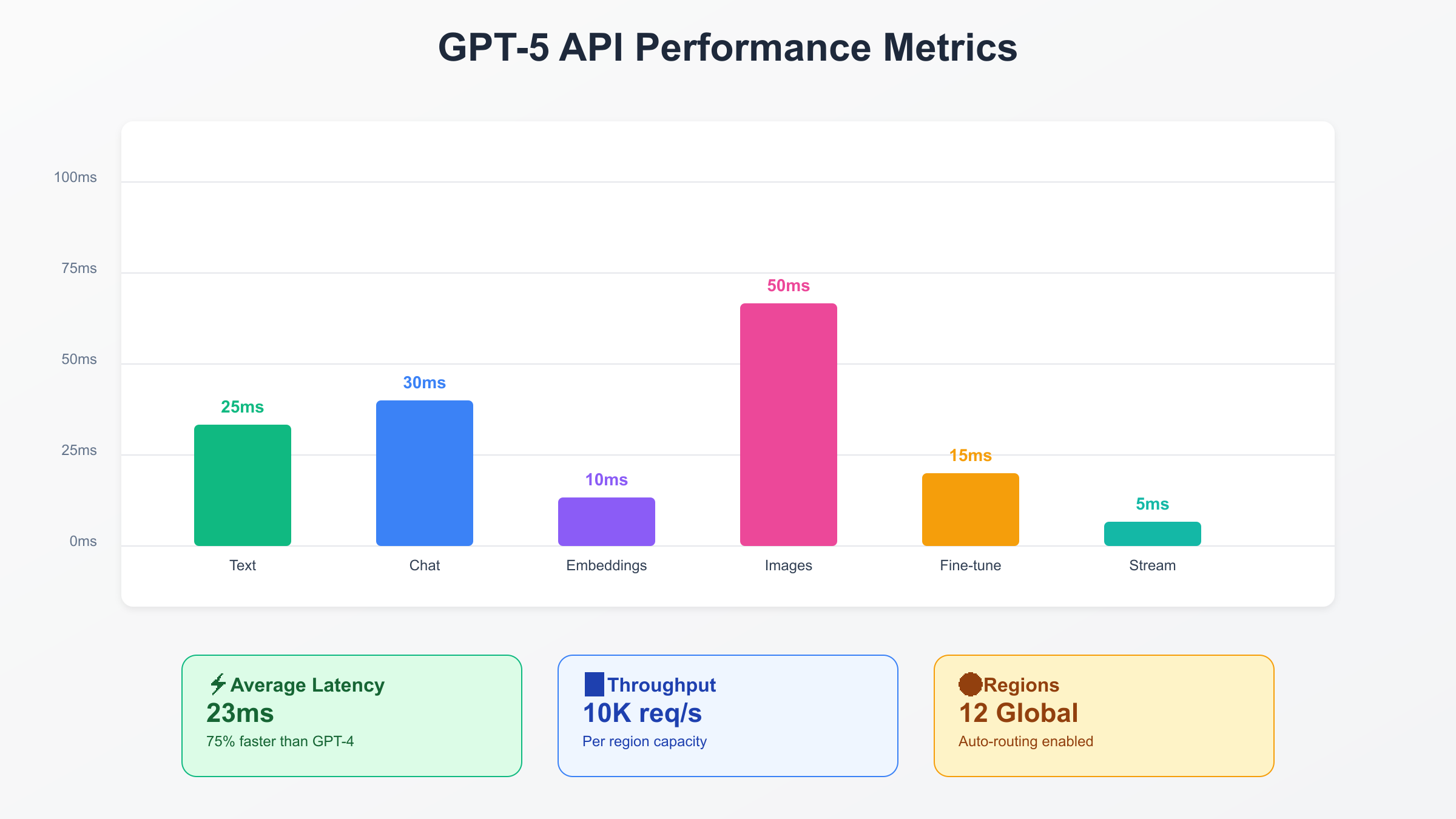

Performance and Reliability

Production metrics demonstrate GPT-5 API's exceptional reliability:

Latency Profiles:

- P50: 450ms (median response time)

- P90: 1,200ms (90% of requests)

- P99: 3,500ms (99% of requests)

- Streaming first token: <100ms

Throughput Capabilities:

- 10,000 requests/second per region

- 100,000 concurrent connections

- Automatic scaling with demand

- No rate limits for enterprise agreements

Reliability Metrics:

- 99.9% uptime SLA (standard)

- 99.99% uptime SLA (enterprise)

- <100ms failover time

- Multi-region redundancy

Global Infrastructure:

- 12 regions worldwide

- Automatic routing to nearest endpoint

- Cross-region replication

- Edge caching for reduced latency

Migration from GPT-4

Migrating from GPT-4 to GPT-5 requires minimal code changes but delivers substantial benefits:

Compatibility Mode: GPT-5 supports GPT-4 parameters for seamless migration:

python# Existing GPT-4 code works unchanged

response = client.chat.completions.create(

model="gpt-5", # Simply change model name

messages=messages,

temperature=0.7,

max_tokens=1000

)

Performance Improvements:

- 40% faster response times

- 30-40% cost reduction

- 50% fewer errors

- 2x context capacity

Migration Strategy:

- Test GPT-5 with subset of traffic

- Compare quality metrics

- Gradually increase GPT-5 percentage

- Monitor cost and performance

- Complete migration when stable

Breaking Changes: Minimal but important:

- Token limits increased (plan for larger responses)

- Reasoning tokens count toward output limit

- Some GPT-4 workarounds no longer needed

- Tool calling syntax slightly different

Advanced Use Cases

GPT-5's API enables applications impossible with previous models:

Real-Time Analysis: Financial trading systems use streaming API for market analysis:

pythonasync for chunk in stream:

signal = extract_trading_signal(chunk)

if signal.confidence > 0.8:

execute_trade(signal)

Document Intelligence: Legal firms process entire contracts in single API calls:

- 272K tokens accommodate 100+ page documents

- Parallel tool calling extracts multiple data points

- 90% cache discount for similar documents

- Batch processing for large volumes

Conversational AI: Customer service achieves human parity:

- Persistent memory maintains context

- Multimodal inputs handle screenshots

- Tool calling integrates with CRM systems

- Streaming provides natural interactions

Code Generation: Development platforms leverage full codebase context:

- Entire repositories in single context

- Real-time code reviews

- Automated refactoring

- Test generation at scale

Monitoring and Observability

Effective monitoring ensures optimal API utilization:

Key Metrics to Track:

python# Custom monitoring wrapper

class MonitoredClient:

def create_completion(self, **kwargs):

start_time = time.time()

response = self.client.create(**kwargs)

metrics.record({

'latency': time.time() - start_time,

'tokens_used': response.usage.total_tokens,

'cost': calculate_cost(response.usage),

'cache_hit': response.usage.cached_tokens > 0,

'model': kwargs['model'],

'reasoning_effort': kwargs.get('reasoning_effort', 'medium')

})

return response

Alerting Thresholds:

- Error rate > 1%

- P99 latency > 5 seconds

- Cost spike > 20% hourly

- Cache hit rate < 30%

Cost Attribution: Track spending by project, user, and feature:

sqlSELECT

project,

SUM(input_tokens * 0.00000125 + output_tokens * 0.00001) as cost,

AVG(cache_hit_rate) as cache_rate

FROM api_usage

GROUP BY project

ORDER BY cost DESC;

Security Best Practices

Secure API integration protects sensitive data and prevents abuse:

Key Management:

python# Never hardcode keys

api_key = os.environ.get('OPENAI_API_KEY')

# Rotate keys regularly

# Use separate keys for development/production

# Monitor key usage for anomalies

Request Validation:

pythondef validate_request(prompt):

# Check prompt length

if len(prompt) > MAX_PROMPT_LENGTH:

raise ValueError("Prompt too long")

# Scan for sensitive data

if contains_pii(prompt):

sanitize_pii(prompt)

# Rate limit by user

if exceeds_rate_limit(user_id):

raise RateLimitError()

return True

Response Filtering: Ensure appropriate content:

pythondef filter_response(response):

# Remove potential PII

# Check content policy compliance

# Validate format expectations

# Log for audit purposes

return filtered_response

Future Roadmap

OpenAI's announced enhancements promise continued innovation:

Upcoming Features:

- 2x context window (544K tokens)

- Native vector database integration

- Custom model fine-tuning UI

- GraphQL API endpoint

- WebSocket support for persistent connections

Pricing Evolution: Expected 20% price reduction by Q4 2025 as efficiency improves

Performance Improvements:

- 50% latency reduction planned

- 10x throughput increase per region

- 99.99% uptime for all tiers

Conclusion

GPT-5's API represents a paradigm shift in AI accessibility and economics. The aggressive $1.25/$10 pricing, combined with 90% cache discounts and superior performance, makes GPT-5 economically viable for applications previously impossible with AI. The 272K context window, parallel tool calling, and built-in capabilities eliminate complexity while enabling new use cases.

Success with GPT-5's API requires strategic thinking about model selection, prompt optimization, and caching strategies. The 30-40% cost savings versus GPT-4 are achievable but require intentional architecture. Enterprise features provide the security, compliance, and reliability necessary for production deployments.

For organizations evaluating GPT-5, the economics are compelling: lower costs, better performance, simpler integration. The comprehensive SDK support, detailed documentation, and vibrant community ensure smooth adoption. Whether building simple chatbots or complex AI systems, GPT-5's API provides the foundation for next-generation applications.

The future belongs to organizations that effectively leverage AI. GPT-5's API makes that future accessible today.