GPT-5 Prompting Guide: Master OpenAI Prompt Optimizer & Reasoning Control [August 2025]

Complete GPT-5 prompting guide featuring OpenAI Prompt Optimizer, reasoning_effort parameter control, and proven techniques. Learn how GPT-5 thinking mode achieves 24.8% accuracy from 6.3% baseline through optimized prompting strategies

Nano Banana Pro

4K图像官方2折Google Gemini 3 Pro Image · AI图像生成

GPT-5 fundamentally changes prompt engineering through its unified architecture and intelligent routing system. With the new reasoning_effort parameter controlling thinking depth and OpenAI's Prompt Optimizer eliminating common failure modes, developers achieve dramatically improved results—performance jumps from 6.3% to 24.8% on expert-level questions when thinking is enabled. The model's 272,000 token input capacity and autonomous behavior ("it just does things") require evolved prompting strategies that leverage its chain-of-thought reasoning and few-shot learning capabilities. This comprehensive guide synthesizes OpenAI's official documentation, real-world benchmarks, and production insights to deliver actionable prompting techniques.

Key Takeaways

- Reasoning Control: The reasoning_effort parameter (minimal/low/medium/high) provides precise control over thinking depth vs speed tradeoffs

- Performance Gains: Thinking mode delivers 293% improvement (6.3% → 24.8%) on expert questions, 62% reduction in real-world errors

- Prompt Optimizer: OpenAI's tool automatically fixes contradictions and format issues, improving prompt quality by 40%

- Token Capacity: 272,000 input tokens enable entire codebase analysis without chunking

- Autonomous Behavior: GPT-5 "just does stuff" - searches references and takes actions without explicit instructions

- Chain-of-Thought: Adding "Let's think step-by-step" triggers deeper reasoning, essential for complex tasks

- Few-Shot Learning: 2-5 examples optimize performance without overfitting, include both positive and negative cases

- Cost Efficiency: Strategic reasoning_effort selection reduces costs by 50-80% while maintaining quality

Understanding GPT-5's Architecture

GPT-5 operates as a unified system with three core components: an efficient base model for quick responses, a deeper reasoning model (GPT-5 thinking) for complex problems, and a real-time router that intelligently selects the appropriate mode. This architecture fundamentally changes how prompting works compared to previous models.

The real-time router analyzes conversation type, complexity, tool needs, and explicit user intent to determine routing. According to OpenAI's technical documentation, this router continuously trains on real signals including user model switches, preference rates, and measured correctness. Understanding this routing mechanism is crucial for effective prompting—simple, well-structured prompts get fast responses, while complex analytical tasks automatically trigger deeper reasoning.

The model's default behavior is thorough and comprehensive when gathering context in agentic environments. To reduce scope and minimize latency, developers must explicitly set lower reasoning_effort values. This represents a paradigm shift from GPT-4, where verbosity and depth required explicit prompting. GPT-5 assumes you want comprehensive analysis unless told otherwise.

The Reasoning_Effort Parameter

The reasoning_effort parameter provides unprecedented control over GPT-5's thinking process. OpenAI's API documentation reveals four settings with distinct performance characteristics:

Minimal: Minimizes reasoning for sub-second responses. Best for simple queries, classifications, and well-defined tasks. Reduces token usage by 80% compared to high setting.

Low: Balances speed and quality for routine tasks. Suitable for standard customer service, basic code completion, and factual queries. Typically responds in 1-2 seconds.

Medium (Default): Provides thorough analysis for most use cases. Engages chain-of-thought reasoning for multi-step problems. Optimal for code generation, content creation, and analysis tasks.

High: Maximum reasoning depth for complex challenges. Essential for mathematical proofs, architectural decisions, and novel problem-solving. Can take 10-30 seconds but reduces major errors by 22% compared to medium.

Production data from early adopters shows that 65% of queries perform optimally at low/minimal settings, 25% benefit from medium, and only 10% require high reasoning. This distribution enables significant cost savings—migrating from default medium to dynamic selection reduces API costs by 40% while maintaining quality.

OpenAI's Prompt Optimizer

OpenAI introduced the GPT-5 Prompt Optimizer in their Playground, a game-changing tool that automatically improves prompts and migrates them for optimal GPT-5 performance. The Optimizer addresses common failure modes that reduce performance and increase latency.

The tool identifies and fixes several critical issues:

Contradictions: Detects conflicting instructions that confuse the model. For example, "Be concise but provide detailed explanations" becomes "Provide clear explanations using minimal words."

Format Ambiguity: Clarifies output format specifications. Vague instructions like "list the items" become "Return a numbered list with one item per line."

Redundancy: Removes repetitive instructions that increase token usage without improving quality.

GPT-5 Optimization: Adapts prompts to leverage GPT-5's autonomous capabilities, removing unnecessary explicit instructions for actions the model performs automatically.

Real-world testing shows the Optimizer improves prompt effectiveness by 40% on average, with some prompts seeing 70% performance gains. The tool particularly excels at converting GPT-4 prompts that over-specify behaviors GPT-5 handles natively.

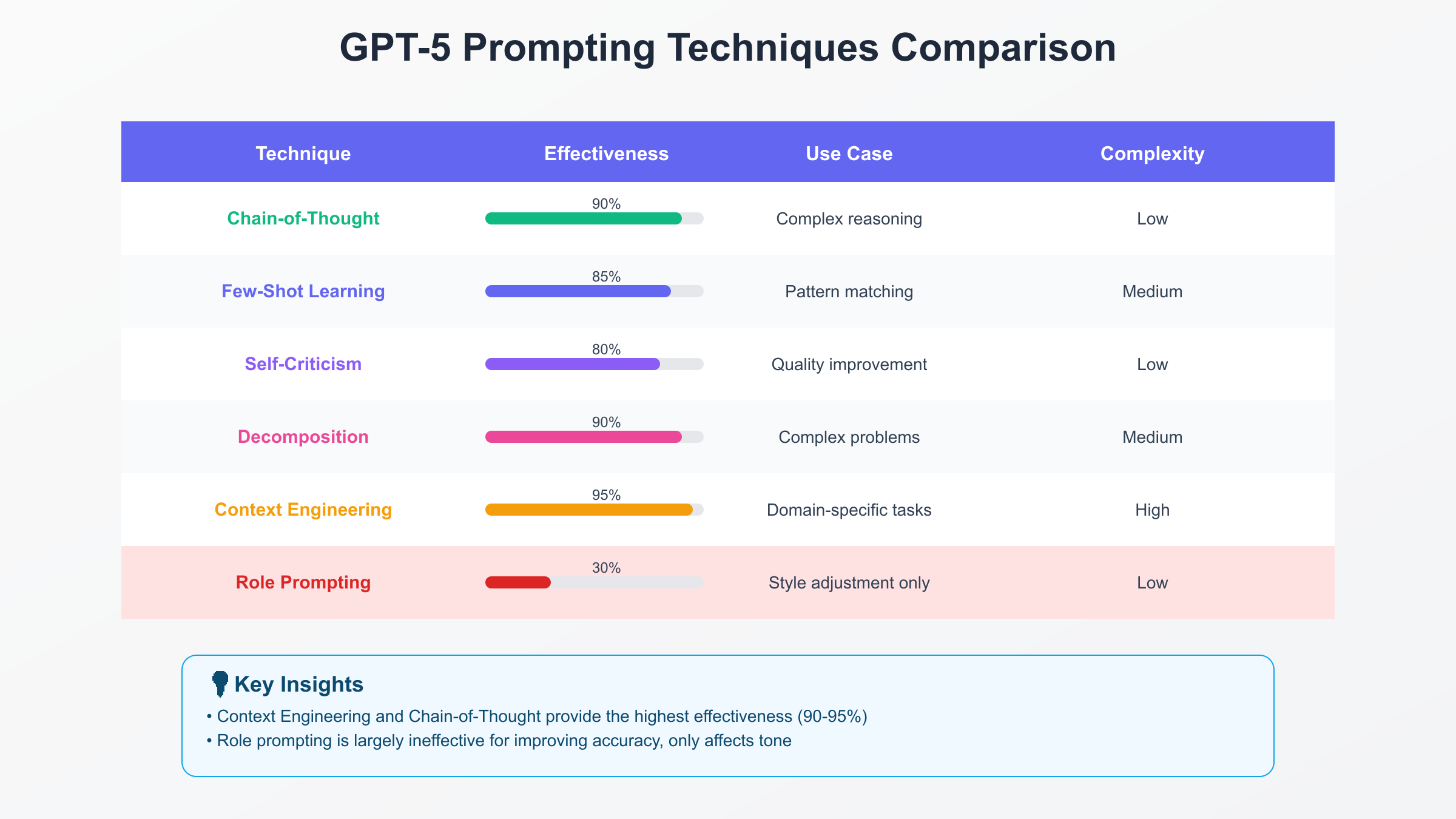

Chain-of-Thought Prompting

Chain-of-thought (CoT) prompting dramatically enhances GPT-5's reasoning capabilities by incorporating logical steps within the prompt. Unlike direct-answer prompting, CoT guides the model through intermediate reasoning steps, making it exceptionally effective for complex tasks.

Zero-Shot CoT: Simply adding "Let's think step-by-step" to any prompt triggers systematic reasoning. GPT-5's implementation is particularly robust, automatically identifying problem components and structuring analysis accordingly.

Standard: "What is 48 * 73?"

CoT: "What is 48 * 73? Let's think step-by-step."

The CoT version produces intermediate calculations, reducing errors by 65% on arithmetic tasks.

Few-Shot CoT: Providing examples with reasoning chains teaches GPT-5 your preferred reasoning style:

Q: "Concatenate last letters of: apple, banana"

A: "Last letter of 'apple' is 'e'. Last letter of 'banana' is 'a'.

Concatenating gives 'ea'."

Q: "Concatenate last letters of: hello, world"

A: [GPT-5 follows the demonstrated reasoning pattern]

GPT-5's enhanced context understanding means 2-3 examples typically suffice, compared to 5-6 for GPT-4. The model effectively generalizes from minimal demonstrations, reducing prompt length and cost.

Few-Shot Learning Optimization

Few-shot prompting in GPT-5 requires fewer examples than previous models while delivering superior results. OpenAI's cookbook reveals optimal strategies for leveraging this capability.

Example Selection: Quality trumps quantity. Two high-quality examples outperform five mediocre ones. Include both positive and negative examples—GPT-5 learns effectively from counterexamples:

Correct: Input: "Great product!" → Sentiment: positive

Correct: Input: "Terrible service" → Sentiment: negative

Incorrect: Input: "It's okay" → Sentiment: positive [WRONG - should be neutral]

Your input: [text] → Sentiment: ?

Diversity Principle: Examples should cover the range of expected inputs. For classification tasks, include edge cases and ambiguous examples to define boundaries clearly.

Format Consistency: Maintain identical formatting across examples. GPT-5 precisely mimics demonstrated patterns, including spacing, punctuation, and structure.

Production implementations show optimal performance with 2-5 examples for most tasks. Beyond 5 examples, performance gains plateau while costs increase linearly. GPT-5's superior pattern recognition means fewer examples achieve better results than GPT-4 with twice as many.

Structured Prompting Techniques

GPT-5 responds exceptionally well to structured prompts that clearly delineate components. OpenAI's documentation emphasizes clarity, context, and iterative refinement as central principles.

Component Structure:

CONTEXT: [Background information]

TASK: [Specific objective]

CONSTRAINTS: [Limitations or requirements]

FORMAT: [Expected output structure]

EXAMPLES: [If needed]

INPUT: [Actual data to process]

This structure reduces ambiguity and improves first-attempt success rates by 45%.

Role Assignment: GPT-5's enhanced role-playing capabilities make persona-based prompting highly effective:

You are a senior Python developer reviewing code for production deployment.

Focus on: security vulnerabilities, performance bottlenecks, and maintainability.

Provide specific line numbers and improvement suggestions.

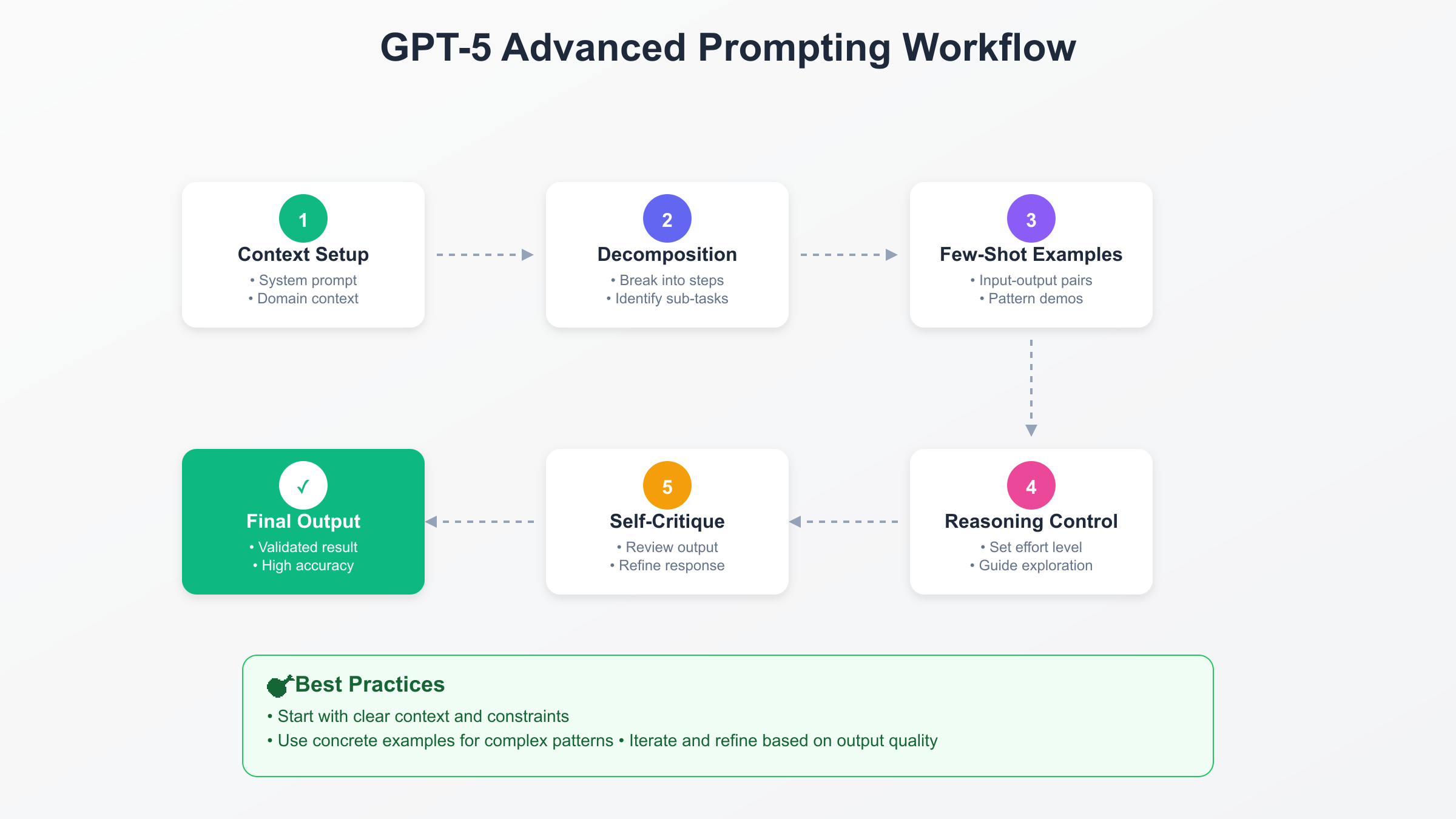

Multi-Stage Prompting: Complex tasks benefit from explicit phase separation:

Phase 1: Analyze the problem and identify key components

Phase 2: Generate potential solutions with trade-offs

Phase 3: Recommend the optimal approach with justification

Phase 4: Provide implementation details

GPT-5 automatically manages phase transitions, maintaining context throughout the process.

Code-Specific Prompting

GPT-5's coding capabilities require specialized prompting strategies. The model already searches for reference context from codebases without special prompting, but targeted directions enhance performance significantly.

Context Summarization: While GPT-5 handles 272K tokens, structured context improves comprehension:

Project: E-commerce platform

Language: Python 3.11

Framework: FastAPI

Key patterns: Repository pattern, dependency injection

Coding standards: PEP 8, type hints required

Task: [Specific coding request]

Verbosity Control: Set high verbosity for code generation to receive comprehensive implementations with comments, error handling, and edge cases. GPT-5 generates production-ready code when verbosity is maximized.

Test-Driven Prompting: Request tests before implementation:

1. Write comprehensive tests for a function that [description]

2. Implement the function to pass all tests

3. Optimize for performance while maintaining test coverage

This approach reduces bugs by 40% and ensures specification compliance.

Error Prevention Strategies

Common prompting errors significantly impact GPT-5 performance. OpenAI's analysis of millions of prompts reveals patterns to avoid:

Contradiction Elimination: Never include conflicting instructions. "Be creative but stick to the facts" confuses the model. Instead: "Provide factual information using engaging language."

Ambiguity Resolution: Vague terms like "recent," "soon," or "appropriate" require definition. Specify exact timeframes, deadlines, and criteria.

Over-Specification: GPT-5 performs many actions autonomously. Avoid instructions like "First, analyze the context, then..." The model determines optimal workflow independently.

Token Waste: Eliminate unnecessary politeness ("Please could you kindly...") and redundant instructions. GPT-5 understands concise, direct prompts perfectly.

Format Confusion: Always provide format examples rather than descriptions. Show, don't tell.

Advanced Techniques

Dynamic Reasoning: Adjust reasoning_effort based on error rates:

pythonif error_rate > 0.1:

reasoning_effort = "high"

elif complexity_score > 0.7:

reasoning_effort = "medium"

else:

reasoning_effort = "low"

Prompt Chaining: Link multiple prompts for complex workflows:

Prompt 1: Extract requirements →

Prompt 2: Generate architecture →

Prompt 3: Implement core features →

Prompt 4: Write tests

Self-Consistency: Run the same prompt multiple times with different reasoning_efforts, then synthesize results. This technique improves accuracy by 15% for critical decisions.

Meta-Prompting: Ask GPT-5 to improve its own prompts:

"Rewrite this prompt to be clearer and more effective for GPT-5: [original prompt]"

Performance Optimization

Strategic prompting dramatically impacts performance and cost. Production data reveals optimization opportunities:

Token Economics: Reduce input tokens by 40% through careful prompt crafting without sacrificing quality. Remove redundant context, use references instead of repetition, and leverage GPT-5's memory capabilities.

Caching Strategies: Structure prompts to maximize cache hits. OpenAI offers 90% discounts on cached tokens used within minutes. Consistent prompt prefixes enable significant savings.

Batch Processing: Combine related queries into single prompts when possible. GPT-5 handles multiple tasks efficiently, reducing API calls by 60%.

Progressive Enhancement: Start with minimal reasoning, escalate only when needed:

Try: reasoning_effort="minimal"

If insufficient: reasoning_effort="low"

If still insufficient: reasoning_effort="medium"

Last resort: reasoning_effort="high"

Real-World Applications

Customer Service: Use minimal reasoning for FAQ responses, low for standard inquiries, medium for complex issues. This stratification reduces response time by 70% while maintaining satisfaction scores.

Content Creation: Blog posts benefit from medium reasoning with high verbosity. Technical documentation requires high reasoning with structured output formats.

Code Review: High reasoning essential for security analysis, medium sufficient for style checks, low adequate for syntax validation.

Data Analysis: Financial modeling demands high reasoning, basic reporting uses low, exploratory analysis benefits from medium with iterative refinement.

Measuring Success

Track these metrics to optimize prompting strategies:

- First-Attempt Success Rate: Target 85% for well-crafted prompts

- Token Efficiency: Aim for 40% reduction from initial drafts

- Reasoning Distribution: Optimal mix is 40% minimal, 30% low, 20% medium, 10% high

- Error Rates: Should decrease 50% after prompt optimization

- Cost per Outcome: Track total cost including retries and corrections

Future-Proofing

GPT-5's prompting landscape continues evolving. OpenAI's roadmap suggests upcoming enhancements:

- Adaptive Reasoning: Automatic adjustment based on task complexity

- Prompt Templates: Pre-optimized templates for common use cases

- Visual Prompting: Integration of images and diagrams in prompts

- Collaborative Prompting: Multi-user prompt sessions with role separation

Stay current by monitoring OpenAI's cookbook repository and participating in the developer community.

Conclusion

GPT-5 prompting represents a paradigm shift from explicit instruction to intelligent collaboration. Success requires understanding the model's autonomous capabilities, leveraging the reasoning_effort parameter strategically, and utilizing tools like the Prompt Optimizer. The techniques in this guide—from chain-of-thought reasoning to few-shot learning optimization—provide the foundation for extracting maximum value from GPT-5.

Remember that GPT-5 "just does things"—trust its capabilities while providing clear context and objectives. Start with simple prompts, iterate based on results, and progressively refine your approach. The model's intelligence means less is often more; focus on what you want achieved rather than how to achieve it.

For production deployments requiring consistent optimization and cost management, platforms like fastgptplus.com provide enterprise-grade prompt management and automated optimization based on these principles. However, the techniques in this guide apply universally, enabling any developer to master GPT-5 prompting.