Sora 2: How to Use Real People in AI-Generated Videos (Complete Guide 2025)

Master Sora 2's Cameos feature to integrate real people into AI videos. Covers consent verification, international access, quality comparison, and a step-by-step setup guide.

OpenAI's Sora 2 launched on September 30, 2025, introducing a revolutionary capability that distinguishes it from competitors: the Cameos feature. This breakthrough allows users to integrate real people into AI-generated videos by uploading a brief verification recording. Instead of relying solely on synthetic characters, creators can now place themselves, colleagues, or consenting participants into any AI-generated environment with unprecedented realism.

The Cameos feature addresses a critical limitation in AI video generation—the uncanny valley effect and lack of personal authenticity. When OpenAI CEO Sam Altman demonstrated Sora 2 by generating a video of himself climbing El Capitan, the result showcased both the technical capability and the ethical framework built into the system. Unlike traditional deepfake tools, Sora 2 requires explicit consent verification, liveness checks, and provides granular permission controls.

However, accessing this feature isn't straightforward for everyone. The iOS app launched exclusively in the United States and Canada, creating access barriers for international users. The consent verification process, while essential for ethical AI use, introduces complexity that many users struggle to navigate. Quality considerations—when real people enhance realism versus when AI-generated characters suffice—remain unclear to most creators.

This comprehensive guide examines how to use real people in Sora 2, addressing technical setup, consent requirements, international access strategies, and quality optimization. We'll explore the complete workflow from verification to video generation, compare real people integration against pure AI generation, and provide troubleshooting solutions for common errors. For professionals concerned about legal liability, we'll detail the ethical framework and jurisdictional considerations essential for compliant use.

What is Sora 2's Cameos Feature?

The Cameos feature represents OpenAI's solution to integrating real human subjects into AI-generated video content while maintaining ethical guardrails. At its core, Cameos allows the model to observe a brief video recording of a person—capturing appearance, voice, movement patterns, and distinctive characteristics—then recreate that individual within any AI-generated environment specified through text prompts.

This capability extends beyond simple face-swapping or deepfake technology. The system captures three-dimensional understanding of facial structure, body language nuances, voice tonality, and natural movement patterns. When you generate a video featuring your Cameo, Sora 2 doesn't merely paste your face onto a generic body; it reconstructs your authentic presence within the generated scene, maintaining consistency in lighting, perspective, physics, and environmental interaction.

The feature launched as part of Sora 2's broader advancement in text-to-video AI generation, which OpenAI positioned as a "GPT-3.5 moment for video"—signaling a fundamental leap in capability and accessibility. According to the official announcement, Cameos work for any human, animal, or object, though the primary use case focuses on human subjects given the consent and verification requirements.

Key technical capabilities include synchronized audio generation (your Cameo speaks with your actual voice characteristics), physics-accurate interaction with generated environments (realistic shadow casting, proper occlusion), and temporal consistency (your Cameo maintains appearance coherence across multi-second clips). The system handles complex scenarios like multiple camera angles, different lighting conditions, and varied action sequences while preserving the recognizable identity of the Cameo subject.

However, Cameos introduce specific constraints compared to pure AI-generated characters. The initial verification recording must meet technical quality standards regarding resolution, lighting, and audio clarity. The subject must perform specific actions during verification to enable liveness detection, preventing unauthorized use of static photos or pre-recorded footage. Processing time increases when incorporating Cameos versus generating videos with purely synthetic characters, as the system performs additional identity verification and consistency checks.

The broader AI video generation landscape includes multiple approaches to incorporating human subjects, ranging from full synthesis (no real people) to template-based animation (static image animated) to Cameos-style integration (full motion and voice capture). Sora 2's approach prioritizes authenticity and consent over convenience, differentiating it from competitors that allow image uploads without verification.

Understanding what Cameos can and cannot do shapes realistic expectations for the feature. It excels at placing recognizable individuals into scenarios impossible or impractical to film—historical settings, fantastical environments, dangerous situations, or simply locations unavailable to the subject. It struggles with highly specific facial expressions not captured in the verification recording, extreme close-ups that exceed the resolution of the source material, and scenarios requiring precise lip-sync to pre-written dialogue (the system generates both visuals and synchronized audio, rather than animating to provided audio).

How Cameos Works: Technical Overview

The Cameos workflow operates through a three-stage process: verification recording, identity encoding, and generation-time integration. Each stage employs distinct technical mechanisms to balance realism, consent enforcement, and creative flexibility.

During the verification stage, users record a 5-15 second clip through the Sora iOS app performing specific prompted actions. The app instructs users to speak a brief phrase, turn their head to show different angles, and display natural movement. This multi-modal capture allows the system to build a comprehensive identity representation including facial geometry from multiple perspectives, voice characteristics across different phonemes, natural movement patterns, and distinguishing features like hairstyle, body proportions, and typical expressions.

The app performs real-time liveness detection during recording, verifying that the subject is physically present rather than presenting a photo, pre-recorded video, or manipulated footage. Liveness checks analyze micro-movements in facial features, detect depth through parallax as the subject moves, verify that audio synchronizes naturally with lip movements, and confirm that lighting and shadow behavior matches a three-dimensional subject. These checks prevent unauthorized creation of Cameos from stolen images or videos.

Once verification succeeds, OpenAI's systems encode the identity into a compact representation compatible with Sora 2's video generation model. This encoding process extracts invariant features—characteristics that remain consistent across different contexts—while allowing variation in controllable aspects like pose, expression, action, and environmental interaction. The technical implementation resembles how face recognition systems create embeddings, but extended to capture full-body appearance, voice, and motion dynamics.
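To make the embedding analogy concrete, here is a minimal Python sketch of how fixed-length identity vectors are typically compared in face recognition systems, using cosine similarity. The vectors, their four dimensions, and the 0.8 threshold are illustrative assumptions; OpenAI has not published the encoding format Sora 2 uses.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)

def same_identity(a, b, threshold=0.8):
    """Treat two embeddings as the same person above a (hypothetical) threshold."""
    return cosine_similarity(a, b) >= threshold

# Hypothetical embeddings: the same person from two angles, and someone else.
front_pose = [0.9, 0.1, 0.3, 0.2]
side_pose = [0.85, 0.15, 0.35, 0.15]
other_person = [0.1, 0.9, 0.2, 0.7]

print(same_identity(front_pose, side_pose))     # True
print(same_identity(front_pose, other_person))  # False
```

The point of the sketch is the "invariant features" idea: two captures of the same person should land close together in the embedding space even when pose changes, while a different person lands far away.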

During video generation, users write text prompts specifying the desired scene, and the system integrates the Cameo identity into the generated output. The model receives both the text prompt (describing environment, action, style) and the identity encoding (representing the specific person). It then generates video frames where the person appears naturally integrated into the scene, maintaining their recognizable appearance while adapting pose, expression, and interaction to match the prompt.

| Aspect | Cameos (Real People) | Pure AI Generation |
|---|---|---|
| Realism | Photorealistic identity preservation | Synthetic characters, variable realism |
| Setup Required | 5-15 second verification recording | None |
| Consent Needed | Mandatory verification with liveness check | Not applicable |
| Generation Speed | Slower (additional identity processing) | Faster (no verification overhead) |
| Quality Control | Depends on source video quality | Consistent but may lack authenticity |
| Flexibility | Limited to verified individuals | Unlimited character variations |
| Best Use Cases | Personal content, testimonials, education, scenarios requiring specific people | Entertainment, abstract concepts, fictional characters, bulk generation |

The table illustrates fundamental trade-offs between approaches. Cameos require upfront effort and introduce processing overhead, but deliver authentic representation of specific individuals—critical for personal content, professional testimonials, educational materials featuring instructors, and any scenario where audience recognition of the subject matters. Pure AI generation offers unlimited creative freedom and faster iteration but lacks the authenticity and personal connection that real people provide.

For creators deciding between approaches, consider whether audience recognition of the subject adds value. If viewers need to recognize you as the instructor, team member, or spokesperson, Cameos justify the additional setup. If the character serves a purely functional or artistic role where any appearance suffices, pure AI generation streamlines the workflow. The complete Sora 2 guide covers pure AI generation workflows for scenarios where Cameos aren't necessary.

Understanding the technical mechanism also clarifies limitations. Because the system learns identity from a brief verification clip, it performs best when generating scenarios that don't require highly specific expressions or movements not represented in the source recording. Extreme emotional expressions, precise choreography, or highly technical movements may appear less natural than general scenarios like walking, talking, gesturing, or interacting with objects.

The consent architecture built into this technical workflow distinguishes Sora 2 from unregulated deepfake tools. Every Cameo creation requires the explicit, verified consent of the subject through liveness-checked recording. This approach prevents unauthorized use while enabling legitimate creative and professional applications.

Platform Availability and Access

Sora 2 launched with geographic and platform restrictions that significantly impact who can access the Cameos feature. Understanding these limitations and available workarounds determines whether you can immediately begin creating content or need alternative strategies.

The iOS app became available exclusively in the United States and Canada starting September 30, 2025. This restriction applies at the App Store level—users must have an Apple ID registered to the US or Canadian App Store to download the application. Android users, regardless of location, cannot access Sora 2 at launch, as OpenAI prioritized iOS for the initial release. Web-based access exists through the OpenAI website for general Sora 2 features, but Cameos functionality requires the mobile app for verification recording due to liveness check requirements.

Access operates through an invitation system during the rollout phase. Each user who gains access receives four invitation codes to share with others. This graduated approach allows OpenAI to manage server load and gather feedback before full public release. Users without invitations join a waitlist, with access granted in rolling waves based on demand and system capacity.

Three pricing tiers determine feature availability and usage limits:

| Tier | Monthly Cost | Cameos Access | Video Generation Credits | Max Video Length | Max Resolution | Priority Queue | Best For |
|---|---|---|---|---|---|---|---|
| Free | $0 | Limited (watermarked) | 50 credits/month | 5 seconds | 720p | Standard | Testing, personal experimentation |
| Plus | $20 | Full access | 500 credits/month | 20 seconds | 1080p | Priority | Content creators, small businesses |
| Pro | $200 | Full access | 5,000 credits/month | 60 seconds | 4K | Highest priority | Professional studios, enterprises |

The free tier allows Cameos creation but applies visible watermarks to generated content, limiting professional use. Credit consumption varies with video length and resolution: a typical 10-second 1080p video with Cameos consumes approximately 20-30 credits because of the additional processing required for identity integration. At that rate, the Plus tier's 500 credits cover roughly 16-25 Cameo-enhanced videos per month before accounting for regenerations, while the Pro tier's 5,000 credits support sustained professional production at 150+ videos per month.
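The credit arithmetic can be checked with a short Python sketch. It uses only the figures quoted in this guide (500 and 5,000 monthly credits, 20-30 credits per 10-second 1080p Cameo video); the `attempts_per_video` parameter is a hypothetical knob for regenerations, not something the app exposes.

```python
def video_range(monthly_credits, low_cost=20, high_cost=30, attempts_per_video=1):
    """Return (fewest, most) finished videos a monthly credit allowance covers.

    low_cost / high_cost: credits per generation (this guide's 20-30 estimate).
    attempts_per_video: assumed average number of generations per kept video.
    """
    if attempts_per_video < 1:
        raise ValueError("attempts_per_video must be at least 1")
    fewest = monthly_credits // (high_cost * attempts_per_video)
    most = monthly_credits // (low_cost * attempts_per_video)
    return fewest, most

for tier, credits in {"Plus": 500, "Pro": 5000}.items():
    print(tier, video_range(credits), video_range(credits, attempts_per_video=2))
# Plus (16, 25) (8, 12)
# Pro (166, 250) (83, 125)
```

If you usually need two attempts to get a usable clip, the effective monthly output halves, which is worth factoring in when choosing between tiers.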

Platform restrictions create accessibility challenges for users outside North America or without iOS devices. International users face three primary barriers: geographic App Store restrictions, potential network latency or connectivity issues when accessing OpenAI's servers, and absence of localized payment methods in many regions. The invitation system adds a fourth barrier—requiring an existing user connection to bypass the waitlist.

For creators evaluating whether Sora 2 fits their workflow, consider total cost of ownership beyond subscription fees. Professional use at Plus tier ($20/month) supports moderate content creation but may require upgrading to Pro tier for sustained production. Credit-based consumption means variable costs depending on output quality and volume—higher resolution and longer videos consume credits faster. Users who frequently regenerate content to achieve desired results will deplete credits more rapidly than those satisfied with first-generation outputs.

The geographic restriction particularly impacts international markets where AI video demand is high. Chinese creators, European studios, and Latin American content producers all face access barriers despite strong use cases for the technology. OpenAI has not announced a specific timeline for global expansion, though historical patterns with ChatGPT and DALL-E suggest gradual international rollout over 6-12 months following initial launch.

Understanding these access parameters shapes realistic adoption timelines. US and Canadian iOS users can begin immediately upon receiving an invitation or waitlist approval. International users need workaround strategies, which we'll detail in Chapter 9, or must wait for geographic expansion. Budget-conscious creators should calculate credit consumption based on anticipated production volume to determine whether Plus or Pro tier makes economic sense for their use case.

Step-by-Step: Setting Up Your Cameo

Creating your first Cameo requires completing a one-time verification process through the Sora iOS app. This workflow balances security requirements with user convenience, typically requiring 5-10 minutes from start to completion.

Prerequisites and Preparation

Before beginning, ensure you meet technical and environmental requirements. You'll need an iOS device (iPhone or iPad) running iOS 15 or later with the Sora app installed from the US or Canadian App Store. Verify adequate storage space—the app requires approximately 500MB, and verification videos consume an additional 50-100MB during the recording process before upload.

Choose a recording environment with consistent, even lighting. Avoid backlighting (windows behind you), harsh shadows, or dim conditions. The verification process analyzes facial features for liveness detection, which performs poorly in suboptimal lighting. Natural daylight or soft artificial lighting works best. Ensure a quiet environment with minimal background noise, as the system captures voice characteristics that require clear audio quality.

Position yourself at arm's length from the camera with your full head and shoulders visible in the frame. The app will guide you to turn your head side-to-side during recording, so ensure sufficient space around your face within the frame. Remove accessories that obscure facial features—hats, sunglasses, or masks will cause verification failure as the system cannot adequately capture your appearance.

Verification Recording Process

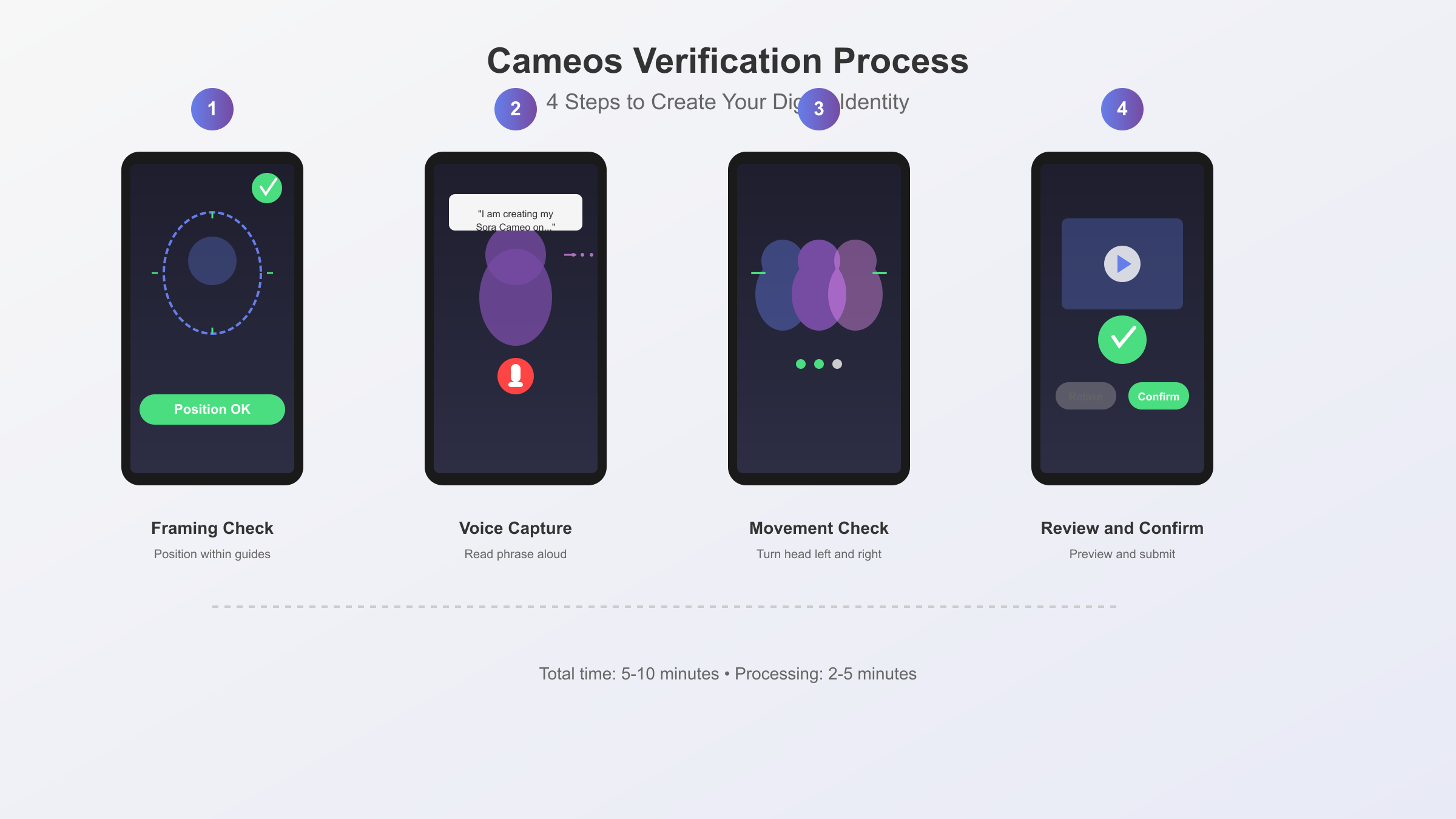

Launch the Sora app and navigate to Settings > Cameos > Create New Cameo. The app presents an overview explaining that you'll record a brief video for identity verification, highlighting consent and privacy controls. Review the consent agreement, which specifies that your Cameo can only be used by people you explicitly grant access to, and that you can revoke permissions or delete your Cameo at any time.

Tap "Begin Verification" to start the recording interface. The app displays real-time guidance:

- Framing Check: Position yourself within the indicated frame boundaries. The app verifies adequate lighting and background contrast before allowing you to proceed.

- Voice Capture: The app displays a phrase to read aloud, typically a sentence like "I am creating my Sora Cameo on [current date]." Speak naturally at normal volume. This captures voice characteristics and verifies audio-visual synchronization.

- Movement Verification: Follow on-screen prompts to slowly turn your head left, then right, then back to center. This multi-angle capture enables the system to build a three-dimensional representation of your facial structure. The app provides real-time feedback if you move too quickly or if features become obscured.

- Completion Review: After recording, the app displays a preview. Review the footage to ensure clear visibility and audio quality. You can retake the verification if unsatisfied with quality.

The entire recording process takes 8-15 seconds of actual footage. The app then uploads this verification video to OpenAI's servers for processing, which typically completes within 2-5 minutes. You'll receive a notification when your Cameo is ready for use.

Identity Verification and Approval

After upload, OpenAI's systems perform automated analysis to verify the recording meets technical standards and passes liveness checks. The system examines whether facial features display micro-movements consistent with a live person, whether depth cues indicate three-dimensional presence, whether audio-visual synchronization matches natural speech patterns, and whether lighting and shadow behavior appears physically plausible.

If verification succeeds, your Cameo becomes available immediately for video generation. If verification fails, the app provides specific feedback about the issue—common failure reasons include insufficient lighting (too dark or harsh shadows), obscured features (face not fully visible, accessories blocking view), poor audio quality (background noise, low volume), or movement issues (turned too quickly, moved out of frame). You can immediately retry verification after addressing the flagged issue.

Permission Management

Once verified, your Cameo exists in your account with default privacy settings—only you can initially use it in generated videos. To allow others to feature you in their Sora creations, navigate to Settings > Cameos > [Your Cameo] > Share Access. The app provides three permission models:

- Private: Only you can use your Cameo (default setting)

- Selective: Specific people you invite can use your Cameo

- Revocable Invitation: Generate a one-time invitation code for someone to request access, which you can approve or deny

For each person granted access, you can set granular controls including whether they can use your Cameo in public or private videos, whether they can share generated videos featuring you externally, and time-limited access that automatically expires after a specified period. These controls address professional scenarios where temporary access makes sense—a client project with a defined timeline, for example.
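As a way to reason about these controls, the following Python sketch models a single access grant with the three options described above (public use, external sharing, expiry). The `CameoGrant` class and `may_generate` function are hypothetical illustrations for planning purposes, not part of any OpenAI API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

@dataclass
class CameoGrant:
    """One person's permission to use a Cameo (hypothetical model)."""
    grantee: str
    allow_public_videos: bool = False
    allow_external_sharing: bool = False
    expires_at: Optional[datetime] = None  # None means "until revoked"
    revoked: bool = False

    def is_active(self, now: datetime) -> bool:
        if self.revoked:
            return False
        return self.expires_at is None or now < self.expires_at

def may_generate(grant: CameoGrant, now: datetime, public: bool) -> bool:
    """A generation is allowed only while the grant is active and in scope."""
    if not grant.is_active(now):
        return False
    return grant.allow_public_videos or not public

# Example: a client gets 30 days of private-only access.
start = datetime(2025, 10, 1, tzinfo=timezone.utc)
client = CameoGrant("client@example.com", expires_at=start + timedelta(days=30))
print(may_generate(client, start, public=False))                       # True
print(may_generate(client, start, public=True))                        # False
print(may_generate(client, start + timedelta(days=31), public=False))  # False
```

Modeling access this way makes it easier to write project agreements: the expiry date in the grant can simply mirror the delivery deadline in the contract.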

The app's "Videos Featuring Me" section displays all content created using your Cameo, regardless of who generated it. This transparency allows you to monitor usage and identify any unauthorized or concerning content. You can request removal of specific videos, revoke a user's access immediately, or delete your Cameo entirely (which invalidates all existing videos featuring it).

This verification and permission system creates friction by design—preventing unauthorized use requires making legitimate use slightly more complex. For users concerned primarily with generating personal content, the process is straightforward. For teams collaborating on professional content, plan ahead to complete verification and permission-sharing before production timelines begin.

Consent and Privacy: Deep-Dive

The consent architecture in Sora 2 represents OpenAI's attempt to balance creative capability with ethical safeguards. Understanding how consent verification works, what data OpenAI collects, and your rights regarding that data proves essential for legal compliance and informed decision-making.

Consent Verification Mechanism

Sora 2 implements a three-layer consent model that operates continuously rather than as a one-time check. The first layer occurs during Cameo creation through the liveness-checked verification recording. By recording yourself while explicitly stating consent, you create cryptographic proof of your knowing participation. The system captures biometric markers—facial geometry, voice characteristics, and behavioral patterns—that tie your Cameo to your physical presence at a specific timestamp.

The second layer activates when someone attempts to use your Cameo. If you've granted access to specific users, the system verifies that the generator matches your approved list before allowing Cameo integration. Each generation request logs the user identity, timestamp, and prompt text, creating an audit trail of all usage. This log becomes visible to you in the "Videos Featuring Me" dashboard, providing transparency about how your likeness appears in generated content.
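The second layer can be pictured as an allow-list check followed by a log write. The Python sketch below is a simplified illustration under stated assumptions: the class and field names are invented, and recording denied attempts alongside allowed ones is a design choice of this example rather than documented Sora behavior.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class UsageRecord:
    user_id: str
    timestamp: datetime
    prompt: str
    allowed: bool

@dataclass
class CameoAccessLog:
    """Allow-list check plus audit trail for one Cameo (hypothetical model)."""
    owner_id: str
    approved_users: set = field(default_factory=set)
    records: list = field(default_factory=list)

    def request_generation(self, user_id, prompt, now=None):
        now = now or datetime.now(timezone.utc)
        allowed = user_id == self.owner_id or user_id in self.approved_users
        # Every request is logged with identity, time, and prompt text.
        self.records.append(UsageRecord(user_id, now, prompt, allowed))
        return allowed

    def videos_featuring_me(self):
        """Entries the Cameo owner would see: generations that went ahead."""
        return [r for r in self.records if r.allowed]

log = CameoAccessLog(owner_id="alice", approved_users={"bob"})
print(log.request_generation("bob", "Alice presenting on a stage"))  # True
print(log.request_generation("mallory", "Alice in a commercial"))    # False
print([r.user_id for r in log.videos_featuring_me()])                # ['bob']
```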

The third layer occurs at distribution time. OpenAI embeds invisible watermarks in all Cameo-featuring videos that cryptographically encode metadata including your Cameo ID, generation timestamp, and consent status. These watermarks persist through re-encoding, screenshots, and most editing operations, allowing detection of your likeness in distributed content even if someone extracts and reposts the video outside Sora's platform.

Compared to other platforms offering human likeness integration, Sora 2's consent framework proves more restrictive but also more protective:

| Platform | Consent Method | Liveness Verification | Ongoing Audit Trail | Watermarking | Data Deletion | GDPR Compliant |
|---|---|---|---|---|---|---|
| Sora 2 Cameos | In-app recording with phrase | Yes (required) | Yes (all uses visible to subject) | Cryptographic (invisible) | Full deletion available | Yes |
| HeyGen | Photo/video upload | No (static images accepted) | No (usage not visible to subject) | Visible logo (removable on paid plans) | Unclear retention policy | Partial |
| Synthesia | Studio recording or photo | Optional (for premium) | Limited (enterprise only) | Visible logo | Limited (avatar remains) | Yes |
| Runway Gen-4 | No direct likeness integration | N/A | N/A | Content credentials | N/A | Yes |

The table reveals that Sora 2 enforces stricter consent requirements than competitors focusing on convenience over protection. HeyGen allows static photo uploads without liveness checks, creating deepfake risk. Synthesia offers liveness verification only for premium enterprise clients. Runway Gen-4 avoids the entire issue by not supporting direct human likeness integration, relying instead on fully synthetic generation or style transfer techniques.

Data Collection and Storage

When you create a Cameo, OpenAI collects and stores your verification video, the extracted identity encoding, metadata including device information and IP address, and consent logs with timestamps. According to OpenAI's privacy documentation, verification videos undergo processing to extract identity features, then the system deletes the raw video within 30 days. The identity encoding—a mathematical representation of your appearance and voice—persists as long as your Cameo remains active.

OpenAI states that identity encodings remain isolated from other training data and do not contribute to model improvement. This separation addresses concerns about your likeness inadvertently appearing in other users' generations. The encoding format contains insufficient information to reconstruct your full verification video, functioning more like a secure hash than a compressed video file.

Metadata logs—recording who accessed your Cameo and when—persist indefinitely to maintain the audit trail. This retention enables you to monitor usage months or years after initial consent. If you delete your Cameo, OpenAI commits to deleting the identity encoding and ceasing new generations, though previously generated videos remain in existence unless you separately request their removal.

Users in GDPR-covered jurisdictions (European Union, United Kingdom) have specific rights, including the right to access all data OpenAI holds about their Cameo, the right to request deletion of the identity encoding and associated metadata, the right to export usage logs in machine-readable format, and the right to withdraw consent without penalty. Exercising these rights requires submitting a formal request through OpenAI's privacy contact system, which typically processes requests within 30 days.

Revocation and Removal

Sora 2 provides three levels of consent revocation depending on how thoroughly you want to restrict your likeness. Selective revocation removes access for specific users while leaving your Cameo active for others you've approved. Navigate to Settings > Cameos > Permissions, select the user whose access you want to revoke, and tap "Remove Access." This immediately prevents them from generating new content featuring you, though videos they've already created remain in their library unless you separately request removal.

Complete deactivation disables your Cameo entirely without deleting the underlying data. Your Cameo becomes unavailable for any new generation, including your own, but the identity encoding remains in OpenAI's systems. This option suits temporary privacy concerns or situations where you want to preserve the option to reactivate later without re-verifying.

Full deletion permanently removes your Cameo and requests deletion of the identity encoding. Access Settings > Cameos > [Your Cameo] > Delete Permanently. OpenAI displays a warning that this action cannot be undone and that existing videos featuring your Cameo will display a "Cameo Deleted" indicator but won't automatically disappear. Deletion processes asynchronously, typically completing within 24-72 hours.

For removing specific videos that feature your Cameo—even those generated by others who have your permission—use the "Videos Featuring Me" dashboard. Each video displays a "Request Removal" option. This submits a takedown request to the video's creator and to OpenAI's moderation team. Creators receive 48 hours to voluntarily remove the content; if they don't respond, OpenAI's team reviews the request and may force removal if consent appears invalid or misused.
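The takedown flow described above amounts to a small state machine with a 48-hour deadline. The Python sketch below is one way to track it on your side, for example in a spreadsheet export or internal tool; the status names are invented for illustration and do not correspond to labels in the Sora app.

```python
from datetime import datetime, timedelta, timezone

CREATOR_RESPONSE_WINDOW = timedelta(hours=48)  # window stated in this guide

def removal_status(requested_at, now, creator_removed):
    """Classify a removal request (hypothetical status names)."""
    if creator_removed:
        return "removed_by_creator"
    if now < requested_at + CREATOR_RESPONSE_WINDOW:
        return "awaiting_creator"
    return "escalated_to_moderation"

requested = datetime(2025, 10, 1, 9, 0, tzinfo=timezone.utc)
print(removal_status(requested, requested + timedelta(hours=12), False))
# awaiting_creator
print(removal_status(requested, requested + timedelta(hours=49), False))
# escalated_to_moderation
```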

This revocation architecture creates practical challenges for professional collaborations. If you grant Cameo access to a client for a project, then later revoke access, they lose the ability to regenerate or modify videos featuring you. Plan project scope and timeline carefully to avoid situations where revocation disrupts legitimate ongoing work. Consider time-limited permissions that automatically expire after a project deadline rather than requiring manual revocation.

Legal Considerations by Jurisdiction

Consent requirements and legal liability vary significantly across jurisdictions. In the United States, no federal law specifically governs AI-generated likeness, though several states have enacted relevant legislation. California's AB 2602 prohibits distribution of deceptive AI-generated media depicting individuals without their consent, with penalties up to $10,000 per violation. New York's recently passed legislation requires explicit consent for commercial use of AI-generated likenesses. Texas has similar provisions under its SHIELD Act.

The European Union's GDPR treats biometric data (including facial geometry and voice characteristics) as sensitive personal data requiring explicit, informed consent. This sets a higher legal bar than ordinary data processing. The EU AI Act, entering into force in phases through 2027, classifies systems that generate "deep fakes" or manipulate biometric data as high-risk AI, requiring conformity assessments and transparency obligations. Sora 2's consent architecture appears designed to comply with these requirements, though full legal clarity awaits regulatory guidance.

China's Personal Information Protection Law (PIPL) and Deep Synthesis Regulation require consent for synthetic media featuring individuals and mandate visible watermarks identifying AI-generated content. Sora 2's watermarking satisfies the technical requirement, though the consent verification process may conflict with PIPL's data localization requirements, potentially explaining the platform's unavailability in China at launch.

For professional users creating commercial content featuring Cameos, supplementary legal protections are prudent even though Sora 2 handles basic consent. These protections include obtaining signed model release forms that explicitly authorize AI-generated derivative works, maintaining records of consent outside Sora 2's platform in case of future disputes, verifying that talent understands how their likeness will be used in AI-generated contexts, and consulting legal counsel in jurisdictions with specific AI-generated media laws.

The intersection of employment law and AI-generated likenesses creates particularly complex scenarios. If you create a Cameo of an employee for company content, does that employee retain rights to their likeness after leaving the company? Can they revoke consent and disrupt existing marketing materials? Clear contractual agreements addressing these questions prevent costly disputes. Consider provisions that grant the employer perpetual license to use AI-generated content created during employment, even if the individual later revokes their Cameo, or require employees to maintain Cameo availability for a reasonable transition period after departure.

Upload Requirements and Best Practices

Successful Cameo verification depends on meeting technical requirements and following best practices that improve both approval rates and generation quality. Understanding what the system looks for in verification videos allows you to optimize your recording for best results.

Technical Specifications

The Sora iOS app accepts verification videos with specific parameters. Video duration must fall between 5-20 seconds—recordings shorter than 5 seconds provide insufficient data for identity encoding, while recordings longer than 20 seconds create unnecessary processing overhead without quality improvement. The optimal duration is 8-12 seconds, providing enough variety in angles and expressions while keeping file size manageable.

Resolution requirements mandate at least 720p (1280×720 pixels), though 1080p (1920×1080) provides better quality. The app automatically captures at your device's maximum capability, so newer iPhones recording in 4K will deliver higher quality source material. However, the identity encoding process downsamples to a standardized representation, so the difference between 1080p and 4K source material proves marginal in final generation quality.

Frame rate should remain at 30fps minimum, with 60fps preferred for newer devices that support it. Higher frame rates capture more motion data during the head-turn sequence, improving the three-dimensional facial reconstruction. Audio must record at 44.1kHz or higher sample rate with mono or stereo channels. The app captures voice characteristics across the frequency spectrum, so avoid Bluetooth headphones or external microphones that might introduce compression or frequency cutoffs.

File size typically ranges from 30-150MB depending on resolution, duration, and device compression settings. The app handles compression and upload automatically, but verify adequate available storage on your device before beginning verification. The upload process requires a stable internet connection—cellular data works but WiFi provides more reliable transmission for files this size.
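The limits above can be collected into a small pre-flight check. This is a minimal sketch, not part of the Sora app: `RecordingSpec` and `check_recording` are hypothetical names, and the thresholds are simply the figures quoted in this section.

```python
from dataclasses import dataclass


@dataclass
class RecordingSpec:
    """Properties of a candidate verification recording (hypothetical container)."""
    duration_s: float
    width: int
    height: int
    fps: float
    audio_sample_rate_hz: int


def check_recording(spec: RecordingSpec) -> list[str]:
    """Return a list of problems; an empty list means the clip fits the quoted limits."""
    problems = []
    if not 5 <= spec.duration_s <= 20:
        problems.append("duration must be between 5 and 20 seconds")
    # Compare the shorter side so portrait and landscape clips are treated alike.
    if min(spec.width, spec.height) < 720:
        problems.append("resolution must be at least 720p")
    if spec.fps < 30:
        problems.append("frame rate must be at least 30 fps")
    if spec.audio_sample_rate_hz < 44_100:
        problems.append("audio sample rate must be at least 44.1 kHz")
    return problems


def in_optimal_duration(spec: RecordingSpec) -> bool:
    """True when the clip falls in the 8-12 second range described as optimal."""
    return 8 <= spec.duration_s <= 12


clip = RecordingSpec(duration_s=10, width=1920, height=1080, fps=30,
                     audio_sample_rate_hz=48_000)
print(check_recording(clip), in_optimal_duration(clip))
```

Because the app records and uploads the clip itself, a check like this is mainly useful as a mental checklist or for test recordings made beforehand with the regular camera.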

Lighting and Environment

Proper lighting proves critical for verification success. The ideal setup uses soft, diffused light from approximately 45 degrees in front of you, slightly above eye level. This positioning minimizes harsh shadows while providing enough contrast for facial feature detection. Avoid direct sunlight or harsh artificial light that creates strong shadows, backlighting that silhouettes your features, or dim lighting that forces the camera sensor to boost gain, introducing noise.

If recording indoors, position yourself facing a window with sheer curtains during daytime for natural, diffused light. For artificial lighting, desk lamps positioned at 45-degree angles work well—avoid overhead ceiling lights that create unflattering shadows under eyes and nose. Color temperature matters less than even distribution, though neutral white (4000-5000K) reproduces skin tones most accurately.

Background selection impacts both verification success and generation quality. Choose a plain, neutral background that contrasts with your hair and skin tone. Light to medium gray, beige, or soft blue backgrounds work well for most people. Avoid busy patterns, bright colors, or environments with multiple moving elements. The system performs background segmentation to isolate your figure, and complex backgrounds can confuse this process.

Ensure adequate space around your head and shoulders in the frame. The verification interface displays guidelines, but generally maintain 20-30% margin around your silhouette. This prevents features from being cut off when you turn your head side-to-side during the recording sequence.

Voice and Audio Quality

Clear audio capture proves as important as visual quality since Cameos integrate both appearance and voice. Position your device's microphone approximately 18-24 inches from your mouth—close enough for clear capture, far enough to avoid plosive sounds (hard P and B sounds causing audio spikes). Speak at your natural conversational volume and pace. The system learns your typical speech patterns, so exaggerated enunciation or volume changes actually decrease quality.

Choose a quiet environment with minimal ambient noise. Close windows to reduce street traffic sounds, turn off fans or HVAC systems temporarily, and ensure no television or music plays in the background. If you wear hearing aids or cochlear implants, the app accommodates these—just verify they function normally during recording.

The phrase you'll read aloud changes each verification session but typically includes your name or the current date. This personalization prevents using pre-recorded audio and establishes a clear consent timestamp. Practice reading the phrase once silently before starting the official recording to ensure smooth delivery without stumbles or long pauses.

Common Verification Failures and Solutions

Users encounter several recurring verification issues. Insufficient lighting causes approximately 40% of verification failures according to user reports in Sora community forums. The system cannot adequately detect facial landmarks in dim conditions or when harsh shadows obscure features. Solution: Verify your recording environment before starting the official verification by taking a test photo—if facial details appear clear and colors look natural in the photo, lighting is likely adequate.

Obscured features account for roughly 25% of failures. Hats, hooded sweatshirts, masks, heavy makeup, or bold glasses obscure the facial geometry the system needs to capture. Solution: Remove or adjust accessories that cover any portion of your face. If you normally wear glasses, you can keep them on—standard prescription glasses don't obscure features enough to cause failure. However, large sunglasses or heavily tinted lenses will trigger rejection.

Movement issues cause about 20% of failures. Turning your head too quickly during the rotation sequence or moving in and out of frame confuses the tracking system. Solution: Follow the on-screen pace indicator, which displays circles or arrows showing when to begin and end each rotation. Slow, smooth movements work better than quick jerks. Think "smooth pan" like a camera movement, not "snapping" your head position.

Audio problems represent approximately 10% of failures. Background noise drowning out your voice, microphone blockage, or extreme volume (too loud or too soft) prevents proper voice capture. Solution: Verify microphone access permissions in iOS Settings > Sora > Microphone. Test audio recording in a different app (Voice Memos) to confirm your microphone functions properly. Ensure fingers don't cover microphone ports, which vary by device model.

The remaining 5% of failures stem from miscellaneous technical issues like poor network connectivity interrupting upload, iOS version incompatibility, or transient server-side processing errors. These typically resolve on retry. If you experience repeated failures after addressing lighting, obstruction, movement, and audio issues, contact OpenAI support through the in-app help system for diagnostic assistance.
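To decide what to check first, the approximate failure shares quoted above can be sorted and accumulated. The sketch below only restates those community-reported percentages; the dictionary keys and function names are illustrative.

```python
# Approximate share of failed verifications per cause, as quoted in this section.
FAILURE_SHARES = {
    "lighting": 40,
    "obscured features": 25,
    "movement": 20,
    "audio": 10,
    "other technical issues": 5,
}


def cumulative_coverage(shares: dict[str, int]) -> list[tuple[str, int]]:
    """Return (cause, cumulative percent) pairs, most common cause first."""
    running_total = 0
    coverage = []
    for cause in sorted(shares, key=shares.get, reverse=True):
        running_total += shares[cause]
        coverage.append((cause, running_total))
    return coverage


for cause, covered in cumulative_coverage(FAILURE_SHARES):
    print(f"{cause}: {covered}% of reported failures addressed so far")
```

Read this way, fixing lighting and removing face-covering accessories addresses roughly 65% of the reported failures before you need to look at movement or audio.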

Generating Videos with Real People

Once your Cameo verification completes, you can integrate yourself into AI-generated videos through the prompt interface. Understanding how to structure prompts, set expectations for generation quality, and optimize for processing efficiency ensures better results with fewer iterations.

Prompt Engineering for Cameo Integration

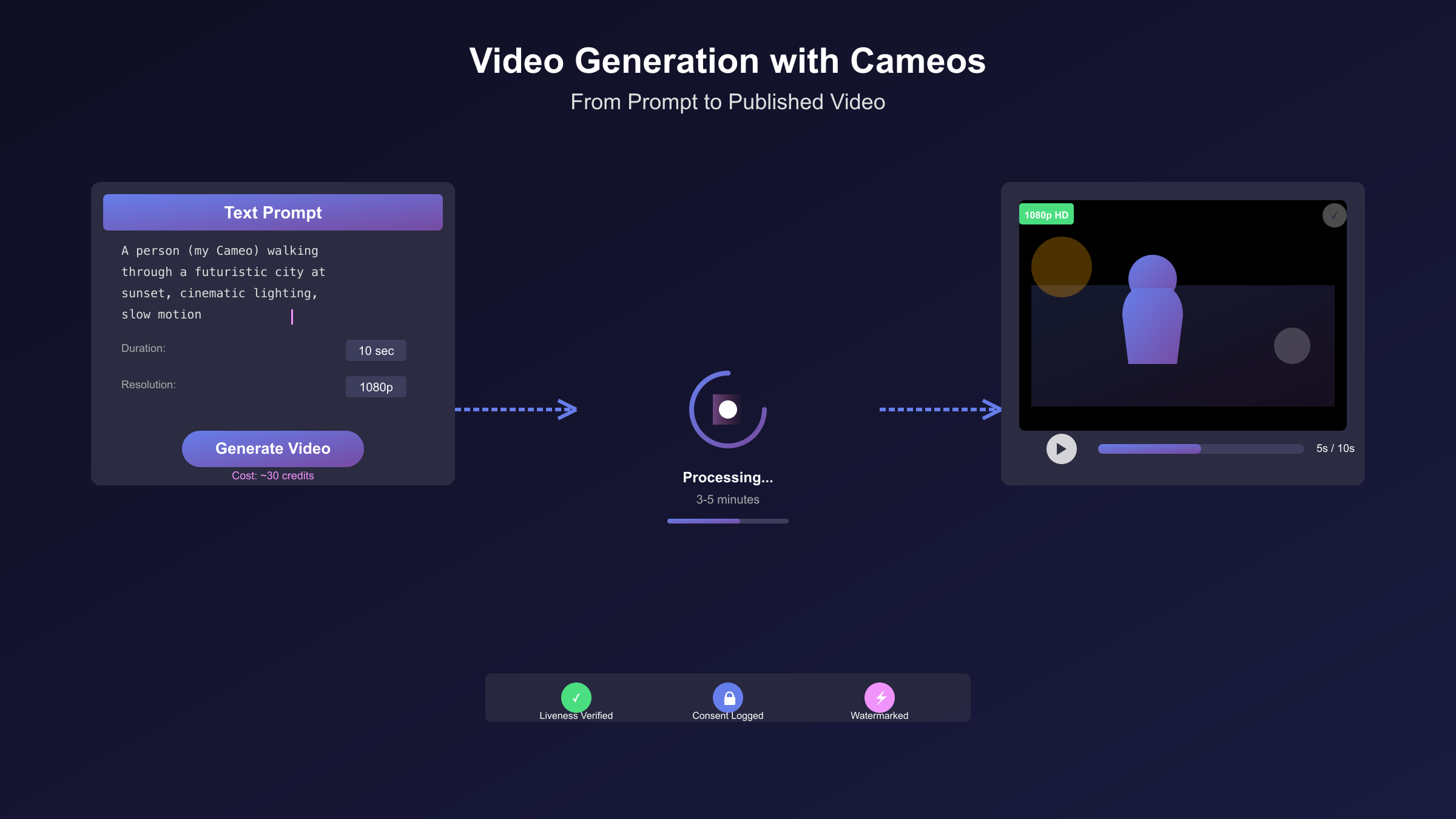

Writing effective prompts for Cameo-featuring videos follows similar principles to pure AI generation but requires additional consideration for how your likeness integrates into the scene. Start prompts with explicit subject identification using the format "A person (your Cameo)" to clearly signal that you want your verified identity in the scene rather than a synthetic character. For example: "A person (my Cameo) walking through a futuristic city at sunset."

Describe environment and context before specifying actions or camera movements. The generation model builds the scene first, then integrates your Cameo into that environment with appropriate lighting, scale, and interaction. Effective prompts flow from general to specific: "In a modern art gallery with white walls and spotlights (environment), a person (my Cameo) examining a colorful abstract painting (action), slow pan from left to right revealing their expression of wonder (camera movement)."

Specify lighting conditions if you want consistency with your verification recording or deliberately different atmospheric effects. The system adapts your appearance to match the scene's lighting, but extreme contrasts—if your verification was brightly lit and you request a dark noir scene—may produce less realistic results. Moderate lighting shifts integrate more naturally: "A person (my Cameo) sitting at a cozy cafe in warm golden hour light" works better than "A person (my Cameo) in complete darkness with a single spotlight."

Voice integration occurs automatically when you include dialogue in prompts. Specify what you want your Cameo to say using quotation marks: "A person (my Cameo) looking directly at camera and saying 'Welcome to our new product launch.'" The system generates both lip movements synchronized to the speech and audio matching your captured voice characteristics. However, voice synthesis quality degrades for speech that diverges significantly from your verification phrase in accent, emotion, or prosody. Natural, conversational dialogue resembling your verification tone works best.

For scenarios featuring multiple people, explicitly distinguish your Cameo from other characters: "A person (my Cameo) shaking hands with a professional colleague in business attire, standing in a corporate office lobby." The model generates the colleague as a synthetic character while rendering your Cameo with your verified identity. You can include multiple Cameos if you have permission to use others' identities: "A person (my Cameo) and a person (colleague's Cameo) presenting together in a conference room."
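The general-to-specific ordering described above (environment, then subject and action, then camera movement, with optional dialogue) can be captured in a small helper that only assembles text. This is a sketch: `build_cameo_prompt` is a hypothetical function that produces a string to paste into the prompt field, and it does not call any Sora API.

```python
from typing import Optional


def build_cameo_prompt(
    environment: str,
    action: str,
    camera: Optional[str] = None,
    dialogue: Optional[str] = None,
    subject: str = "a person (my Cameo)",
) -> str:
    """Assemble a prompt in the order: environment, subject + action, camera."""
    subject_clause = f"{subject} {action}"
    if dialogue:
        # Dialogue goes in quotation marks so it reads as spoken text.
        subject_clause += f" and saying '{dialogue}'"
    parts = [f"In {environment}", subject_clause]
    if camera:
        parts.append(camera)
    return ", ".join(parts) + "."


print(build_cameo_prompt(
    environment="a modern art gallery with white walls and spotlights",
    action="examining a colorful abstract painting",
    camera="slow pan from left to right revealing their expression of wonder",
))
```

Keeping the pieces separate makes it easier to change one element between iterations, for example the camera movement, while leaving the rest of the prompt untouched.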

Processing Time and Credit Consumption

Cameo-featuring video generation requires more computational resources than pure AI generation, affecting both processing time and credit consumption. A typical 10-second video at 1080p resolution consumes approximately 25-35 credits, compared to 15-20 credits for equivalent pure AI generation. The overhead accounts for identity verification checks, consistency enforcement across frames, and audio-visual synchronization when dialogue is included.

Processing time for Cameo videos averages 3-5 minutes for 5-10 second clips, 5-8 minutes for 10-20 second clips, and 8-12 minutes for the maximum 60-second duration (Pro tier only). These times assume standard queue loads; during peak usage periods (evenings and weekends in US time zones), processing may extend 50-100% longer. Plus tier subscribers receive priority queue access that reduces wait times by approximately 30% compared to free tier users, while Pro tier receives highest priority with typical processing 50% faster than free tier.
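For planning purposes, the quoted ranges can be turned into a rough lookup. The sketch below is an illustration only: `estimate_processing_minutes` is a hypothetical helper, it covers just the clip lengths the figures above mention, and it applies the 50-100% peak slowdown as a 1.5x multiplier on the low end and 2x on the high end. Tier priority is left out because the text does not say which tier the base figures assume.

```python
def estimate_processing_minutes(clip_seconds: float, peak: bool = False) -> tuple[float, float]:
    """Return a (low, high) estimate in minutes based on the quoted ranges."""
    if 5 <= clip_seconds <= 10:
        low, high = 3.0, 5.0
    elif 10 < clip_seconds <= 20:
        low, high = 5.0, 8.0
    elif clip_seconds == 60:
        low, high = 8.0, 12.0
    else:
        raise ValueError("no quoted estimate for this clip length")
    if peak:
        # Peak periods are described as taking 50-100% longer.
        low, high = low * 1.5, high * 2.0
    return (low, high)


print(estimate_processing_minutes(15))             # off-peak range
print(estimate_processing_minutes(15, peak=True))  # evenings and weekends, US time
```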

Credit efficiency strategies become important for users with limited monthly allocations. Consider generating shorter clips and combining them in external editing software rather than requesting full-length scenes in a single generation. A 20-second scene created as four 5-second clips costs somewhat more upfront (4 × 20 credits = 80 credits) than one 20-second clip (60-70 credits), but it provides more opportunities to refine individual segments. If one segment doesn't meet expectations, you regenerate only that segment for about 20 credits rather than spending another 60-70 credits on the entire long clip, so the split approach becomes cheaper as soon as a retake is needed.
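The trade-off depends on how many retakes you end up needing. The following sketch works through the arithmetic with the figures quoted above (20 credits per 5-second clip, and 65 credits as an assumed midpoint of the 60-70 range for a 20-second clip); the function names are illustrative.

```python
def split_cost(segments: int, credits_per_segment: int, segments_redone: int) -> int:
    """Total credits when a scene is built from short clips and some are redone."""
    return (segments + segments_redone) * credits_per_segment


def single_cost(credits_per_clip: int, full_redos: int) -> int:
    """Total credits when the whole scene is one clip and is redone in full."""
    return (1 + full_redos) * credits_per_clip


for retakes in range(3):
    split = split_cost(segments=4, credits_per_segment=20, segments_redone=retakes)
    single = single_cost(credits_per_clip=65, full_redos=retakes)
    print(f"{retakes} retake(s): split={split} credits, single={single} credits")
```

With no retakes the single clip is cheaper (65 versus 80 credits). With one retake the split approach costs 100 credits against 130, and the gap widens with each further retake.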

Resolution significantly impacts credit consumption. Generating at 720p consumes approximately 40% fewer credits than 1080p for equivalent duration. If your distribution platform will compress the video anyway—social media posts, for example—generating at 720p then upscaling with editing software can save substantial credits while delivering visually acceptable results for screen viewing.

Iteration frequency affects effective credit cost per satisfactory output. Users typically need 1-3 generations to achieve desired results for simple scenes, but complex scenarios requiring specific expressions, precise timing, or challenging environmental integration may require 5+ iterations. Effective prompt engineering and realistic expectations about what Cameos can deliver reduce iteration waste. Study successful examples in Sora's community gallery before attempting complex personal projects.
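Resolution and iteration count multiply together, so it helps to budget a range rather than a single number. This is a rough sketch using the figures from this section (25-35 credits for a 10-second 1080p Cameo clip, about 40% fewer credits at 720p); `budget_range` is a hypothetical helper.

```python
def budget_range(
    credits_low: float,
    credits_high: float,
    iterations_low: int,
    iterations_high: int,
    use_720p: bool = False,
) -> tuple[float, float]:
    """Return (best case, worst case) credits for one satisfactory clip."""
    # 720p is described as costing roughly 40% fewer credits than 1080p.
    factor = 0.6 if use_720p else 1.0
    best = round(credits_low * factor * iterations_low, 1)
    worst = round(credits_high * factor * iterations_high, 1)
    return (best, worst)


# Simple scene: 1-3 generations of a 10-second Cameo clip.
print(budget_range(25, 35, 1, 3))                  # 1080p
print(budget_range(25, 35, 1, 3, use_720p=True))   # 720p, upscaled afterwards
```

For a simple scene this gives 25 to 105 credits at 1080p and 15 to 63 credits at 720p. A complex scene that needs five or more iterations pushes the 1080p worst case to 175 credits or more.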

Quality Expectations and Realism

Cameo-generated videos achieve photorealistic integration under optimal conditions but encounter limitations in specific scenarios. Best-case quality occurs in medium shots (showing head and upper torso) with natural actions like walking, talking, gesturing, or sitting. The system maintains identity consistency, generates appropriate facial expressions matching the scene context, synchronizes lip movements with synthesized speech, and integrates lighting and shadows realistically.

Quality degrades in several situations. Extreme close-ups that exceed the resolution detail captured in your verification recording may appear softer or less sharp than other scene elements. Highly specific facial expressions—like exaggerated surprise, intense anger, or technical movements like winking—may appear unnatural if those expressions didn't feature in your verification clip. Complex physical actions like dancing, sports movements, or precise hand gestures show less realism than general movement.

The uncanny valley effect—where almost-human representations trigger discomfort—occasionally appears in Cameo videos, particularly in extended clips where viewers can scrutinize micro-expressions and subtle movements over time. This effect proves less pronounced than in pure AI-generated humans because your Cameo starts from authentic captured data, but it remains present. For professional use cases like marketing videos or educational content, keeping clips to 5-10 seconds minimizes uncanny valley detection while maintaining viewer engagement.

Audio-visual synchronization quality depends on dialogue complexity. Simple sentences with clear pacing synchronize well, producing convincing speech where lip movements match audio timing and your synthesized voice sounds natural. Complex dialogue with rapid pacing, technical terminology, or emotional extremes may show sync drift or audio artifacts. The system performs better with conversational speech patterns than formal narration or dramatic performance.

Environmental interaction realism varies by scenario complexity. Your Cameo naturally integrates with static environments and simple props—sitting at a desk, holding a coffee cup, standing in a landscape. Complex interactions like typing on a keyboard, using tools, or manipulating small objects show less precision. The system understands general spatial relationships but struggles with fine motor control representation.

Quality Comparison: Real People vs AI Characters

Deciding when to use Cameos versus pure AI-generated characters depends on weighing authenticity needs against practical constraints. Different use cases favor different approaches based on audience recognition requirements, production timelines, and quality expectations.

| Dimension | Cameos (Real People) | Pure AI Characters | Winner for Most Use Cases |
|---|---|---|---|
| Identity Authenticity | Perfect preservation of specific person | Generic synthetic faces | Cameos (when recognition matters) |
| Setup Overhead | 5-10 minutes verification | Zero (immediate generation) | Pure AI (faster start) |
| Per-Video Generation Time | 3-12 minutes | 2-8 minutes | Pure AI (25-40% faster) |
| Credit Cost (10sec, 1080p) | 25-35 credits | 15-20 credits | Pure AI (40% cheaper) |
| Facial Expression Range | Limited to verification capture | Unlimited variety | Pure AI (more flexible) |
| Voice Consistency | Your actual voice characteristics | Any voice style or accent | Depends on use case |
| Legal/Consent Complexity | Requires consent management | No consent needed | Pure AI (simpler legally) |
| Uncanny Valley Risk | Lower (starts from real data) | Higher (fully synthetic) | Cameos (more natural) |
| Commercial Use Confidence | High (verified consent) | Medium (no real person issues) | Cameos (legally safer) |

The table reveals that neither approach universally dominates. Cameos excel when audience recognition of a specific individual adds value—personal brand content, executive communications, testimonials, educational instruction, or any scenario where "you" matters more than "a person." Pure AI characters excel for fictional narratives, abstract concepts, bulk content generation, or scenarios where specific identity is irrelevant.

Consider production context when choosing. A marketing video featuring the company CEO benefits from Cameos even with the extra setup time and cost, because authentic leadership presence builds trust. That same company's explainer video about a technical process might use pure AI characters since viewer connection to a specific person doesn't enhance comprehension.

Budget constraints also drive decisions. Teams with limited credit allocations should reserve Cameos for high-value content where authenticity justifies the 40-70% cost premium, using pure AI generation for supplementary or background content. Mixing approaches strategically—Cameos for key spokesperson shots, pure AI for supporting scenes—optimizes both budget and authenticity.

Quality expectations matter differently across contexts. Professional marketing materials demand the highest realism, favoring Cameos' natural foundation over pure AI's occasional artifacts. Social media content tolerates more stylization, making pure AI's flexibility and speed advantageous. Educational content falls between—instructor recognition helps student engagement (favoring Cameos), but conceptual illustrations work fine with pure AI characters.

Timeline pressure influences the trade-off. If you need content immediately and haven't verified a Cameo, pure AI generation lets you proceed without setup delay. For planned production with advance notice, spending 10 minutes on Cameo verification unlocks better authenticity for all future projects involving that person.
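The considerations in this section can be condensed into a simple rule of thumb. The sketch below is one possible reading of the guidance, not an official decision procedure; `suggest_approach` and its four yes/no inputs are illustrative simplifications.

```python
def suggest_approach(
    identity_matters: bool,
    cameo_verified: bool,
    needed_immediately: bool,
    credits_tight: bool,
) -> str:
    """Suggest 'cameo', 'pure AI', or 'mixed' from four yes/no questions."""
    if not identity_matters:
        # Fictional or generic characters: specific identity adds no value.
        return "pure AI"
    if needed_immediately and not cameo_verified:
        # No time for the verification step before the deadline.
        return "pure AI"
    if credits_tight:
        # Reserve Cameos for key spokesperson shots, pure AI for supporting scenes.
        return "mixed"
    return "cameo"


# A CEO announcement planned in advance with a comfortable budget.
print(suggest_approach(identity_matters=True, cameo_verified=True,
                       needed_immediately=False, credits_tight=False))
```

Real projects involve more nuance than four booleans, such as audience, legal review, and quality tolerance, so treat the output as a starting point for discussion.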

International Access: Workarounds and Alternatives

The iOS app's US and Canada restriction creates significant barriers for international users seeking to use Sora 2's Cameos feature. Understanding available workarounds and alternative platforms allows creators worldwide to access similar capabilities despite geographic limitations.

Geographic Restrictions and Reasons

OpenAI limited initial availability to US and Canadian iOS users for several technical and regulatory reasons. Content moderation challenges vary by jurisdiction—different countries impose different restrictions on AI-generated content, synthetic media, and deepfake technology. Launching in two jurisdictions with relatively unified regulatory frameworks allows OpenAI to refine moderation systems before expanding to markets with divergent requirements.

Payment processing complexity also influences rollout strategy. The credit-based pricing model requires payment infrastructure that varies significantly across regions. US and Canada share similar payment ecosystems, while international markets require integration with region-specific payment methods, currency conversion systems, and local tax compliance. China's Personal Information Protection Law and data localization requirements may conflict with OpenAI's cloud infrastructure, potentially explaining the exclusion of the world's largest market.

Server infrastructure and latency considerations affect user experience quality. Cameo verification and video generation require real-time processing that degrades with high latency. OpenAI likely concentrates server capacity in North America initially, with plans to expand data center presence as demand grows and the technology matures.

VPN and App Store Workarounds

International users can access Sora 2 through multi-step workarounds, though each approach introduces complications. The most common method involves creating a US or Canadian Apple ID, using a VPN service during app download and account creation, and maintaining VPN connectivity during Cameo verification to simulate US presence.

| Region | Official Access | Workaround Method | Technical Difficulty | Recommended Alternative |
|---|---|---|---|---|
| United States | Full (iOS) | N/A | N/A | Native Sora 2 |
| Canada | Full (iOS) | N/A | N/A | Native Sora 2 |
| United Kingdom | None | US App Store + VPN | Medium | Google Veo 3 (expected Q1 2026) |
| European Union | None | US App Store + VPN | Medium-High (GDPR conflicts) | Runway Gen-4, Synthesia |
| China | None | US App Store + VPN | High (payment, latency) | Domestic alternatives, API transit when available |
| Australia | None | US App Store + VPN | Medium | Wait for expansion (likely Q2 2026) |
| Japan | None | US App Store + VPN | Medium | Runway Gen-4 |
| Latin America | None | US App Store + VPN | Medium | Wait for expansion |

Creating a US Apple ID requires providing a US address (use a valid business address or mail forwarding service) and US payment method. Prepaid debit cards from US issuers sometimes work, or use US Apple Gift Cards purchased through third-party retailers that sell internationally. The Apple ID must remain set to the US region, as switching regions invalidates app downloads.

VPN selection matters for performance and reliability. Choose services with servers physically located in the US, low latency (under 100ms to OpenAI's servers), and stable connections that don't drop during multi-minute video generation. ExpressVPN, NordVPN, and Surfshark receive positive reports from Sora users attempting international access, though no VPN guarantees success as OpenAI may implement VPN detection.

For Chinese users specifically, additional challenges include the Great Firewall blocking many VPN protocols, payment processing difficulties (most Chinese credit cards won't process US App Store transactions), and significant latency even with quality VPN services. The comprehensive Sora 2 China user guide details specific strategies for mainland Chinese creators, including recommended VPN providers, payment card options, and latency optimization techniques. International users seeking ChatGPT Plus (which may eventually include Sora access) can explore fast subscription services like those detailed in guides about purchasing ChatGPT Plus from China, which also apply to Sora subscriptions.

API Access and Transit Services

When OpenAI eventually releases Sora 2's API (timeline unannounced), international users will gain an alternative access method that circumvents iOS app restrictions. API access allows integration into custom applications, web interfaces, or workflow automation regardless of geographic location. However, API availability doesn't guarantee Cameos support, because the consent verification process may remain tied to the mobile app due to liveness check requirements.

For users in regions with restricted OpenAI access, API transit services provide stable connectivity with optimizations for international markets. Services like laozhang.ai offer API routing with China-direct connectivity achieving approximately 20ms latency, transparent pricing with credit balances, and multi-node routing for 99.9% availability. When Sora's API launches, such services could enable Chinese and international creators to access generation capabilities without VPN complexity, though Cameos verification might still require the iOS app initially.

Alternative Platforms for International Users

While awaiting Sora 2 expansion, international creators can use competing platforms with fewer geographic restrictions:

Runway Gen-4 operates globally with no geographic restrictions, though it lacks direct Cameos-equivalent features. It excels at style transfer and motion control, making it suitable for scenarios where specific human identity matters less than creative control. Pricing starts at $12/month for limited credits, with professional tiers reaching $76/month for sustained production.

Google Veo 3, expected to launch in early 2026 based on announcements, will likely offer global availability from launch given Google's international infrastructure. Veo 3 may include similar consent-based likeness integration, though technical details remain unannounced. The Veo 3 guide tracks availability updates for international users.

HeyGen and Synthesia provide talking avatar generation with human likeness integration, though focused on presentation and educational content rather than cinematic video generation. Both operate globally with various payment methods accepted. However, their consent frameworks prove less rigorous than Sora 2's, creating potential legal risks for professional use.

International users should evaluate whether waiting for official Sora 2 expansion, attempting workarounds, or using alternative platforms best serves their needs. Creators requiring immediate production favor alternatives. Those prioritizing Sora 2's specific quality and consent framework may accept VPN complexity or wait for geographic expansion expected within 6-12 months based on OpenAI's historical rollout patterns.

Troubleshooting Common Issues

Despite careful preparation, users encounter various issues during Cameo verification and video generation. Understanding common problems and their solutions reduces frustration and credit waste.

Verification Won't Start: If the Cameo verification interface doesn't appear or crashes on launch, verify iOS version compatibility (iOS 15+ required), confirm adequate storage space (need 500MB+ free), restart the Sora app completely (force quit and relaunch), and check for app updates in the App Store. If issues persist, try deleting and reinstalling the app, though this resets any in-progress work.

Liveness Check Fails Repeatedly: Persistent liveness failures despite good lighting and proper positioning usually indicate motion-related issues. Ensure you're turning your head slowly and smoothly rather than quickly jerking from angle to angle. The system looks for continuous motion, not discrete positions. Record yourself practicing the motion to identify if you're moving too fast or inconsistently. Some users with facial paralysis or limited mobility may struggle with liveness checks; contact OpenAI support for accommodations in these cases.

Poor Generation Quality: If generated videos featuring your Cameo appear low-quality, blurry, or unrealistic compared to examples, verify your verification recording quality first. Regenerate your Cameo with better lighting and resolution if the original was suboptimal—there's no limit on re-verification. Adjust prompt specificity—vague prompts like "person walking" yield worse results than detailed prompts specifying environment, lighting, and action. Review successful community examples to calibrate expectations; some scenarios inherently challenge the system's current capabilities.

Consent Permissions Not Working: If you've granted someone access to your Cameo but they report inability to use it, verify the permission status in Settings > Cameos > Permissions. Ensure you selected "Share Access" rather than just viewing their request. Check that both users are signed into the correct OpenAI accounts. Permissions are account-specific, so signing in with different credentials makes the access appear lost. Permission changes take 5-15 minutes to propagate through OpenAI's systems, so wait briefly after making changes before testing.

Credit Consumption Higher Than Expected: Unexpected credit depletion typically results from failed generations still consuming credits, higher resolution than intended, or multiple users sharing an account. Check Settings > Billing > Credit History to review detailed consumption logs. Failed generations consume partial credits (typically 30-50% of a successful generation). If you have multiple collaborators using your account, their generations count against your total allocation. Consider upgrading tiers if legitimate usage consistently exceeds your plan's limits.

Generated Videos Won't Export: Export failures usually stem from insufficient device storage or network interruptions during download. Verify free storage space exceeds the video file size (typically 50-200MB depending on duration and resolution). Ensure stable connectivity—switch from cellular to WiFi if experiencing issues. The app caches generated videos server-side for 30 days, so you can retry export later if immediate attempts fail.

Ethics, Legal Considerations, and Best Practices

Using AI to generate videos featuring real people carries ethical implications beyond technical capability. Establishing guidelines for responsible use protects both creators and subjects while advancing beneficial applications of the technology.

When NOT to Use Cameos

Certain use cases present unacceptable ethical risks regardless of technical consent compliance. Avoid creating deceptive content where viewers might reasonably believe the depicted scenario occurred in reality, particularly for political, financial, or medical claims. Even with consent, generating videos that misrepresent someone's actions or statements can constitute defamation or fraud depending on jurisdiction and context.

Don't use Cameos to create content the subject would find humiliating, even with their consent, as power dynamics in professional relationships may pressure people to agree to uses they're uncomfortable with. Employment contexts particularly risk coercion—an employee may feel unable to refuse their employer's request to create a Cameo for fear of retaliation. Obtain truly voluntary consent through clear communication about intended uses and explicit confirmation that refusal carries no penalty.

Avoid generating content involving minors, even your own children, given the evolving legal landscape around AI-generated media depicting minors and the inability of children to provide informed consent to future uses of their likeness. Several jurisdictions are considering or have enacted specific restrictions on AI-generated content featuring minors.

Don't create Cameos of deceased individuals without explicit authorization from their estate, as publicity rights often extend beyond death and unauthorized use may violate both legal rights and ethical norms around respecting the deceased.

Professional Use Checklist

Organizations using Cameos for commercial content should implement systematic safeguards. Obtain written consent that specifically addresses AI-generated derivative works, not just general photography or video releases. Standard model release forms predate AI generation and may not adequately cover this use case. Include specific language like "I consent to my likeness being used in AI-generated video content created through systems like OpenAI Sora."

Maintain comprehensive records including original consent documentation, Sora platform consent logs, descriptions of intended use cases shared with the subject, and records of content review opportunities provided to subjects before publication. These records prove essential if disputes arise months or years after content creation.

Implement review processes where subjects can preview generated content before public distribution. This transparency builds trust and allows identification of unforeseen issues. While Sora 2's platform allows subjects to view all content featuring their Cameo, proactive sharing before publication demonstrates good faith.

Establish clear policies around consent revocation. If a subject withdraws consent, what happens to existing published content? Does the organization agree to remove it, or claim a perpetual license granted at creation time? Addressing these questions in advance prevents conflicts.

For sensitive contexts—executive communications, medical education, legal proceedings—consider additional vetting. Have legal counsel review both the consent framework and the generated content. Maintain higher standards of accuracy and transparency in these applications where viewer reliance on content carries greater consequences.
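The record-keeping items in this checklist can be tracked with a simple structure kept alongside your project files. This is a minimal sketch: `ConsentRecord` and its field names are assumptions chosen for illustration, not a legal template or anything Sora provides.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ConsentRecord:
    """One subject's consent paperwork (field names are illustrative)."""
    subject_name: str
    release_signed_on: Optional[date] = None      # written consent on file
    release_covers_ai_video: bool = False         # release names AI-generated works
    intended_uses: list[str] = field(default_factory=list)  # uses described to subject
    platform_consent_logged: bool = False         # platform consent log saved
    preview_offered_on: Optional[date] = None     # subject could review before release
    revocation_policy: str = ""                   # agreed handling of published content

    def missing_items(self) -> list[str]:
        """List the checklist items that are still undocumented."""
        gaps = []
        if self.release_signed_on is None:
            gaps.append("signed written consent")
        elif not self.release_covers_ai_video:
            gaps.append("release language covering AI-generated video")
        if not self.intended_uses:
            gaps.append("description of intended uses")
        if not self.platform_consent_logged:
            gaps.append("platform consent log")
        if self.preview_offered_on is None:
            gaps.append("pre-publication preview")
        if not self.revocation_policy:
            gaps.append("consent revocation policy")
        return gaps


record = ConsentRecord(subject_name="Alex Example")
print(record.missing_items())
```

Running `missing_items()` before publication gives a quick view of which documents still need to be collected for each person featured.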

Future-Proofing Your Approach

The legal and ethical framework governing AI-generated media continues evolving rapidly. Practices acceptable today may face restrictions tomorrow. Build adaptability into your workflow by using platforms with robust consent architectures that can adapt to changing requirements, documenting thoroughly to demonstrate good-faith compliance with standards as they existed at creation time, staying informed about emerging regulations in jurisdictions where you operate or distribute content, and maintaining conservative practices that exceed minimum legal requirements.

As detection technology improves and regulatory frameworks mature, expect increasing pressure for transparency about AI-generated content. Sora 2's watermarking provides technical compliance with emerging disclosure requirements, but consider also providing human-readable disclosures in content descriptions or credits. Statements like "This video features AI-generated scenes with consent from depicted individuals" set appropriate viewer expectations.

The Cameos feature represents a step toward ethically implemented AI video generation, but technology alone cannot ensure responsible use. Creators bear ultimate responsibility for using these tools in ways that respect individual rights, promote truthfulness, and advance beneficial applications while preventing harmful misuse. Approaching AI-generated media with the same ethical rigor you would apply to traditional media production establishes sustainable practices as the technology continues advancing.