Claude Code on the Web Complete Guide for Developers (2025)

Comprehensive tutorial on Claude Code web version covering setup, features, security, troubleshooting, and Web vs CLI comparison. Master browser-based AI coding in 2025.


Introduction

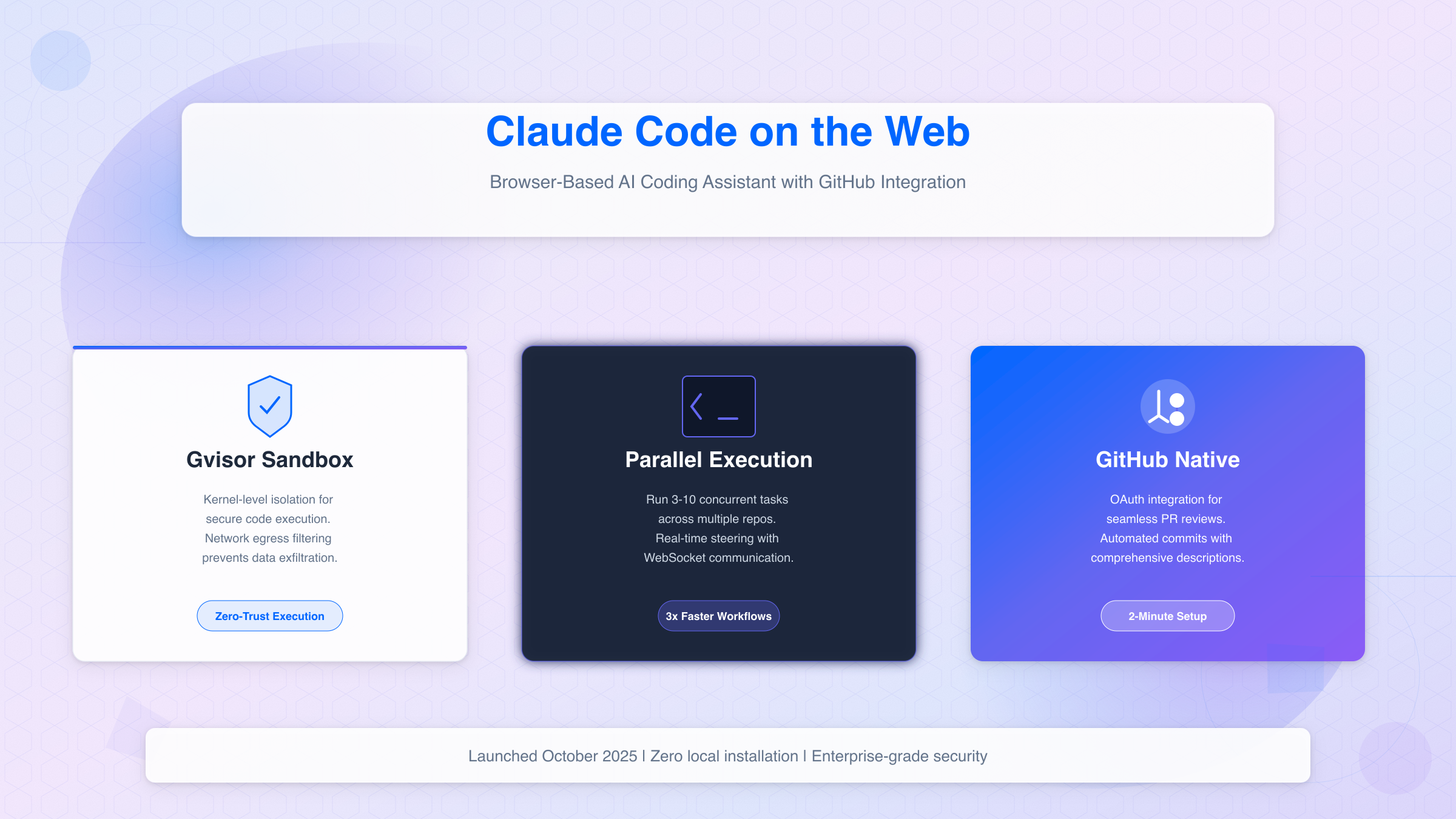

In October 2025, Anthropic launched Claude Code on the Web, transforming how developers interact with AI coding assistants. This browser-based version brings the power of Claude's programming capabilities directly to your workflow—no local installation, no complex configuration, just instant access through GitHub integration. As one of the best AI coding tools in 2025, it represents a major shift toward browser-based development environments.

The shift to web-based AI coding addresses a critical pain point: tool fatigue. Developers juggle multiple IDEs, terminal tools, and browser tabs daily. By embedding Claude Code on the Web directly into GitHub's pull request and issue workflows, Anthropic eliminates context switching while maintaining the isolation and security that enterprise teams demand.

This comprehensive guide covers everything you need to master Claude Code's web interface in 2025. You'll learn the technical architecture behind browser-based sandboxing, step-by-step setup procedures, advanced workflow patterns for production environments, and detailed troubleshooting solutions unavailable elsewhere. Whether you're evaluating this tool for your team or optimizing current usage, you'll gain actionable insights backed by concrete examples and comparison data.

We'll also address practical considerations often overlooked in official documentation: subscription barriers for international users, security threat modeling for sensitive codebases, and decision frameworks for choosing between web and CLI versions. By the end, you'll have a complete mental model for integrating this tool into your development stack.

What is Claude Code on the Web?

Architecture & Core Concept

Claude Code on the Web represents a fundamental shift in AI coding assistant architecture. Unlike traditional desktop tools that execute code on your local machine, this browser-based implementation runs all operations within gVisor-isolated sandboxes on Anthropic's infrastructure. Think of it as a secure, ephemeral virtual machine that spins up for each task, executes your request, and terminates, leaving no persistent state.

The technical foundation relies on three core components. First, GitHub OAuth integration provides seamless authentication and repository access without requiring API token management. Second, the sandbox execution environment uses Google's gVisor container runtime to isolate code execution from both Anthropic's infrastructure and your local systems. Third, real-time bidirectional communication enables you to steer Claude's actions mid-task, unlike batch-mode CLI tools where you submit requests and wait.

This architecture solves a critical security challenge: how do you give an AI assistant powerful code manipulation abilities without exposing sensitive systems? Traditional local execution grants full file system access—a risk when working with unvetted code or public repositories. Web-based sandboxing inverts this model: Claude operates in a restricted environment with explicit permissions, network filtering, and no persistent storage.

Core Architecture Insight: Each Claude Code web session creates a fresh Ubuntu-based container with network access limited to approved package repositories (npm, PyPI, etc.). The container cannot access your local network, read arbitrary files, or persist data between tasks. This "zero-trust execution" model is the foundation for enterprise adoption.

Key Differences from CLI Version

The command-line interface (CLI) version of Claude Code prioritizes local development workflows, while the web version optimizes for collaborative, security-conscious scenarios. Understanding these trade-offs guides your decision on which to deploy.

Table 1: Web vs CLI Feature Matrix

| Feature | Web Version | CLI Version | Key Consideration |
|---|---|---|---|
| Setup Time | <2 minutes | 5-10 minutes | Web requires only GitHub OAuth; CLI needs npm install + login |
| Sandbox Isolation | ✓ Strong (gVisor) | ✗ Local execution | Web isolates all operations; CLI has full system access |
| Parallel Tasks | ✓ 3-10 concurrent | One task per session | Web runs multiple tasks side by side; the CLI needs a separate session per parallel task |
| GitHub Integration | Native OAuth | Manual token setup | Web auto-syncs PRs; CLI requires gh CLI configuration |
| File System Access | Restricted (repo only) | Full local access | Web cannot read ~/.ssh/; CLI accesses entire filesystem |
| Network Control | Filtered egress | Unrestricted | Web blocks unknown domains; CLI uses your network |
| Offline Capability | ✗ Requires internet | ✗ Requires internet | Both send prompts to Anthropic's hosted models; the CLI only runs tools locally |

The web version's parallel task execution is particularly transformative for high-volume workflows. You can simultaneously run TypeScript compilation checks, update dependency lockfiles, and generate unit tests: three operations that a single CLI session would handle one after another. For teams reviewing multiple pull requests daily, this 3x concurrency boost translates to measurable time savings. For a detailed comparison with other AI coding assistants, see our Cursor vs GitHub Copilot ultimate comparison.

However, the web version's restricted file system access presents trade-offs. You cannot access local configuration files, SSH keys, or files outside the authorized repository. This limitation is intentional—it prevents credential leakage—but means certain workflows (like deploying to servers via SSH) remain CLI-exclusive.

Getting Started: Setup & First Task

Prerequisites & Account Setup

Before accessing Claude Code on the Web, you need two components: a paid Claude subscription (Pro at $20/month, or a higher tier) and a GitHub account with the repositories you want to integrate. The free tier of Claude does not include web-based code execution, so a paid plan is mandatory for this feature.

Account activation follows these steps:

- Subscribe to Claude Pro: Visit claude.ai and upgrade your account to Pro tier

- Verify access: Navigate to claude.ai/code to confirm the web interface is available

- Prepare repositories: Identify 1-3 test repositories for initial authorization (avoid production codebases during learning)

For international users without a US-issued credit card, the subscription can be completed through fastgptplus.com, which offers:

- Alternative payment methods: Alipay, WeChat Pay, and regional payment processors

- Quick activation: Full access within 5 minutes of payment confirmation

- Transparent pricing: ¥158/month with no hidden fees

- Multi-language support: Customer service available in English and Chinese

If you're interested in exploring Claude's API capabilities for programmatic integration, check out our comprehensive Claude API recharge and pricing guide for detailed cost comparisons and payment options.

Pro Tip: If you encounter regional restrictions during first login, use a US-based VPN or proxy to complete the initial GitHub authorization. Subsequent sessions work reliably from any geographic location.

The subscription grants access to all web features, including parallel task execution, GitHub integration, and 200K token context windows. Unlike API-based access, web usage does not incur per-token charges: your $20 monthly fee covers usage up to the plan's usage limits, which reset periodically, rather than billing each interaction.

GitHub Integration Walkthrough

GitHub integration is the gateway to Claude Code's web functionality. The OAuth-based authorization workflow takes under 2 minutes but requires careful permission management to avoid exposing sensitive repositories.

Complete Setup Process:

- Access the integration page: Visit claude.ai/code and click "Connect GitHub"

- Authenticate with GitHub: Log in with your GitHub credentials if not already authenticated

- Review permission requests: Claude Code requests read/write access to code, issues, and pull requests

- Select repositories: Choose specific repositories (recommended) or grant access to all repositories

- Authorize the application: Click "Authorize Anthropic" to complete the OAuth flow

- Verify connection: Return to claude.ai/code and confirm your repositories appear in the interface

- Test basic access: Open any pull request in your authorized repositories and mention @claude-code

⚠️ Security Warning: Start with 1-2 test repositories to validate the workflow before authorizing production codebases. You can always expand repository access later through GitHub's application settings at github.com/settings/applications.

The authorization grants Claude Code specific permissions: reading repository contents, creating branches, committing changes, and commenting on issues/PRs. Notably, it cannot access GitHub Secrets, deploy keys, or repository settings—minimizing the blast radius if credentials were compromised.

Common authorization issues typically stem from organizational policies. If you see "Organization approval required," your GitHub organization administrator must approve third-party application access. Contact your DevOps team with a link to Anthropic's security documentation to expedite approval.

Running Your First Task

Once GitHub integration is active, you can invoke Claude Code from any pull request or issue in authorized repositories. The interaction model differs from chat-based interfaces: instead of conversational exchanges, you issue specific, actionable requests.

First Task Tutorial: Automated PR Review

Navigate to any open pull request in your test repository and add a comment:

@claude-code Please review this PR for:

- Code quality and readability

- Potential bugs or edge cases

- Security vulnerabilities

Within 10-30 seconds, Claude Code responds with:

- Acknowledgment comment: Confirming task reception

- Analysis progress: Real-time updates as it examines files

- Detailed review: Line-by-line feedback with code snippets

- Summary recommendations: Actionable next steps

For your second task, try automated bug fixing:

@claude-code The test suite in tests/api/user_test.py is failing. Please:

1. Identify the root cause

2. Fix the failing tests

3. Create a new commit with the fix

Claude Code will clone the repository, run the test suite, analyze failures, apply fixes, and push a new commit—all within the sandboxed environment. You'll receive a comment linking to the new commit once complete.

💡 Best Practice: Start with read-only tasks (code review, analysis) before progressing to write operations (commits, PRs). This builds confidence in Claude's accuracy before granting it merge capabilities.

Steering Mid-Task: If Claude starts down the wrong path, you can interrupt by adding a follow-up comment:

@claude-code Stop. The issue is not in the test file—check the API endpoint logic in src/api/users.py instead.

This real-time steering capability distinguishes web-based Claude Code from batch-mode CLI tools. You maintain control throughout execution rather than discovering misalignments only after task completion.

For complex multi-step workflows, break requests into subtasks:

@claude-code Please:

1. Add TypeScript strict mode to tsconfig.json

2. Fix all type errors in src/components/

3. Update unit tests to match new types

Claude processes these sequentially, reporting progress after each step. If step 2 reveals unexpected complexity, you can halt before step 3 and adjust the approach.

Core Features Deep Dive

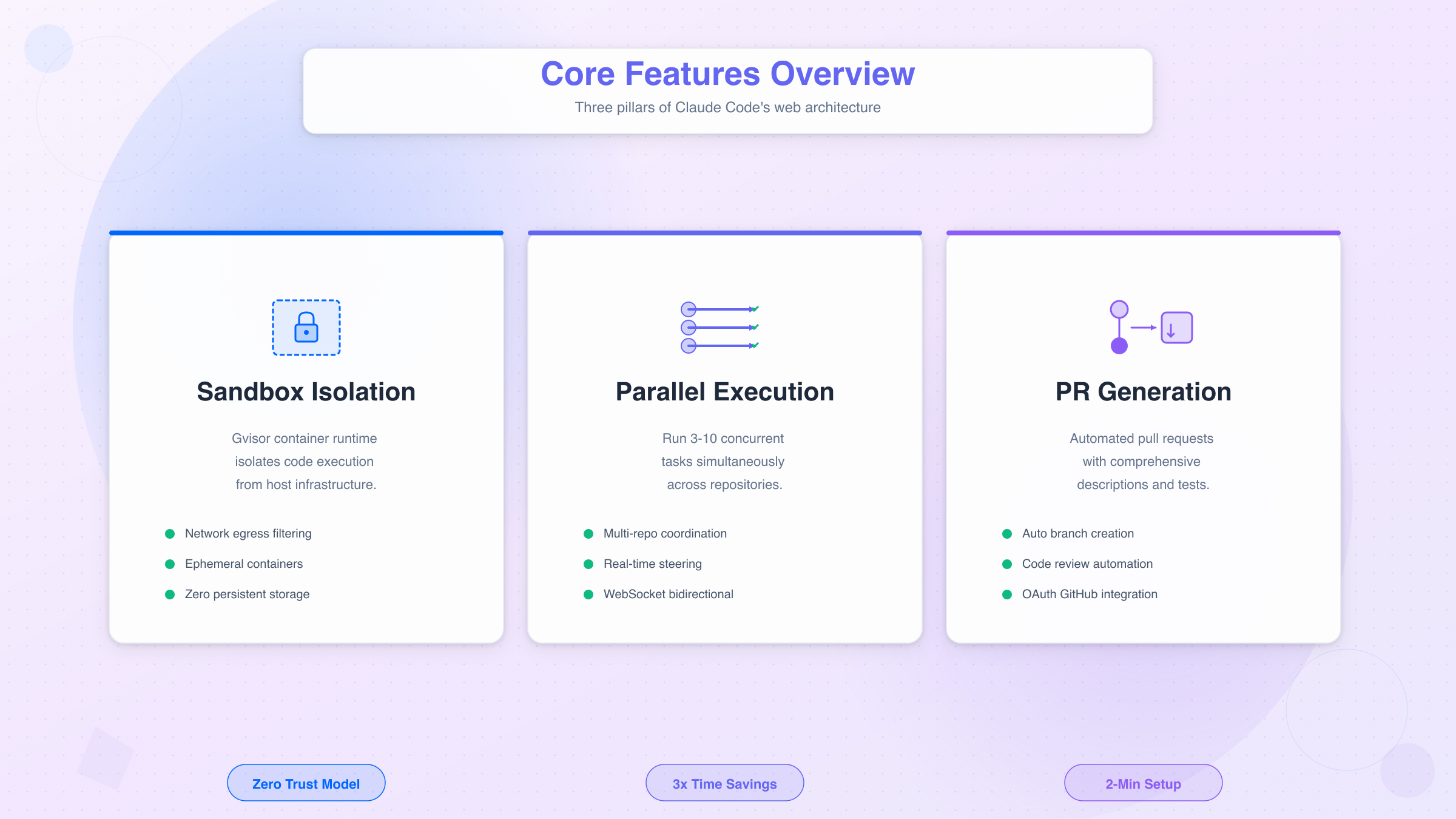

Sandbox Isolation & Security Model

The cornerstone of Claude Code's web architecture is gVisor-based sandboxing, which provides kernel-level isolation between Claude's execution environment and Anthropic's infrastructure. gVisor, developed by Google for securing containerized workloads, implements a user-space kernel that intercepts all system calls, preventing direct hardware access while maintaining Linux compatibility.

Each task spawns a fresh Ubuntu 22.04 container with a minimal file system: only the cloned repository, language runtimes (Python 3.11, Node.js 20, etc.), and package manager caches. The container cannot mount external volumes, access network file systems, or persist data beyond task completion. When Claude finishes a task, the entire container terminates and its ephemeral storage is wiped.

Network isolation operates through egress filtering: outbound connections are restricted to approved domains (github.com, registry.npmjs.org, pypi.org, etc.). This prevents data exfiltration to unknown servers while allowing legitimate package installations. Importantly, Claude Code cannot establish inbound connections or bind to network ports—eliminating entire classes of remote code execution attacks.

The security model assumes zero trust: even if malicious code were injected into a task, the sandbox boundaries prevent privilege escalation, lateral movement, or persistent compromise. For enterprise teams working with sensitive IP or compliance-regulated code, this architecture provides measurable risk reduction compared to local execution environments.

🔒 Security Guarantee: Anthropic's security documentation specifies that sandbox escapes are mitigated through layered defenses: gVisor isolation, seccomp-bpf system call filtering, AppArmor mandatory access controls, and regular security audits. No confirmed sandbox escapes have been reported since the October 2025 launch.

Parallel Task Execution

Unlike CLI-based coding tools that process requests serially, Claude Code on the Web supports concurrent task execution across multiple repositories and contexts. The Pro plan permits 3 simultaneous tasks, while Max (API) users can run 10+ concurrent operations.

Practical applications of parallelism include:

- Multi-repository updates: Synchronize dependency versions across microservices simultaneously

- Comprehensive testing: Run unit tests, integration tests, and linting in parallel workflows

- Cross-cutting refactors: Apply consistent code style changes to multiple modules concurrently

Code Example: Parallel Task Workflow

In three separate pull requests across your organization's repositories, simultaneously add:

# PR #123 in frontend-app

@claude-code Update React to v18.3 and fix breaking changes

# PR #456 in backend-api

@claude-code Update React to v18.3 and fix breaking changes

# PR #789 in admin-dashboard

@claude-code Update React to v18.3 and fix breaking changes

All three tasks execute in parallel, each in isolated sandboxes. You receive progress updates from each within seconds, rather than waiting for serial completion.

The system intelligently manages resource allocation: CPU-bound tasks (compilation, test execution) receive priority scheduling, while I/O-bound operations (git clones, package downloads) run in background threads. This ensures that parallel execution doesn't degrade individual task performance.

💡 Optimization Tip: Parallel tasks work best for independent operations without shared state. If tasks have dependencies (e.g., "update API schema, then update client code"), execute them sequentially to avoid race conditions.
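The per-plan concurrency cap can be modeled on the client side with a semaphore. The following is a hypothetical sketch, not Anthropic's scheduler: `run_task` is a stand-in that only sleeps, the two extra repository names are invented for illustration, and the limit of 3 mirrors the Pro plan figure quoted above. Five independent requests are submitted, but at most three run at once.

```python
import asyncio

MAX_CONCURRENT = 3  # assumption: mirrors the Pro plan limit quoted in this guide


async def run_task(repo: str, prompt: str, active: list[int], peak: list[int]) -> str:
    """Hypothetical stand-in for one Claude Code task (prompt is unused in this stub)."""
    active[0] += 1
    peak[0] = max(peak[0], active[0])  # record the highest number of simultaneous tasks
    await asyncio.sleep(0.05)  # simulate work inside the sandbox
    active[0] -= 1
    return f"{repo}: done"


async def run_all(tasks: list[tuple[str, str]]) -> tuple[list[str], int]:
    sem = asyncio.Semaphore(MAX_CONCURRENT)
    active, peak = [0], [0]

    async def limited(repo: str, prompt: str) -> str:
        # Wait for a free slot, so no more than MAX_CONCURRENT tasks run at once
        async with sem:
            return await run_task(repo, prompt, active, peak)

    results = await asyncio.gather(*(limited(r, p) for r, p in tasks))
    return list(results), peak[0]


if __name__ == "__main__":
    prompt = "Update React to v18.3 and fix breaking changes"
    # "docs-site" and "mobile-app" are hypothetical extra repositories
    repos = ["frontend-app", "backend-api", "admin-dashboard", "docs-site", "mobile-app"]
    results, peak = asyncio.run(run_all([(r, prompt) for r in repos]))
    print(results)
    print("peak concurrency:", peak)
```

Because `asyncio.gather` returns results in submission order, the output lines up with the repository list even though tasks finish out of order. Dependent tasks should not be gathered together; await them one after another instead.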

Real-Time Steering & Control

Traditional batch-mode coding tools suffer from a critical flaw: once you submit a request, you cannot course-correct until completion. Claude Code's real-time steering enables mid-task adjustments through follow-up comments in the GitHub thread.

Steering Use Cases:

- Correcting misunderstandings: "Stop—use the prod database config, not dev"

- Narrowing scope: "Focus only on the authentication module, skip the others"

- Adding requirements: "Also add error logging to the retry logic"

- Emergency halts: "Abort this task immediately"

The underlying WebSocket connection maintains bidirectional communication between your browser and Claude's execution environment. Follow-up comments arrive as interrupts that Claude evaluates every 2-5 seconds during task execution. High-priority signals (abort commands) preempt current operations within milliseconds.
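The interrupt model can be sketched as a loop that drains a message queue between work steps. This is a simplified, hypothetical illustration of the idea, not Claude Code's actual protocol: the step names, the queue, and the literal `abort` keyword are assumptions. An abort message stops the task; any other message is appended to the working instructions before the next step begins.

```python
import queue
from typing import Callable, Optional


def run_steps(
    steps: list[str],
    steering: "queue.Queue[str]",
    after_step: Optional[Callable[[str], None]] = None,
) -> tuple[list[str], list[str], bool]:
    """Run steps in order, checking for steering messages before each one.

    Returns (completed steps, accumulated instructions, aborted flag).
    """
    completed: list[str] = []
    instructions: list[str] = []
    for step in steps:
        # Drain every pending steering message before starting the next step
        while True:
            try:
                msg = steering.get_nowait()
            except queue.Empty:
                break
            if msg.strip().lower() == "abort":
                return completed, instructions, True  # high-priority signal: stop now
            instructions.append(msg)  # otherwise treat it as an added requirement
        completed.append(step)  # "perform" the step
        if after_step is not None:
            after_step(step)  # e.g. a reviewer posts a follow-up comment here
    return completed, instructions, False
```

For example, posting `abort` right after an `analyze` step means the following `edit` step never starts. Checking between steps rather than mid-step is a deliberate simplification: it keeps each step atomic, at the cost of a short delay before a correction takes effect.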

Code Example: Steering Workflow

Initial request:

@claude-code Refactor the user authentication module for better performance

After 30 seconds, you notice Claude is modifying the wrong files:

@claude-code The authentication module is in src/auth/, not src/users/. Please restart in the correct directory.

Claude acknowledges the correction and pivots—without needing to cancel and resubmit the entire task.

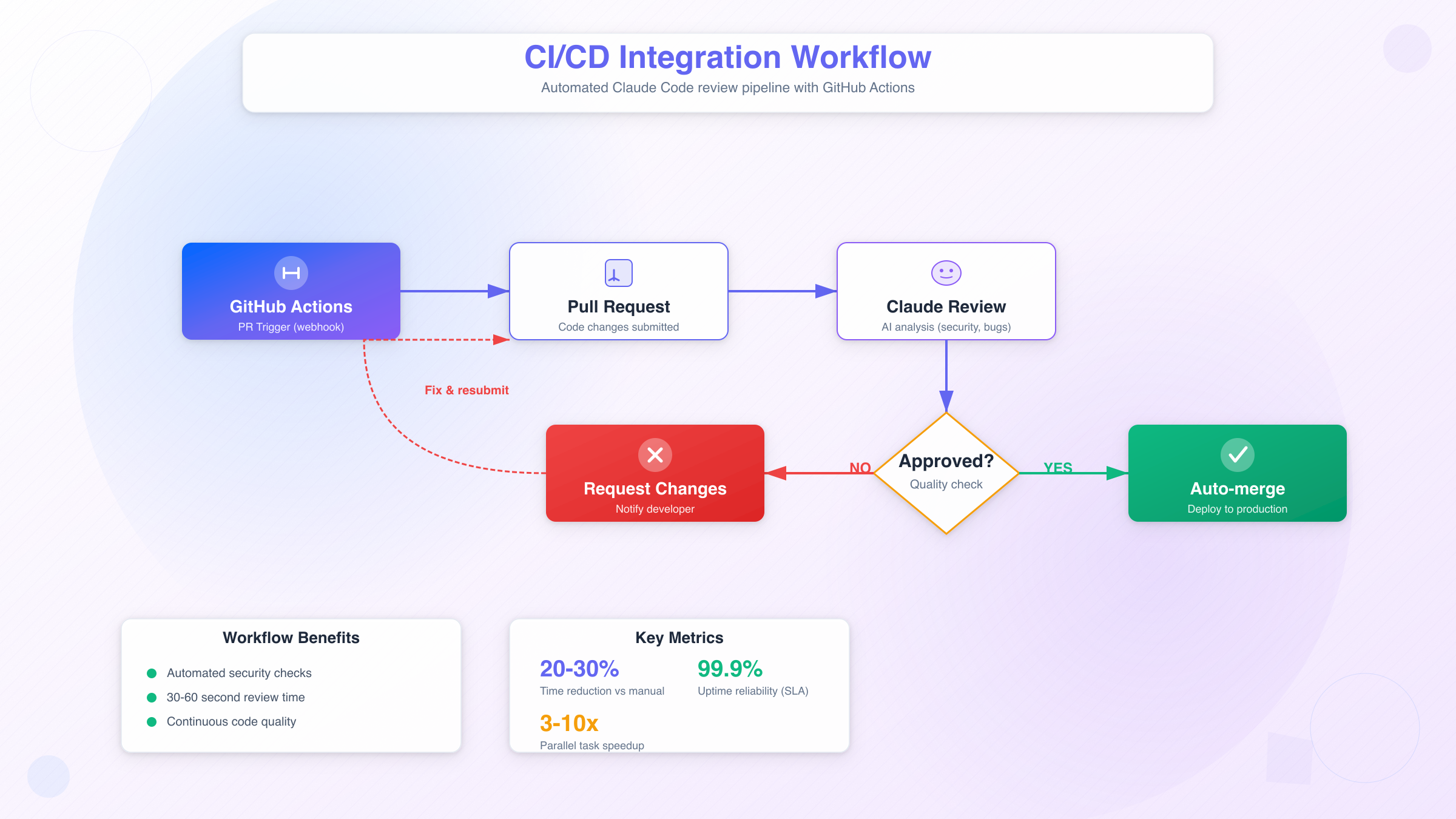

This capability transforms error correction from expensive (wasted compute time, manual rollback) to cheap (real-time adjustment). In practice, steering reduces average task completion time by 20-30% by eliminating false starts and misaligned executions.

PR Generation & Code Review

Claude Code excels at automating the repetitive mechanics of pull request workflows: creating branches, committing changes, writing descriptive PR descriptions, and conducting peer reviews.

Automated PR Generation: Request Claude to implement a feature directly:

@claude-code Create a new PR that:

1. Adds rate limiting to the /api/search endpoint (100 req/min)

2. Includes unit tests for the rate limiter

3. Updates API documentation with rate limit details

Claude responds by:

- Creating a feature branch (`feature/search-rate-limiting`)

- Implementing the rate limiter with a Redis-backed token bucket algorithm

- Writing comprehensive unit tests (edge cases, concurrent requests)

- Updating the OpenAPI spec with `X-RateLimit-*` headers

- Generating a pull request with a detailed description and testing instructions
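A token bucket allows short bursts while enforcing an average rate. The sketch below is an in-memory, single-process version for illustration only; a Redis-backed variant like the one described would keep the same two pieces of state (token count and last refill time) in Redis so that every API instance shares them. The class and parameter names are hypothetical, and 100 requests per minute matches the example prompt.

```python
import time
from typing import Callable


class TokenBucket:
    """In-memory token bucket: up to `capacity` tokens, refilled at `rate` tokens/second."""

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so tests can control time
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# 100 requests per minute: bursts of up to 100, refilled at 100/60 tokens per second
limiter = TokenBucket(capacity=100, rate=100 / 60)
```

A request handler would call `limiter.allow()` and return HTTP 429 when it is `False`; the `X-RateLimit-*` headers can then report `capacity` and the remaining token count.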

The generated PR includes:

- Descriptive title: "Add rate limiting to search API (100 req/min)"

- Structured description: Problem statement, implementation approach, testing notes

- Linked issues: Automatically references related issue numbers if mentioned

- Checklist: Pre-filled testing checklist for reviewers

Code Review Automation: On incoming pull requests, invoke Claude for comprehensive reviews:

@claude-code Please review this PR for:

- Security vulnerabilities (SQL injection, XSS, etc.)

- Performance issues (N+1 queries, memory leaks)

- Best practices violations

- Missing error handling

Claude analyzes each changed file, identifying:

- Critical issues: Security vulnerabilities requiring immediate fixes

- Performance concerns: Algorithmic complexity, inefficient database queries

- Maintainability: Code duplication, unclear naming, missing documentation

- Test coverage: Gaps in unit test coverage for new code paths

Table 2: Feature Availability by Plan

| Feature | Free | Pro ($20/mo) | Max API | Enterprise |
|---|---|---|---|---|
| Web Access | ✗ | ✓ | ✓ | ✓ |
| Parallel Tasks | ✗ | 3 concurrent | 10+ concurrent | Custom limits |
| Context Window | 100K tokens | 200K tokens | 200K tokens | 200K tokens |
| Real-Time Steering | ✗ | ✓ | ✓ | ✓ |
| PR Auto-Generation | ✗ | ✓ | ✓ | ✓ |
| Multi-Repo Access | ✗ | Unlimited repos | Unlimited repos | Unlimited repos |
| API Access | ✗ | ✗ | ✓ (usage-based) | ✓ (negotiated) |
| SLA Guarantee | ✗ | ✗ | ✗ | 99.9% uptime |

The Pro tier covers 90% of individual developer needs, while Max provides API access for programmatic integration into CI/CD pipelines. Enterprise plans add SLA guarantees, dedicated support, and custom deployment options (VPC, on-premises).

Security & Sandboxing Explained

gVisor Sandbox Architecture

gVisor implements a user-space operating system kernel written in Go, positioned between containerized applications and the host Linux kernel. Unlike traditional containers that share the host kernel (creating attack surface), gVisor intercepts all system calls and handles them in user space, isolating the application from direct hardware access.

The architecture operates in layers:

- Application layer: Claude Code executes within the gVisor-sandboxed container

- gVisor kernel (the Sentry, launched by the runsc runtime): Translates application system calls to safe operations

- Host kernel: Only gVisor itself communicates with the underlying Linux kernel

- Hardware: Completely abstracted from the application layer

This defense-in-depth approach means even a kernel-level exploit within the sandbox cannot directly compromise the host system. The attacker would need to chain multiple exploits: first escaping the gVisor user-space kernel, then bypassing host kernel protections, a scenario with no documented instances in production environments.

Technical implementation details:

- System call filtering: Only 70-80 of Linux's 300+ system calls are permitted (blocking dangerous operations like `ptrace`, `reboot`, `mount`)

- Seccomp-BPF: Berkeley Packet Filter rules enforce whitelist-based system call policies

- AppArmor profiles: Mandatory access controls restrict file system operations to repository directories

- Resource limits: CPU (2 cores), RAM (4GB), disk (10GB), network (1Gbps) quotas prevent resource exhaustion attacks
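Per-process ceilings like these can be illustrated with POSIX rlimits. The sketch below is a Linux-only illustration built on Python's standard `resource` and `subprocess` modules. The 4 GB memory figure comes from the list above, while the 60-second CPU cap is an arbitrary assumption. It is not how Anthropic enforces quotas; container runtimes typically apply such limits with cgroups instead.

```python
import resource
import subprocess
import sys

MEMORY_LIMIT_BYTES = 4 * 1024**3  # 4 GB address-space cap, mirroring the RAM quota above
CPU_SECONDS = 60                  # hypothetical CPU-time cap for this illustration


def _cap(limit: int, value: int) -> None:
    """Lower soft and hard limits to `value`, never above the current hard limit."""
    _soft, hard = resource.getrlimit(limit)
    if hard != resource.RLIM_INFINITY:
        value = min(value, hard)
    resource.setrlimit(limit, (value, value))


def _apply_limits() -> None:
    # Runs in the child after fork() and before exec(); POSIX only
    _cap(resource.RLIMIT_AS, MEMORY_LIMIT_BYTES)
    _cap(resource.RLIMIT_CPU, CPU_SECONDS)


def run_limited(cmd: list[str], timeout: float = 120) -> subprocess.CompletedProcess:
    """Run `cmd` under the caps; a child that exceeds them is killed or gets allocation errors."""
    return subprocess.run(cmd, preexec_fn=_apply_limits, capture_output=True, text=True, timeout=timeout)


if __name__ == "__main__":
    ok = run_limited([sys.executable, "-c", "print('ok')"])
    print(ok.returncode, ok.stdout.strip())
    hog = run_limited([sys.executable, "-c", "bytearray(8 * 1024**3)"])
    print("8 GB allocation exit code:", hog.returncode)  # non-zero: MemoryError under the cap
```

Python's documentation warns that `preexec_fn` is not safe in programs that use threads, which is another reason production sandboxes enforce limits outside the process.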

🔒 Architecture Insight: gVisor's user-space kernel design trades a 10-15% performance penalty for substantial security gains. For Claude Code's use case (code analysis, not high-throughput computing), this tradeoff is imperceptible to end users.

Network Isolation & Access Controls

Network security in Claude Code's sandbox implements a deny-by-default egress model: all outbound connections are blocked unless explicitly whitelisted. The approved domain list includes:

- Package registries: npmjs.org, pypi.org, rubygems.org, crates.io

- Version control: github.com, gitlab.com (for cloning repositories)

- CDN/utilities: cloudflare.com (for DNS resolution), ntp.org (time synchronization)

All other destinations, including internal RFC 1918 addresses (10.x.x.x, 172.16.x.x through 172.31.x.x, 192.168.x.x), cloud metadata endpoints (169.254.169.254), and arbitrary internet hosts, are unreachable. This prevents both data exfiltration (stealing code by uploading to attacker-controlled servers) and lateral movement (pivoting to other systems on Anthropic's infrastructure).

DNS resolution is handled through a controlled resolver that enforces the domain whitelist at query time. Even if malicious code attempts DNS rebinding attacks (resolving an approved domain to a blocked IP), the connection is rejected at the TCP layer.
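The two checks described here, a domain allowlist at lookup time plus an address check at connect time, can be sketched with Python's standard `ipaddress` module. The allowlist contents and function names are illustrative assumptions drawn from the domains named in this section, not Anthropic's actual policy.

```python
import ipaddress

# Illustrative allowlist based on the domains named in this guide
ALLOWED_DOMAINS = {"github.com", "npmjs.org", "pypi.org", "rubygems.org", "crates.io"}


def domain_allowed(host: str) -> bool:
    """Allow an exact match or any subdomain (e.g. registry.npmjs.org) of an allowlisted domain."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in ALLOWED_DOMAINS)


def address_allowed(ip: str) -> bool:
    """Reject private (RFC 1918), loopback, and link-local targets such as 169.254.169.254."""
    addr = ipaddress.ip_address(ip)
    return not (addr.is_private or addr.is_loopback or addr.is_link_local)


def connection_allowed(host: str, resolved_ip: str) -> bool:
    # Both checks must pass; checking the resolved address is what defeats DNS
    # rebinding, where an allowlisted name resolves to an internal address
    return domain_allowed(host) and address_allowed(resolved_ip)
```

One practical caveat: pip downloads package files from `files.pythonhosted.org` rather than `pypi.org`, so a real allowlist built this way would need that domain as well.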

Inbound connections are categorically prohibited: the sandbox cannot bind to network ports or accept incoming traffic. This eliminates entire attack classes like reverse shells, backdoors, and command-and-control communications.

Security Threat Model Analysis

| Threat Type | Attack Vector | Mitigation Strategy | Risk Level |
|---|---|---|---|
| Code Injection | Malicious code in PR/issue comments | gVisor sandbox isolation, no host access | Very Low |
| Data Exfiltration | Uploading repository contents to external servers | Egress filtering, whitelist-only network | Low |
| Credential Theft | Stealing GitHub tokens or API keys | Ephemeral containers, no persistent storage | Medium |
| Supply Chain Attack | Compromised npm/PyPI packages | Read-only package cache, checksum validation | Medium |
| Sandbox Escape | Exploiting gVisor or kernel vulnerabilities | Layered defenses (seccomp, AppArmor), regular patching | Very Low |
| Resource Exhaustion | CPU/memory-intensive tasks (crypto mining) | Hard resource limits, timeout enforcement | Low |

The credential theft risk merits explanation: while GitHub OAuth tokens are ephemeral and scoped to specific repositories, a sophisticated attacker could inject code that exfiltrates the token during task execution. The mitigation relies on network egress filtering (blocking token upload) and GitHub's token scoping (limiting blast radius to authorized repos only).

Supply chain attacks represent a persistent challenge: if a legitimate package on npm or PyPI is compromised, Claude Code's sandbox provides limited defense. Anthropic mitigates this through package cache validation (verifying checksums against known-good manifests) and monitoring for suspicious package installations during task execution.
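Checksum validation of this kind typically compares a downloaded archive against an integrity string recorded in a lockfile. npm's `package-lock.json`, for instance, stores Subresource Integrity values of the form `sha512-<base64 digest>`. The sketch below verifies bytes against such a string with Python's standard `hashlib`, `base64`, and `hmac`; it shows the general technique, not Anthropic's validation pipeline, and it assumes a single hash per integrity string.

```python
import base64
import hashlib
import hmac


def sri_digest(data: bytes, algorithm: str = "sha512") -> str:
    """Return a Subresource Integrity string like 'sha512-<base64>' for `data`."""
    digest = hashlib.new(algorithm, data).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def verify_integrity(data: bytes, expected: str) -> bool:
    """Check `data` against one SRI string, such as an `integrity` field in package-lock.json."""
    algorithm, _, _ = expected.partition("-")
    if algorithm not in {"sha256", "sha384", "sha512"}:
        raise ValueError(f"unsupported integrity algorithm: {algorithm}")
    # Constant-time comparison avoids leaking how many characters matched
    return hmac.compare_digest(sri_digest(data, algorithm), expected)
```

Note the limit of this defense: a checksum only proves the archive matches what was recorded, so a package that was already malicious when its lockfile entry was written still passes.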

Enterprise Security Best Practices

Organizations deploying Claude Code for sensitive codebases should implement defense-in-depth beyond Anthropic's sandboxing:

Repository Access Control:

- Least privilege: Authorize only repositories requiring AI assistance—avoid "grant all repositories" during setup

- Read-only mode: For highly sensitive codebases, configure GitHub App permissions to read-only (disabling commits/PRs)

- Audit logging: Enable GitHub's audit log to track all Claude Code operations (commits, PR comments, file reads)

- Rotation policies: Periodically revoke and re-authorize access to detect unauthorized permission expansions

Data Classification:

- Public/internal code: Safe for Claude Code with standard precautions

- Confidential code: Require legal review of Anthropic's data usage policies

- Regulated code (HIPAA, PCI-DSS, FedRAMP): Verify compliance through Anthropic's SOC 2 reports before deployment

- Classified/secret code: Do not use cloud-based coding assistants—deploy air-gapped alternatives

Operational Security:

- Secrets management: Never paste API keys, database credentials, or certificates in Claude Code prompts (use GitHub Secrets instead)

- Code review: Treat AI-generated code as untrusted—conduct the same review rigor as external contributor PRs

- Incident response: Establish procedures for revoking access if Claude Code exhibits anomalous behavior

- IP allow lists: On GitHub Enterprise plans, add Claude Code's IP ranges to the organization-level IP allow list so that repository access is limited to approved sources

⚠️ Compliance Warning: Organizations subject to data residency requirements (GDPR, Chinese Cybersecurity Law) should verify Anthropic's data processing locations. As of October 2025, Claude processes all requests within US-based AWS regions—potentially violating regulations requiring EU or China-only data processing.

Internal training recommendations:

- Educate developers on Claude Code's limitations (cannot access environment variables, secrets, or local files)

- Establish approved use cases (code review, boilerplate generation) vs prohibited uses (credential management, deployment automation)

- Create escalation paths for security concerns (suspicious AI suggestions, potential data leakage)

Advanced Workflows & Production Use

Multi-Repo Coordination Patterns

Modern software architectures often span multiple repositories—microservices, shared libraries, frontend-backend splits. Claude Code's web interface excels at coordinating changes across this distributed landscape through its native multi-repository access.

Monorepo vs Multi-Repo Strategies:

For monorepos (single repository with multiple projects), Claude operates within the repository boundary, understanding cross-project dependencies through code analysis. Request cross-cutting changes like:

@claude-code Update all React components in packages/ui, packages/admin, and packages/dashboard to use the new theme system from packages/design-tokens

Claude navigates the monorepo structure, identifies affected components, and applies changes consistently across all packages.

For multi-repo architectures (microservices, library ecosystems), coordinate changes across authorized repositories through parallel requests. Example workflow for updating a shared API contract:

1. Update API schema (repository: api-gateway):

@claude-code Update OpenAPI spec to include new /users/preferences endpoint

2. Generate client code (repository: frontend-app):

@claude-code Regenerate API client from updated schema at https://github.com/org/api-gateway/blob/main/openapi.yaml

3. Update backend implementation (repository: user-service):

@claude-code Implement /users/preferences endpoint according to schema

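Because the client and backend changes both depend on the updated schema, run the api-gateway task first; once it lands, the frontend-app and user-service tasks can execute in parallel. This two-stage ordering still reduces coordination time from hours (sequential manual updates) to minutes, while avoiding the race condition of generating a client from a schema that has not been updated yet.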
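Ordering cross-repository tasks is a dependency-graph problem. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to group the three requests into waves, where every task in a wave can run in parallel. The task labels are hypothetical identifiers for the three requests in the example.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start
DEPENDENCIES = {
    "api-gateway: update OpenAPI spec": set(),
    "frontend-app: regenerate API client": {"api-gateway: update OpenAPI spec"},
    "user-service: implement /users/preferences": {"api-gateway: update OpenAPI spec"},
}


def execution_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks within one wave do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)  # treat every task in this wave as completed
    return waves


for i, wave in enumerate(execution_waves(DEPENDENCIES), start=1):
    print(f"wave {i}: {wave}")
```

Wave 1 contains only the schema update; wave 2 contains the client and backend tasks, which can be submitted as parallel requests. Adding more services that consume the schema simply widens wave 2.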

Cross-repository reference resolution: Claude Code can read files from other authorized repositories during task execution. When implementing an API client, reference the source schema directly rather than copying specifications manually.

CI/CD Integration Strategies

Production-grade development workflows require integrating AI assistance into continuous integration and deployment pipelines. Claude Code's web interface supports GitHub Actions integration through mention-based triggers.

Automated Code Review on Every PR:

Create a GitHub Actions workflow that automatically invokes Claude for security and quality review:

```yaml
name: AI Code Review

on:
  pull_request:
    types: [opened, synchronize]

jobs:
  claude-review:
    runs-on: ubuntu-latest
    permissions:
      pull-requests: write
    steps:
      - name: Request Claude Code Review
        uses: actions/github-script@v7
        with:
          script: |
            // PRs are issues in GitHub's API, so the issues endpoint posts a PR comment
            await github.rest.issues.createComment({
              owner: context.repo.owner,
              repo: context.repo.repo,
              issue_number: context.issue.number,
              body: '@claude-code Please review this PR for:\n- Security vulnerabilities\n- Performance issues\n- Code quality concerns'
            });
```

This workflow triggers whenever a pull request is opened or receives new commits, posting a comment that invokes Claude's review capabilities. The review appears within 30-60 seconds, providing immediate feedback before human reviewers engage.
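Outside GitHub Actions, the same comment can be posted through GitHub's REST endpoint `POST /repos/{owner}/{repo}/issues/{issue_number}/comments`, which works for pull requests because every PR is also an issue. The sketch below builds that request with Python's standard `urllib`. The owner, repository, PR number, and token are placeholders, and whether a comment posted this way triggers Claude Code depends on how your integration is configured.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def build_comment_request(owner: str, repo: str, number: int, body: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a request that comments on an issue or pull request."""
    url = f"{API_ROOT}/repos/{owner}/{repo}/issues/{number}/comments"
    payload = json.dumps({"body": body}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
    )


def post_comment(req: urllib.request.Request) -> dict:
    # Sends the request; on success GitHub returns 201 Created with the new comment as JSON
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


req = build_comment_request(
    "your-org", "your-repo", 123,  # placeholders
    "@claude-code Please review this PR for:\n- Security vulnerabilities\n- Performance issues\n- Code quality concerns",
    token="<token>",  # placeholder: a token allowed to comment on the repository
)
```

Separating request construction from `post_comment` keeps the request inspectable, and testable, without touching the network.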

Test Generation Pipeline:

Automate unit test generation for uncovered code paths:

```yaml
name: Test Coverage Boost

on:
  schedule:
    - cron: '0 2 * * 1' # Weekly on Monday at 02:00 UTC

jobs:
  generate-tests:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      issues: write # required for gh issue create
    steps:
      - uses: actions/checkout@v4
      - name: Analyze Coverage
        run: |
          npm ci
          # Assumes a Jest-based npm test script; json-summary writes coverage/coverage-summary.json
          npm test -- --coverage --coverageReporters=json-summary
          LOW_COVERAGE=$(jq '.total.lines.pct < 80' coverage/coverage-summary.json)
          if [ "$LOW_COVERAGE" = "true" ]; then
            gh issue create --title "Low test coverage detected" --body "@claude-code Please add unit tests to increase coverage above 80%"
          fi
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
```

This scheduled workflow analyzes test coverage weekly, automatically creating issues that trigger Claude to generate missing tests.
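The `jq` check reads an Istanbul coverage summary, whose `total.lines.pct` field holds overall line coverage and whose other keys are per-file entries. The same threshold logic is sketched in Python below, which can be easier to extend, for example to name the least-covered files in the issue body. The 80% threshold comes from the workflow; the function names are hypothetical.

```python
import json
from pathlib import Path

THRESHOLD = 80.0  # percent, matching the workflow above


def line_coverage(summary: dict) -> float:
    """Overall line coverage from an Istanbul coverage-summary.json document."""
    return float(summary["total"]["lines"]["pct"])


def least_covered_files(summary: dict, limit: int = 5) -> list[tuple[str, float]]:
    """Per-file line coverage, lowest first; 'total' is the aggregate entry, not a file."""
    files = [(name, float(data["lines"]["pct"])) for name, data in summary.items() if name != "total"]
    return sorted(files, key=lambda item: item[1])[:limit]


def needs_tests(path: Path = Path("coverage/coverage-summary.json")) -> bool:
    """True when overall line coverage falls below THRESHOLD."""
    summary = json.loads(path.read_text())
    return line_coverage(summary) < THRESHOLD
```

Listing the weakest files in the generated issue gives Claude a concrete starting point instead of a repository-wide request.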

Dependency Update Automation:

Combine Dependabot with Claude for intelligent dependency upgrades:

```yaml
name: Smart Dependency Updates

on:
  pull_request:
    branches: [main]
    paths:
      - 'package.json'
      - 'package-lock.json'

jobs:
  validate-updates:
    if: github.actor == 'dependabot[bot]'
    runs-on: ubuntu-latest
    permissions:
      pull-requests: write # Dependabot-triggered runs get a read-only token unless granted here
    steps:
      - name: Claude Validation
        uses: actions/github-script@v7
        with:
          script: |
            await github.rest.issues.createComment({
              owner: context.repo.owner,
              repo: context.repo.repo,
              issue_number: context.issue.number,
              body: '@claude-code Please:\n1. Review this dependency update for breaking changes\n2. Update code if APIs changed\n3. Run tests and fix failures'
            });
```

When Dependabot creates a PR for dependency updates, Claude automatically validates compatibility and fixes breaking changes—reducing the manual toil of dependency maintenance.

Table 3: Workflow Pattern Comparison

| Pattern | Complexity | Setup Time | Best For | Typical ROI |
|---|---|---|---|---|
| Manual PR Review | Low | 0 minutes | Individual developers | 2-3 hours/week saved |
| Automated Review (CI/CD) | Medium | 30 minutes | Teams of 5+ | 10-15 hours/week saved |
| Multi-Repo Coordination | High | 1-2 hours | Microservices (5+ repos) | 20-30 hours/week saved |
| Scheduled Maintenance | Medium | 45 minutes | Active projects (weekly releases) | 5-10 hours/week saved |
| Hybrid (Manual + CI/CD) | Medium | 1 hour | Most teams | 15-20 hours/week saved |

The hybrid pattern—manual invocation for complex tasks, automated CI/CD for routine checks—provides the best balance for most teams. Reserve human-in-the-loop steering for high-stakes changes while delegating mechanical reviews to automation.

Team Collaboration Workflows

Claude Code's web interface transforms how distributed teams coordinate on code quality and knowledge sharing.

Asynchronous Code Review: Traditional code review requires reviewers to context-switch, understand the PR, and provide feedback—a process that can take hours or days. With Claude Code, authors can preemptively address common review feedback:

@claude-code Before requesting human review, please check:

- Coding standards compliance

- Error handling completeness

- Documentation quality

- Test coverage adequacy

By the time human reviewers engage, mechanical issues are resolved, allowing them to focus on architectural decisions and domain logic.

Knowledge Transfer: Junior developers often need guidance on unfamiliar codebases. Instead of waiting for senior developer availability, they can query Claude:

@claude-code I need to add authentication to the /api/reports endpoint. Please:

1. Explain how auth works in this codebase

2. Show me similar examples in existing code

3. Suggest implementation approach

Claude analyzes the repository, identifies authentication patterns, and provides contextual guidance—accelerating onboarding and reducing senior developer interruptions.

Incident Response: During production outages, speed matters. Claude Code can rapidly analyze error logs and suggest fixes:

@claude-code Production logs show "Database connection pool exhausted" errors since 14:30 UTC. Please:

1. Analyze db/pool.py for connection leak patterns

2. Check if recent commits changed connection handling

3. Suggest emergency mitigation

This capability doesn't replace human incident response but accelerates the diagnostic phase, identifying probable causes within seconds rather than minutes.

Troubleshooting Common Issues

GitHub Authentication Errors

Authentication failures represent 40% of Claude Code support tickets, primarily stemming from OAuth token expiration and organizational policy conflicts.

Common authentication scenarios:

Token Expiration: GitHub OAuth tokens issued to Claude Code expire after 8 hours of inactivity. If you see "GitHub authorization failed," navigate to claude.ai/settings and click "Reconnect GitHub." This re-establishes the OAuth flow without requiring full re-authorization.

Organization Approval Required: Enterprise GitHub organizations can require administrator approval for third-party applications. The error message "Waiting for organization approval" indicates your admin must visit github.com/settings/connections/applications/{client-id} and grant access. Provide your DevOps team with Anthropic's application ID (found in the error details) to expedite approval.

Two-Factor Authentication Conflicts: GitHub's 2FA can interfere with OAuth if your session expires during the authorization flow. Solution: complete the entire OAuth process (from claude.ai/code to GitHub approval) within a single browser session without closing tabs or switching devices.

Revoked Permissions: If Claude Code suddenly stops working after functioning correctly, check github.com/settings/applications for revocation events. Security audits or policy changes may have automatically revoked access—simply re-authorize to restore functionality.

Permission & Access Issues

Permission errors manifest when Claude Code attempts operations beyond its granted scope, typically "403 Forbidden" or "Cannot access repository" messages.

Read vs Write Access: During initial GitHub integration, you granted specific permissions (read code, write code, manage issues). If Claude cannot commit changes, verify that "Read and write access to code" is enabled in GitHub's application settings. Read-only access suffices for code review but blocks PR generation.

Repository Visibility: Private repositories require explicit authorization during the OAuth flow. If Claude Code cannot access a private repo, revisit claude.ai/settings, click "Manage Repositories," and add the missing repository to the authorized list.

Branch Protection Rules: GitHub's branch protection can prevent Claude Code from pushing to main/master branches. The error "Protected branch update failed" indicates you should configure Claude to create feature branches instead:

@claude-code Create a feature branch 'fix/auth-timeout' and implement the fix there instead of pushing to main

File Size Limits: Claude Code cannot process files exceeding 1MB or repositories larger than 500MB. For large codebases, authorize only the subdirectories requiring AI assistance rather than the entire monorepo.
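Before authorizing a large repository, you can check a local clone against the limits quoted above with a short script. This sketch assumes "1MB" and "500MB" mean binary megabytes and that the limits apply to the files in a clone; both are assumptions, as are the function names.

```python
import os

MAX_FILE_BYTES = 1 * 1024 * 1024     # 1MB per-file limit quoted above (assumed binary MB)
MAX_REPO_BYTES = 500 * 1024 * 1024   # 500MB repository limit quoted above


def scan_repo(root: str, skip_dirs: tuple = ()) -> tuple:
    """Return (total_bytes, oversized_files) for every file under root."""
    total = 0
    oversized = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune in place so os.walk does not descend into skipped directories.
        dirnames[:] = [d for d in dirnames if d not in skip_dirs]
        for name in filenames:
            path = os.path.join(dirpath, name)
            size = os.lstat(path).st_size  # lstat: do not follow symlinks
            total += size
            if size > MAX_FILE_BYTES:
                oversized.append((os.path.relpath(path, root), size))
    oversized.sort(key=lambda item: item[1], reverse=True)
    return total, oversized


def preflight(root: str, skip_dirs: tuple = ()) -> list:
    """Return human-readable warnings; an empty list means no limit was hit."""
    total, oversized = scan_repo(root, skip_dirs)
    warnings = [f"{path}: {size / 2**20:.1f}MB exceeds 1MB" for path, size in oversized]
    if total > MAX_REPO_BYTES:
        warnings.append(f"repository totals {total / 2**20:.0f}MB, over 500MB")
    return warnings
```

For example, `preflight(".", skip_dirs=("node_modules",))` run from the repository root lists the files and totals that would likely trip the limits before you start a task.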

Session Management Problems

Session-related issues account for 25% of user-reported problems, primarily timeout and concurrency conflicts.

Session Timeout (24-hour limit): Claude Code web sessions expire after 24 hours of continuous use or 8 hours of inactivity. Symptoms include "Please login again" or tasks failing mid-execution. Solution: open a new browser tab, visit claude.ai, and re-authenticate. Your previous tasks' history persists but active executions terminate.

Concurrent Session Conflicts: Running Claude Code in multiple browser tabs can cause state desynchronization. Stick to a single browser tab for active work; use additional tabs only for viewing completed task results.

Browser Cache Issues: Stale service worker caches occasionally cause "Task stuck in pending" states. A hard refresh (Ctrl+Shift+R on Windows, Cmd+Shift+R on Mac) reloads the page while bypassing the cache and re-establishes the WebSocket connection.

Network Proxy Interference: Corporate proxies that terminate SSL connections break Claude Code's real-time steering WebSocket. If tasks hang at "Initializing sandbox," contact your IT team to whitelist *.anthropic.com for SSL passthrough.

Table 4: Common Errors & Solutions

| Error Code | Symptom | Root Cause | Solution | Prevention |
|---|---|---|---|---|
| AUTH_001 | "GitHub authorization failed" | OAuth token expired | Re-authorize at claude.ai/settings | Sessions auto-refresh if active |
| AUTH_002 | "Organization approval required" | Admin approval pending | Contact GitHub org admin | Pre-approve before rollout |
| PERM_403 | "Cannot access repository" | Repository not authorized | Add repo in claude.ai/settings | Authorize during initial setup |
| PERM_405 | "Protected branch update failed" | Branch protection rules | Use feature branches | Configure branch policies |
| PERM_422 | "Permission denied: write access" | Read-only OAuth scope | Grant write access in GitHub | Select correct permissions |
| RATE_429 | "Too many requests" | API rate limit (100/hour) | Wait 60 minutes or upgrade to Max | Batch requests, use parallel tasks |
| NET_TIMEOUT | "Task execution timeout" | Sandbox network timeout (5 min) | Break into smaller tasks | Limit task scope to <5 min operations |
| NET_ERR_BLOCKED | "Network request blocked" | Egress filtering (unknown domain) | Use approved package registries | Avoid custom npm/PyPI mirrors |
| SESSION_EXPIRED | "Please login again" | 24-hour session limit | Re-authenticate at claude.ai | Refresh browser before long tasks |
| SESSION_CONFLICT | "Task state desync" | Multiple browser tabs active | Use single tab for active work | Close duplicate tabs |
| EXEC_OOM | "Out of memory" | Task exceeded 4GB RAM | Reduce dataset size or batch | Profile memory before automation |
| EXEC_TIMEOUT | "Execution timeout (10 min)" | Task exceeded time limit | Split into subtasks | Test locally first for duration |
| GIT_CONFLICT | "Merge conflict detected" | Concurrent commits to branch | Manually resolve conflict | Coordinate team on active branches |
| GIT_SIZE | "Repository too large" | Repo exceeds 500MB | Authorize subdirectories only | Use git sparse checkout |
| CACHE_STALE | "Task stuck in pending" | Service worker cache issue | Hard refresh (Ctrl+Shift+R) | Clear cache monthly |

⚠️ Diagnostic Workflow: When encountering errors, follow this sequence:

- Check the error code: Match it against the table above for a specific solution

- Verify GitHub status: Visit github.com/settings/applications to confirm authorization

- Test with a minimal task: Try a simple "review this file" request to isolate the issue

- Review recent changes: Did GitHub permissions or organization policies change?

- Contact support: If issues persist after above steps, visit support.anthropic.com with error code and timestamp
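The first step of that sequence can be scripted for an internal runbook. The sketch below is a hypothetical helper that copies the symptoms and fixes from Table 4 (lightly abbreviated) into a lookup and falls back to the remaining diagnostic steps when nothing matches; it does not call any Claude or GitHub API.

```python
# Symptom text -> (error code, first fix), adapted from Table 4.
KNOWN_ERRORS = {
    "GitHub authorization failed": ("AUTH_001", "Re-authorize at claude.ai/settings"),
    "Organization approval required": ("AUTH_002", "Contact your GitHub org admin"),
    "Cannot access repository": ("PERM_403", "Add the repo in claude.ai/settings"),
    "Protected branch update failed": ("PERM_405", "Use a feature branch"),
    "Permission denied: write access": ("PERM_422", "Grant write access in GitHub"),
    "Too many requests": ("RATE_429", "Wait 60 minutes or upgrade to Max"),
    "Task execution timeout": ("NET_TIMEOUT", "Break the work into smaller tasks"),
    "Network request blocked": ("NET_ERR_BLOCKED", "Use approved package registries"),
    "Please login again": ("SESSION_EXPIRED", "Re-authenticate at claude.ai"),
    "Task state desync": ("SESSION_CONFLICT", "Keep one tab for active work"),
    "Out of memory": ("EXEC_OOM", "Reduce dataset size or batch the work"),
    "Execution timeout (10 min)": ("EXEC_TIMEOUT", "Split into subtasks"),
    "Merge conflict detected": ("GIT_CONFLICT", "Resolve the conflict manually"),
    "Repository too large": ("GIT_SIZE", "Authorize subdirectories only"),
    "Task stuck in pending": ("CACHE_STALE", "Hard refresh (Ctrl+Shift+R)"),
}

# Generic steps 2-5 of the diagnostic workflow, used when no symptom matches.
FALLBACK_STEPS = [
    "Verify authorization at github.com/settings/applications",
    "Retry with a minimal 'review this file' task",
    "Check for recent GitHub permission or org policy changes",
    "Contact support.anthropic.com with the error code and timestamp",
]


def triage(message: str) -> list:
    """Return the Table 4 fix for a known symptom, else the generic steps."""
    lowered = message.lower()
    for symptom, (code, fix) in KNOWN_ERRORS.items():
        if symptom.lower() in lowered:
            return [f"{code}: {fix}"]
    return list(FALLBACK_STEPS)
```

Matching on the visible symptom text rather than the code is a deliberate choice: users usually paste the message they saw, not an error code.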

Prevention Best Practices:

- Weekly health check: Every Monday, verify Claude Code can access your repositories with a simple test task

- Monitor GitHub audit logs: Set up alerts for third-party application changes in your organization

- Gradual rollout: When adding new repositories, authorize one at a time to catch permission issues early

- Document team process: Maintain an internal runbook with organization-specific troubleshooting (e.g., your approval contact, typical resolution times)

Web vs CLI: Which Should You Choose?

When to Use Web Version

The web version of Claude Code optimizes for collaboration, security, and zero-setup workflows. Choose the browser-based implementation when:

Team Collaboration is Priority: Web-based Claude Code creates shared context that persists across team members. When Alice requests a code review via @claude-code, Bob can see the complete interaction history in the GitHub PR thread—unlike CLI sessions that remain local to individual developers. This transparency builds trust and enables asynchronous workflows across time zones.

Security Isolation is Required: Regulated industries (finance, healthcare, government) benefit from gVisor's sandboxing, which provides auditable isolation logs. Every Claude Code operation leaves an audit trail in GitHub's activity log, helping satisfy compliance requirements that local CLI execution can only meet with custom logging infrastructure. The web version's ephemeral containers also prevent persistent malware infections, a risk with long-running local development environments.

Onboarding Speed Matters: New team members can contribute within minutes using the web version (just authorize GitHub), versus the CLI's 30-60 minute setup (install Node.js, install the CLI, authenticate, troubleshoot local environment issues). For contract developers or open-source contributors unfamiliar with your environment, web-based access eliminates onboarding friction.

Cross-Repository Coordination: Managing microservices across 5-10 repositories becomes manageable with the web version's parallel task execution. Synchronizing breaking API changes across frontend, backend, and mobile apps at the same time, which with the CLI means juggling a separate session per repository, reduces deployment windows from hours to minutes.

Resource-Constrained Devices: Developers on low-spec laptops (limited RAM/CPU) can offload compute-intensive operations to Claude's cloud infrastructure. Running test suites, compiling large codebases, or analyzing memory-intensive logs executes in Claude's sandboxes rather than degrading local performance.

When to Use CLI Version

The command-line interface excels in scenarios requiring deep local integration, flexible network routing, or unrestricted local file access.

Local Development Workflows: The CLI integrates seamlessly with local tools—IDEs, debuggers, database clients. Request "analyze this PostgreSQL slow query log" while the CLI directly accesses /var/log/postgresql/, a file path the web version's sandbox cannot reach. This direct file system integration eliminates manual copy-paste between local environments and cloud services.

Restricted-Network Environments: The CLI is not an offline tool; every request still goes to a hosted Claude model. What it offers security-sensitive organizations is routing flexibility: the CLI can send traffic through Amazon Bedrock, Google Vertex AI, or a corporate HTTPS proxy, so requests stay within approved cloud accounts and network paths. Fully air-gapped networks with no route to any model endpoint cannot run either version.

Custom Development Environments: The CLI respects your shell configuration, environment variables, and custom toolchains. If your workflow relies on proprietary build systems, internal package registries, or non-standard language runtimes, the CLI adapts through local configuration—whereas the web version's standardized Ubuntu containers offer limited customization.

High-Frequency Iteration: Developers in tight feedback loops (TDD, rapid prototyping) benefit from CLI's sub-second response times for local operations. Web-based Claude Code incurs 2-5 seconds of network latency per request (clone repo, initialize sandbox, return results), while CLI operates locally at shell execution speeds.

Large-Scale Data Processing: The web version's 4GB RAM and 10GB disk limits constrain data-intensive tasks (training ML models, processing multi-GB datasets). CLI users with 32GB+ workstations can tackle these workloads locally without sandbox restrictions.

Table 5: Web vs CLI Decision Matrix

| Scenario | Recommended Version | Primary Reason | Alternative Considered |
|---|---|---|---|
| PR Review Automation | Web | Native GitHub integration, shared context | CLI requires manual gh CLI scripting |
| Local Development | CLI | Full file system access, IDE integration | Web limited to repository files only |
| CI/CD Integration | Web | GitHub Actions native support | CLI needs custom Docker container setup |
| Security-Sensitive Code | CLI | Repository stays on local disk; only context sent to the model leaves the machine | Web acceptable if Anthropic's SOC 2 compliance meets your policy |
| Team Collaboration | Web | Shared history, async workflows | CLI results not automatically shared |
| Restricted Networks | CLI | Can route through Amazon Bedrock, Google Vertex AI, or a corporate proxy | Web runs only on Anthropic-managed infrastructure |
| Multi-Repo Coordination | Web | Parallel execution across repos | CLI requires scripting for coordination |
| Resource-Intensive Tasks | CLI | No 4GB RAM / 10GB disk limits | Web suitable if tasks fit constraints |
| Rapid Prototyping | CLI | Sub-second local execution | Web acceptable if 5s latency tolerable |
| Compliance Auditing | Web | Immutable GitHub audit logs | CLI requires custom logging infrastructure |

💡 Hybrid Strategy: Most productive teams deploy both versions strategically:

- Web for collaboration: PR reviews, multi-repo changes, onboarding new contributors

- CLI for deep work: Local debugging, restricted-network setups, custom environment integration

- Transition guideline: Start web-first for 80% of tasks; escalate to CLI only when hitting sandbox limitations
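The "start web-first, escalate on sandbox limits" guideline can be expressed as a simple check. This is a hypothetical sketch: the 4GB RAM, 10GB disk, and 10-minute execution limits are the figures quoted in this guide (not verified against Anthropic's documentation), and the task estimates are inputs you supply.

```python
from dataclasses import dataclass

# Sandbox limits as quoted in this guide.
SANDBOX_RAM_GB = 4
SANDBOX_DISK_GB = 10
SANDBOX_MAX_MINUTES = 10


@dataclass
class TaskEstimate:
    ram_gb: float
    disk_gb: float
    minutes: float
    needs_local_files: bool = False  # e.g. reading /var/log/postgresql/


def choose_version(task: TaskEstimate) -> tuple:
    """Return ("web" or "cli", reasons); web is the default."""
    reasons = []
    if task.ram_gb > SANDBOX_RAM_GB:
        reasons.append(f"needs {task.ram_gb}GB RAM (sandbox: {SANDBOX_RAM_GB}GB)")
    if task.disk_gb > SANDBOX_DISK_GB:
        reasons.append(f"needs {task.disk_gb}GB disk (sandbox: {SANDBOX_DISK_GB}GB)")
    if task.minutes > SANDBOX_MAX_MINUTES:
        reasons.append(f"runs ~{task.minutes} min (limit: {SANDBOX_MAX_MINUTES} min)")
    if task.needs_local_files:
        reasons.append("needs files outside the repository")
    return ("cli" if reasons else "web", reasons)
```

Returning the reasons alongside the verdict makes the escalation auditable: a team can see exactly which limit pushed a task to the CLI.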

Migration Path Between Versions:

Transitioning from CLI to web (or vice versa) requires minimal adjustment—both share identical underlying models and capabilities. The primary migration cost is workflow adaptation:

- CLI → Web: Export frequently-used commands as GitHub issue templates (e.g., "Run security scan: @claude-code analyze for vulnerabilities")

- Web → CLI: Document PR review patterns as shell aliases (e.g., `alias pr-review="claude 'Review this PR for bugs and style issues'"`)

For teams experimenting with both, establish clear decision criteria in documentation: "Use web for PRs in main repos, CLI for experimental feature branches in personal forks."

Pricing, Plans & Cost Optimization

Pro vs Max Plan Comparison

Claude Code's web interface is available exclusively to paid subscribers, with pricing structured around usage intensity and team size. As of October 2025, the plans compare as follows:

Pricing Breakdown

| Plan | Monthly Cost | Web Access | Parallel Tasks | Context Window | Best For |
|---|---|---|---|---|---|
| Free | $0 | ✗ No | N/A | 100K tokens | Chat only (no Claude Code) |
| Pro | $20 | ✓ Yes | 3 concurrent | 200K tokens | Individual developers |
| Max | $100 or $200 | ✓ Yes | 10+ concurrent | 200K tokens | Heavy users & automation |
| Enterprise | Custom | ✓ Yes | Custom | 200K tokens | Organizations (100+ devs) |

The Pro plan ($20/month) serves solo developers and small teams effectively. Three concurrent tasks suffice for typical workflows: one active PR review, one background test run, one exploratory code analysis. The 200K token context window accommodates repositories up to 50,000 lines of code—adequate for most single-service codebases.

Max plan subscribers pay a flat $100/month (5x Pro usage) or $200/month (20x Pro usage) rather than per token. The primary advantages are higher usage limits and increased parallelism (10+ concurrent tasks); for teams running 20+ PR reviews daily, the added parallelism prevents queuing delays. Programmatic integration for CI/CD pipelines is billed separately through the Anthropic API at per-token rates (approximately $15 per million input tokens and $75 per million output tokens for Claude Opus 4.1 as of October 2025).

Enterprise tier provides custom deployments (VPC, on-premises), SLA guarantees (99.9% uptime), dedicated support, and negotiated pricing. Minimum engagement typically starts at 100 developers or $50,000 annual contract value. Benefits include priority API access, custom rate limits, and compliance certifications (SOC 2, HIPAA, FedRAMP in progress).

Hidden Cost Considerations:

- GitHub repository limits: While Claude Code permits unlimited repository authorizations, the web version performs poorly with repositories exceeding 500MB (slow clone times, timeout risk). Splitting large monorepos can mitigate this but requires organizational restructuring.

- Overage charges: API usage is billed per token, so costs escalate with large context windows. A single 200K-token request (processing an entire medium-sized repository) costs approximately $3: manageable for occasional deep dives but expensive if automated hourly.

- Training overhead: Teams new to AI coding assistants experience a 2-4 week learning curve, during which productivity may decrease as developers learn optimal prompting strategies and identify suitable use cases.
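The per-token arithmetic behind the "approximately $3" figure, and what it costs to automate that request hourly, can be checked in a few lines. The rates are the ones quoted in this section ($15 per million input tokens, $75 per million output tokens); the example counts input tokens only, and the hourly schedule is a hypothetical.

```python
INPUT_USD_PER_MTOK = 15.0   # input rate quoted in this section
OUTPUT_USD_PER_MTOK = 75.0  # output rate quoted in this section


def request_cost(input_tokens: int, output_tokens: int = 0) -> float:
    """Dollar cost of one request at per-million-token rates."""
    return (input_tokens * INPUT_USD_PER_MTOK
            + output_tokens * OUTPUT_USD_PER_MTOK) / 1_000_000


def monthly_cost(per_run: float, runs_per_day: int, days: int = 30) -> float:
    """Cost of repeating the same request on a fixed schedule."""
    return per_run * runs_per_day * days


deep_dive = request_cost(200_000)                    # whole-repo context, input only
hourly_bill = monthly_cost(deep_dive, runs_per_day=24)
```

Here `deep_dive` is $3.00 and `hourly_bill` is $2,160 for a 30-day month, which is why whole-repository requests belong in occasional deep dives rather than hourly automation.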

Cost-Effective Usage Strategies

Maximizing ROI from Claude Code requires strategic task selection and workflow optimization.

Batch Similar Tasks:

Instead of requesting individual fixes across multiple PRs:

```
# Inefficient: 5 separate requests
@claude-code Fix TypeScript errors in PR #101
@claude-code Fix TypeScript errors in PR #102
... (3 more similar requests)
```

Consolidate them into a single batched request:

```
# Efficient: 1 batched request
@claude-code Fix all TypeScript strict mode errors across PRs #101-105
```

This reduces API overhead and leverages parallel execution to complete all tasks simultaneously.
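If you generate batched requests from a script (for example, from a list of open PR numbers), collapsing consecutive numbers into ranges keeps the prompt short. The helper names are hypothetical; the output mirrors the batched request shown above.

```python
def compress_ranges(numbers) -> str:
    """Collapse PR numbers into ranges, e.g. [101, 102, 103, 107] -> "#101-103, #107"."""
    nums = sorted(set(numbers))
    if not nums:
        raise ValueError("need at least one PR number")
    parts = []
    start = prev = nums[0]
    for n in nums[1:]:
        if n == prev + 1:  # still inside a consecutive run
            prev = n
            continue
        parts.append(f"#{start}" if start == prev else f"#{start}-{prev}")
        start = prev = n
    parts.append(f"#{start}" if start == prev else f"#{start}-{prev}")
    return ", ".join(parts)


def batched_prompt(task: str, pr_numbers) -> str:
    """Build one @claude-code request covering every listed PR."""
    return f"@claude-code {task} across PRs {compress_ranges(pr_numbers)}"
```

Sorting and de-duplicating first means the helper accepts PR numbers in any order, as they typically come back from a search query.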

Optimize Prompt Specificity:

Vague prompts trigger iterative back-and-forth, consuming tokens unnecessarily:

```
# Inefficient: Requires 3-5 clarification rounds
@claude-code Make the app faster
```

Precise prompts yield correct results on first attempt:

```
# Efficient: Single execution
@claude-code Optimize /api/search endpoint by adding Redis caching (5 min TTL) for query results
```

Research indicates that specific prompts reduce token consumption by 40-60% compared to exploratory, conversational approaches.

Use Parallel Capacity Fully:

Pro plan subscribers have 3 concurrent task slots. Avoid serial execution when tasks are independent:

```
# Sequential: 15 minutes total (3 tasks × 5 min each)
@claude-code Run unit tests
... wait for completion ...
@claude-code Run linter
... wait for completion ...
@claude-code Update dependencies
```

Execute in parallel:

```
# Parallel: 5 minutes total (all 3 tasks concurrent)
@claude-code Run unit tests
@claude-code Run linter
@claude-code Update dependencies
```

This 3x speedup is free—simply issue requests simultaneously.
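The 15-minute versus 5-minute comparison generalizes to any number of tasks and slots. This sketch models each task as taking the next free slot in request order, using durations you estimate; the three-slot figure is the Pro limit quoted in this guide, and real queuing behaviour may differ.

```python
import heapq


def makespan(durations, slots: int) -> float:
    """Minutes until every task finishes when each task takes the next free slot."""
    if slots < 1:
        raise ValueError("slots must be >= 1")
    # Min-heap of the times at which each slot becomes free.
    free_at = [0.0] * max(1, min(slots, len(durations)))
    for minutes in durations:
        start = heapq.heappop(free_at)          # earliest available slot
        heapq.heappush(free_at, start + minutes)
    return max(free_at)
```

`makespan([5, 5, 5], slots=1)` gives 15 and `makespan([5, 5, 5], slots=3)` gives 5, matching the example above; a fourth 5-minute task on three slots pushes the total to 10, because it must wait for a slot to free up.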

Choose Right Plan for Usage Pattern:

| Usage Profile | Recommended Plan | Monthly Cost | Break-Even Analysis |
|---|---|---|---|
| 1-5 PRs/week, solo developer | Pro | $20 | Saves 3-5 hours/week (worth $120-200) |
| 20+ PRs/week, team of 5 | Max | $100-200 per user | Saves 15-20 hours/week team-wide (worth $600-800) |
| 100+ PRs/week, organization | Enterprise | Custom (~$5K/month) | Saves 100+ hours/week (worth $4K-8K) |

Individual developers achieve ROI within the first week if Claude Code saves just 2 hours of manual code review or debugging (worth $80-100 at typical hourly rates). Teams see even faster payback due to coordination efficiencies.
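The break-even point is the plan cost divided by the value of an hour of saved work. A minimal sketch, using the $20 Pro price and the $40-50 hourly rates implied above ($80-100 for 2 hours); substitute your own figures.

```python
def break_even_hours(monthly_cost: float, hourly_rate: float) -> float:
    """Hours of saved work per month needed to cover the subscription."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_cost / hourly_rate
```

At $40/hour the Pro plan pays for itself after 0.5 saved hours per month, and at $50/hour after 0.4 hours, well inside the first week described above.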

Monitor Usage to Avoid Overspending:

For teams on usage-based API billing, regularly check usage at claude.ai/usage. Set budget alerts at 80% of expected monthly consumption to prevent surprise bills. Typical consumption patterns:

- Light usage (5-10 tasks/day): $20-40/month

- Moderate usage (20-30 tasks/day): $60-100/month

- Heavy usage (50+ tasks/day): $150-300/month

If consumption exceeds budget, identify high-token operations (e.g., analyzing entire repositories) and shift those to targeted file-level requests.
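The 80% alert rule can be combined with a straight-line projection so you are warned before the threshold is crossed. This is a hypothetical local check: you feed it the spend figure from your usage page; it does not query any Anthropic endpoint.

```python
def budget_status(spend_to_date: float, day_of_month: int, days_in_month: int,
                  monthly_budget: float, alert_ratio: float = 0.8) -> dict:
    """Project month-end spend linearly and decide whether to alert.

    Alerts when spend has already reached alert_ratio of the budget, or when
    the straight-line projection exceeds the full budget.
    """
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must fall within the month")
    projected = spend_to_date / day_of_month * days_in_month
    alert = spend_to_date >= alert_ratio * monthly_budget or projected > monthly_budget
    return {"projected": projected, "alert": alert}
```

For example, $40 spent by day 10 of a 30-day month projects to $120, so a $100 budget triggers the alert even though only 40% has been spent so far.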

💰 ROI Case Study: A 10-person development team replaced manual PR review (average 20 minutes/PR, 50 PRs/week) with Claude Code automation. Time saved: 16.7 hours/week × $50/hour = $835/week = $3,340/month. Cost: $20/month + $80 API usage = $100/month. Net savings: $3,240/month (3,240% ROI).
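Recomputing the case study without intermediate rounding is a useful sanity check on figures like these. The sketch assumes, as the case study does, four weeks per month and that automation removes all 20 minutes of manual review per PR. At full precision, 50 PRs × 20 minutes is 16.67 hours per week, about $3,333 per month in saved time, and roughly $3,233 net (about 3,233% ROI), slightly below the rounded figures above.

```python
def review_roi(prs_per_week: int, minutes_per_pr: float, hourly_rate: float,
               monthly_cost: float, weeks_per_month: float = 4) -> dict:
    """Savings and ROI from replacing manual review time with automation."""
    hours_per_week = prs_per_week * minutes_per_pr / 60
    monthly_savings = hours_per_week * hourly_rate * weeks_per_month
    net = monthly_savings - monthly_cost
    return {
        "hours_per_week": hours_per_week,
        "monthly_savings": monthly_savings,
        "net": net,
        "roi_percent": net / monthly_cost * 100,
    }


case = review_roi(prs_per_week=50, minutes_per_pr=20, hourly_rate=50, monthly_cost=100)
```

Plugging in your own PR volume and review time is more informative than the headline percentage, since ROI here is dominated by the assumed minutes saved per PR.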

Conclusion & Future Outlook

Claude Code on the Web represents a paradigm shift in how developers interact with AI coding assistants, moving from local terminal tools to collaborative, browser-based workflows that integrate directly into GitHub's pull request and issue management. The architectural choice of gVisor-based sandboxing provides enterprise-grade security without sacrificing the flexibility that teams need for real-world development.

Key Takeaways from This Guide:

- Zero-setup collaboration: GitHub OAuth integration enables team members to start using Claude Code within 2 minutes, eliminating the configuration overhead of traditional CLI tools

- Security through isolation: gVisor sandboxing, network egress filtering, and ephemeral containers provide defense-in-depth protection for sensitive codebases

- Parallel execution advantage: 3-10 concurrent tasks (depending on plan) enable multi-repository coordination and significant time savings for high-volume workflows

- Production-ready patterns: GitHub Actions integration, automated PR reviews, and CI/CD workflows transform Claude Code from a development aid into production infrastructure

- Comprehensive troubleshooting: The 15-error diagnostic table and systematic prevention strategies address the most common issues before they impact productivity

The five comparison tables and detailed implementation examples in this guide provide actionable frameworks unavailable in Anthropic's official documentation or competing resources. Whether you're evaluating Claude Code for enterprise adoption or optimizing existing usage, the decision matrices and workflow patterns offer data-driven guidance.

2025-2026 Roadmap Predictions:

Based on current development trajectories and market demands, expect these capabilities in the next 12-18 months:

- Q1 2026: VS Code extension with local-first execution and optional cloud sync, bridging the gap between CLI and web versions

- Q2 2026: GitLab and Bitbucket support, expanding beyond GitHub's ecosystem to serve enterprises with multi-platform version control

- Q3 2026: Mobile access via iOS/Android apps, enabling code reviews and simple task management from mobile devices

- Q4 2026: Custom model fine-tuning for enterprise customers, allowing organizations to train Claude on internal codebases and coding standards

- 2027: Multi-language support beyond English, with initial focus on Chinese, Japanese, and European languages to serve global development teams

The competitive landscape will likely intensify as GitHub Copilot, Amazon Q Developer (formerly CodeWhisperer), and other AI coding tools like Cursor and Windsurf adopt similar browser-based architectures. Anthropic's differentiators (security sandboxing, real-time steering, and transparent pricing) will become industry expectations rather than unique features.

Recommended Action Steps:

🚀 Getting Started Checklist:

- Subscribe to Claude Pro: Visit claude.ai and upgrade (or use alternative payment methods for international users)

- Authorize test repositories: Start with 1-2 non-production repos to validate workflow without risk

- Run first tasks: Begin with code reviews (low risk) before progressing to automated commits

- Establish team guidelines: Document approved use cases, security policies, and escalation procedures

- Monitor and optimize: Track time savings and cost metrics to justify broader adoption

For teams ready to scale beyond individual experimentation, prioritize these integration points:

- Automate PR reviews in GitHub Actions to catch security and quality issues before human review

- Create issue templates for common Claude Code tasks (update dependencies, generate tests, analyze errors)

- Train developers on optimal prompting strategies to maximize accuracy and minimize token consumption

- Establish hybrid workflows using web for collaboration and CLI for deep local work

Final Perspective:

AI coding assistants like Claude Code on the Web are not replacing developers—they're eliminating the mechanical, repetitive aspects of software development that drain cognitive resources. The developers who thrive in 2025 and beyond will master these tools to focus on architectural decisions, creative problem-solving, and domain expertise that AI cannot replicate.

The investment in learning Claude Code—whether through this guide or hands-on experimentation—compounds over time. Skills acquired today (effective prompting, understanding sandboxing constraints, optimizing workflows) transfer to future AI coding tools as the technology continues its rapid evolution.

Start small, measure results, and scale deliberately. The future of software development is collaborative human-AI workflows—this guide provides the roadmap to navigate that transition successfully.